The power of AI art: the Diffusion Model explained in plain language

In recent years, with the rapid development of artificial intelligence (AI) technology, we have witnessed revolutionary progress in many fields, especially in image generation. From the simple geometric shapes of the early days to images with a wide range of artistic styles and lifelike realism, today's AI has reached an incredible level of creation. Among image generation technologies, the Diffusion Model has become mainstream, and its distinctive working mechanism and output quality have made it a popular area of research and application. Many well-known tools, such as Midjourney, DALL-E 3, and Stable Diffusion, are applications based on the Diffusion Model.

The core idea of the Diffusion Model comes from the diffusion process in physics, in which substances gradually mix together, like a drop of ink spreading through water. The model learns to run that process in reverse, which is similar to separating substances that have been mixed together. In the context of image generation, this means gradually restoring a clear image from one that contains a large amount of random noise. The process is like restoring a stained photo to its original clean and clear appearance through step-by-step cleaning. The advantage of this approach is its ability to produce images with rich detail and texture while maintaining a high degree of creativity and control. This article starts with the basic concepts and gradually works through how the Diffusion Model operates and how it can be applied to generate stunning visual content.

What is the Diffusion Model?

Let's first imagine that you have a beautiful landscape photo, but you accidentally smear it with stains and dust. What will you do? An intuitive approach is to soak a cloth with detergent and rub vigorously, hoping to remove all the stains at once. But this can damage the photo, and the results won't be ideal.

Another smarter approach is to clean up gradually in stages. First, use a soft cloth with a small amount of detergent and gently wipe the surface to remove some stains. Then change to a more delicate cloth, dip it in special cleaning fluid, and carefully clean the details. Finally, use a soft suede cloth and gently wipe the surface to remove the last dust. Step by step in this way, only removing a little stain at a time, you can eventually restore the photo to its original appearance, which is both safe and effective.

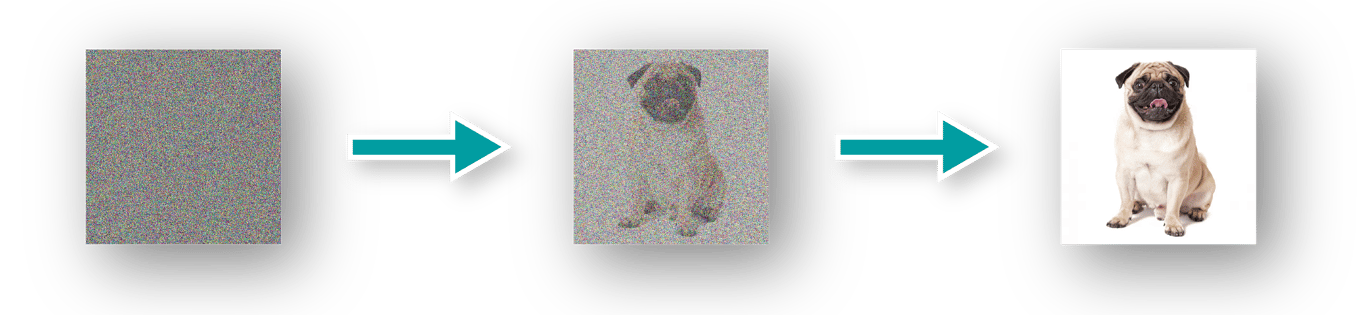

The Diffusion Model is based on this idea of "gradual cleaning". It breaks image generation down into two opposite processes: the forward "noise-adding" process and the reverse "noise-removing" process. In the noise-adding stage, we start from a clean image and gradually add random Gaussian noise (in statistics, the Gaussian distribution is a standard model for naturally occurring noise) until the image is completely covered by noise. This is like intentionally adding stains to a photo, with each stain appearing at random and gradually obscuring the original landscape.

In the noise-removing process, we start from this seemingly dirty image and use a carefully designed neural network to remove the noise gradually. Through deep learning, the network learns to tell noise apart from useful information in the image. At each stage of noise removal, the network restores a little more of the image, from blurry outlines to detailed textures, until a clear, lifelike image emerges.
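To make the noise-adding stage concrete, here is a minimal NumPy sketch. It assumes the linear noise schedule used in the DDPM paper listed in the references (1,000 steps, with per-step noise amounts rising from 0.0001 to 0.02). The function names `make_schedule` and `add_noise` and the 8x8 random array standing in for a photo are illustrative choices, not part of any library.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: returns, for every step, the fraction of the
    original image's variance that is still left."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(clean_image, step, alpha_bar, rng):
    """Jump directly to noise level `step` (0-indexed) in one shot."""
    noise = rng.standard_normal(clean_image.shape)
    noisy = (np.sqrt(alpha_bar[step]) * clean_image
             + np.sqrt(1.0 - alpha_bar[step]) * noise)
    return noisy, noise

rng = np.random.default_rng(0)
alpha_bar = make_schedule()

# Stand-in for a real photo whose pixel values are scaled to [-1, 1].
image = rng.uniform(-1.0, 1.0, size=(8, 8))

slightly_noisy, _ = add_noise(image, 10, alpha_bar, rng)      # a few light stains
almost_pure_noise, _ = add_noise(image, 999, alpha_bar, rng)  # photo fully covered
```

Early steps leave the picture almost untouched, while by the last step practically none of the original signal remains, which is why generation can later begin from pure noise.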

Powerful neural network

In the Diffusion Model, there is a neural network driven by deep learning, which is responsible for performing the complex task of removing noise. This network, similar to the neurons in the human brain, is composed of multiple layers of mathematical functions, each of which can learn to process specific information. When a noisy image is input to this network, it passes through a series of carefully designed layers, each of which gradually extracts and enhances important features in the image while suppressing and removing noise. Ultimately, the network outputs a clearer image with less noise. There are a large number of learnable parameters inside the network, and these parameters determine the strategy of how to remove noise. The training goal of the network is to make the output image as close as possible to a truly clean image.
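As a rough picture of "layers of mathematical functions with learnable parameters", the sketch below builds a tiny two-layer network in NumPy. It is untrained and far smaller than anything used in practice (the DDPM paper in the references uses a U-Net), and the sizes and the name `denoiser` are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
IMAGE_PIXELS = 8 * 8   # a tiny 8x8 "image", flattened to 64 numbers
HIDDEN_UNITS = 32

# The learnable parameters: training would adjust these numbers.
params = {
    "w1": rng.standard_normal((IMAGE_PIXELS, HIDDEN_UNITS)) * 0.1,
    "b1": np.zeros(HIDDEN_UNITS),
    "w2": rng.standard_normal((HIDDEN_UNITS, IMAGE_PIXELS)) * 0.1,
    "b2": np.zeros(IMAGE_PIXELS),
}

def denoiser(noisy_image, params):
    """Two stacked layers: each one is a simple function of the previous output."""
    flat = noisy_image.reshape(-1)
    hidden = np.maximum(0.0, flat @ params["w1"] + params["b1"])  # layer 1 + ReLU
    output = hidden @ params["w2"] + params["b2"]                 # layer 2
    return output.reshape(noisy_image.shape)

noisy_image = rng.standard_normal((8, 8))
predicted_noise = denoiser(noisy_image, params)   # same shape as the input
num_parameters = sum(p.size for p in params.values())
```

Even this toy version has a few thousand adjustable numbers; production image models have hundreds of millions or more.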

Let's go back to the example of cleaning up photos. Say you hire a cleaning expert to help you restore that dirty photo. This expert has years of cleaning experience and knows the best methods for dealing with different types of stains. You take the dirty photo to the expert, who looks closely at its condition and decides what the first cleaning step should be. Maybe a soft cloth to remove surface dust first, or a cleaner to treat a stubborn stain first. In short, the expert develops the best cleaning strategy for the specific condition of the photo. After the first step of cleaning, the expert returns the photo to you for review. If you feel it's not clean enough, you take the photo back to the expert for another round. You repeat this several times, with a little more cleaned each time, until you are satisfied with the quality of the photo.

In the Diffusion Model, the neural network plays the role of the cleaning expert. It receives a noisy image, removes part of the noise according to its own "cleaning strategy", and then outputs a cleaner image. This "cleaning strategy" is contained in the parameters of the network, and these parameters are learned through training. In other words, when the network processes a noisy image, it does not remove noise at random. It has learned to distinguish the real features of an image from unnecessary noise. It then works step by step, from the general outline to the subtle texture, revealing the original appearance of the image bit by bit.

The training process can be seen as teaching the cleaning expert how to remove noise. We prepare a large number of dirty photos (images with noise) and the corresponding clean photos (original images) for the expert (the network) to practice on. After each cleaning, we compare the expert's result (the network output) with the real clean photo. If there is still a gap, we tell the expert where the cleaning was not good enough and ask for an improvement. This is an iterative process, in which the expert (the network) learns something new after each failure and does better on the next try. Through continuous practice and improvement, the expert (the network) masters the best strategies for removing various types of noise.
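The practice-and-feedback loop described above can be sketched in a few lines. In the DDPM paper listed in the references, the network is trained to guess the noise that was added, and this sketch follows that convention. Everything else is a simplifying assumption: the "photos" are single numbers, the "expert" is one weight and one bias per noise level instead of a deep network, and the 20-level schedule and the names `sample_clean`, `make_dirty`, and `predict_noise` are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy noise schedule with only 20 levels (an assumption to keep the sketch fast).
NUM_STEPS = 20
betas = np.linspace(1e-3, 0.3, NUM_STEPS)
alpha_bar = np.cumprod(1.0 - betas)

# One (weight, bias) pair per noise level: the "cleaning strategy" to be learned.
weight = np.zeros(NUM_STEPS)
bias = np.zeros(NUM_STEPS)

def sample_clean(count):
    """Hypothetical dataset of clean one-pixel 'photos'."""
    return 0.5 * rng.standard_normal(count)

def make_dirty(clean, step):
    """Add a known amount of noise, and remember exactly which noise was added."""
    noise = rng.standard_normal(clean.shape)
    dirty = np.sqrt(alpha_bar[step]) * clean + np.sqrt(1.0 - alpha_bar[step]) * noise
    return dirty, noise

def predict_noise(dirty, step):
    return weight[step] * dirty + bias[step]

def average_loss(samples_per_step=2000):
    """Mean squared gap between the guessed noise and the true noise."""
    losses = []
    for step in range(NUM_STEPS):
        dirty, noise = make_dirty(sample_clean(samples_per_step), step)
        losses.append(np.mean((predict_noise(dirty, step) - noise) ** 2))
    return float(np.mean(losses))

loss_before = average_loss()

LEARNING_RATE = 0.1
for _ in range(6000):
    step = rng.integers(NUM_STEPS)                   # pick a random noise level
    dirty, noise = make_dirty(sample_clean(256), step)
    error = predict_noise(dirty, step) - noise       # where the guess fell short
    # Nudge the parameters in the direction that shrinks the squared error.
    weight[step] -= LEARNING_RATE * 2.0 * np.mean(error * dirty)
    bias[step] -= LEARNING_RATE * 2.0 * np.mean(error)

loss_after = average_loss()
```

Comparing `loss_before` with `loss_after` shows the expert improving with practice. The loss never reaches zero, because at light noise levels there is very little noise to find and any guess stays uncertain.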

The secret of generating images

After training is complete, we have a powerful neural network that can gradually restore a noisy image to a clean one. But the ultimate goal of the Diffusion Model is to generate new, never-before-seen images. How is this achieved? The secret is that we can start with random noise and then use the neural network to "clean" it step by step until we get a clean image. The process starts with nothing but noise and is refined pass by pass by the neural network, finally presenting a lifelike image.

Let's go back to the photo cleaning analogy once more. Now we have a trained cleaning expert (the neural network) on hand, but no dirty photos to recover. So we decide to "make" a dirty photo ourselves.

First, we prepare a noisy image full of random black and white dots, like a canvas splattered with dirty paint. We then ask the cleaning expert (the network) to clean this "canvas". After the first step of cleaning, some of the noise on the "canvas" is removed, and some fuzzy structures vaguely appear. We hand this result back to the cleaning expert and ask for the next step of cleaning.

In this way, the expert cleans step by step, and the image on the "canvas" gradually becomes clearer. Large shapes and outlines may come first, then details and textures, and finally some tiny tweaks. After many rounds of cleaning, the originally messy "canvas" of noise turns into a realistic image, perhaps a landscape photo or a portrait.

This is how the Diffusion Model generates images. It first creates an image of pure noise, then uses a trained neural network to remove the noise step by step, and finally obtains a clean and realistic image. The process is like that of a sculptor who starts from a featureless block of stone and carves it bit by bit until a lifelike statue emerges.
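The generation loop can be sketched as follows, using the sampling rule and linear schedule from the DDPM paper listed in the references. To keep the example self-contained, the trained network is replaced by a stand-in: the "images" are single numbers whose clean values are assumed to follow a bell curve with mean 2.0 and spread 0.5, a case where the best possible noise guess can be written down exactly. `DATA_MEAN`, `DATA_STD`, `predict_noise`, and `generate` are hypothetical names for this sketch.

```python
import numpy as np

DATA_MEAN, DATA_STD = 2.0, 0.5   # assumed statistics of the clean "images"

betas = np.linspace(1e-4, 0.02, 1000)
alphas = 1.0 - betas
alpha_bar = np.cumprod(alphas)

def predict_noise(noisy, step):
    """Stand-in for the trained network: the exact best noise guess
    when the clean data is Gaussian with the statistics above."""
    signal_mean = np.sqrt(alpha_bar[step]) * DATA_MEAN
    total_var = alpha_bar[step] * DATA_STD**2 + (1.0 - alpha_bar[step])
    return np.sqrt(1.0 - alpha_bar[step]) * (noisy - signal_mean) / total_var

def generate(num_samples, rng):
    x = rng.standard_normal(num_samples)              # start from pure noise
    for step in reversed(range(len(betas))):
        guessed_noise = predict_noise(x, step)
        # Remove the share of noise attributed to this step.
        x = (x - betas[step] / np.sqrt(1.0 - alpha_bar[step]) * guessed_noise) \
            / np.sqrt(alphas[step])
        if step > 0:
            # Add back a little fresh noise on every step except the last one.
            x = x + np.sqrt(betas[step]) * rng.standard_normal(num_samples)
    return x

samples = generate(5000, np.random.default_rng(0))
sample_mean, sample_std = samples.mean(), samples.std()
```

Although every sample begins as random noise centered on zero, the finished samples cluster around the statistics of the clean data, which is the one-number version of noise turning into a realistic picture.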

Conclusion

The Diffusion Model is one of the most important developments in image generation in recent years. By imitating the diffusion process in physics, it lets AI learn how to remove noise gradually and so generate high-quality images. This idea of gradual noise removal not only makes the image generation process more stable and controllable, but also opens up new possibilities for further improving generation quality.

At the same time, the Diffusion Model gives us a new perspective on how AI works. It tells us that AI does not simply "imagine" an image, but carves out the final result bit by bit through a meticulous noise removal process. And this process of going "from chaos to clarity" may be closer to the essence of human creation.

References:

Ho, J., Jain, A., & Abbeel, P. (2020). Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, 6840-6851.

Paper download: arXiv:2006.11239

Note: This article uses Claude 3 and Microsoft Copilot for some of the content development and editing.
