
The Computer as a Communication Device

Compiled from: The Computer as a Communication Device, a paper by J. C. R. Licklider and Robert Taylor

Within a few years, people will be able to communicate more effectively through a computer than face to face.

That is a rather startling thing to say, but it is our conclusion. As if in confirmation, a few weeks ago we took part in a technical meeting held through a computer. With the computer's help, the group accomplished in two days what would normally have taken a week.

We will say more about the mechanics of that meeting later; here it is enough to note that we were all in the same room. But we could have been thousands of miles apart and communicated just as effectively.

Our emphasis on people is deliberate. A communications engineer thinks of communicating as transferring information from one point to another in codes and signals.

But to communicate is more than to send and to receive. Do two tape recorders communicate when they play to each other and record from each other? Not really, not in our sense. We believe that communicators have to do something nontrivial with the information they send and receive. We believe we are entering a technological age in which we will be able to interact with the richness of living information: not merely in the passive way we have become accustomed to using books and libraries, but as active participants in an ongoing process, bringing something to it through our interaction with it, and not simply receiving something from it by our connection to it.

To people who telephone an airline's flight-information service, the tape recorder that answers seems more than a passive repository. It is an often-updated model of a changing situation: a synthesis of information collected, analyzed, evaluated, and assembled to represent a situation or process in an organized way.

Still, there is not much direct interaction with the airline information service; the recording is not changed by the customer's call. We want to emphasize something beyond one-way transfer: the increasing significance of the jointly constructive, mutually reinforcing aspect of communication, the part that goes beyond "now we both know a fact that only one of us knew before." When minds interact, new ideas emerge. We want to talk about the creative aspect of communication.

Creative, interactive communication requires a malleable medium that can be modeled, a dynamic medium in which premises flow into consequences, and above all a common medium to which all can contribute and with which all can experiment.

One such medium is at hand: the programmed digital computer. Its presence can change the nature and value of communication even more profoundly than did the printing press and the picture tube, for, as we shall show, a well-programmed computer can provide direct access both to information resources and to the processes for making use of them.

Communication: Comparing models

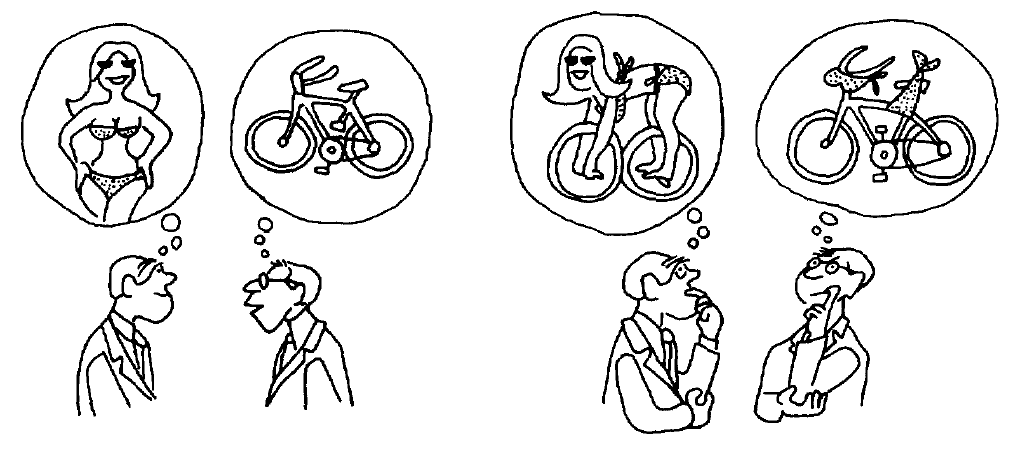

To understand how and why the computer can have such an effect on communication, we must examine the idea of modeling, both in a computer and with the help of a computer. We believe that modeling is the foundation and core of communication. Any communication between people about the same thing is a shared, revealing experience of their informational models of that thing. Each model is a conceptual structure of abstractions formed initially in the mind of one of the people who would communicate, and if the concepts in the mind of one would-be communicator are very different from those in the mind of another, there is no common model and no communication.

By far the most numerous, complex, and important models are those that reside in people's minds. In richness, plasticity, convenience, and economy, the mental model has no peer, but in other respects it falls short. No one knows just how it works. It serves its owner's hopes more faithfully than it serves reason. It has access only to the information stored in one person's head. It can be observed and manipulated by only one person.

Society rightly distrusts the modeling done by a single mind. Society demands consensus, agreement, or at least a majority. Fundamentally, this amounts to requiring that individual models be compared and brought into some degree of accord. The requirement is for communication, which we now define concisely as "cooperative modeling": cooperation in the construction, maintenance, and use of a model.

Unless we can compare models, how can we be sure that we are modeling collaboratively and that we are communicating?

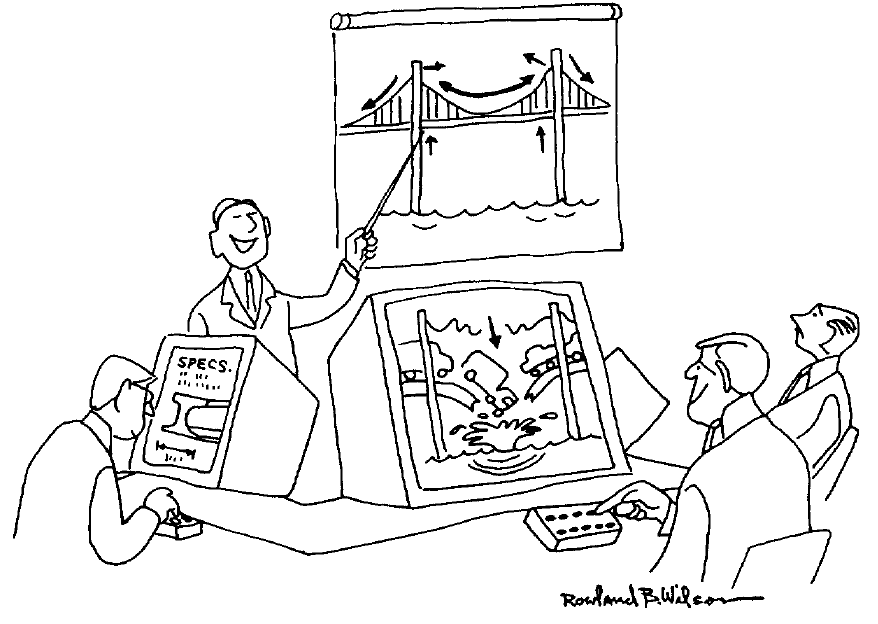

When people communicate face to face, they externalize their models so they can be sure they are talking about the same thing. Even a simple externalized model such as a flow diagram or an outline, because it can be seen by all the communicators, serves as a focus for discussion. It changes the nature of communication: when communicators have no such common framework, they merely make speeches at each other; but when they have a manipulable model before them, they utter a few words, point, sketch, nod, or object.

Because the dynamics of such communication are centered on the model, an important conclusion stands out: perhaps the reason present-day two-way telecommunication falls so far short of face-to-face communication is simply that it fails to provide facilities for externalizing models. Is it really seeing the expression in the other person's eyes that makes a face-to-face meeting so much more productive than a telephone conference, or is it being able to create and modify external models?

Project meeting as a model

In a technical project meeting, one can see clearly the modeling process that we regard as constituting communication. Almost every reader can recall a meeting held during the development phase of a project. Each member brings to such a meeting a different mental model of the common undertaking: its purposes, goals, plans, progress, and status. Each model interrelates (1) the member himself, (2) the group he represents, (3) his boss, and (4) the past, present, and future states of the project.

Much of the raw data that participants bring to the meeting is undigested and irrelevant. Each participant finds the data he has collected himself interesting and important. These data are not merely factual, repeatedly reported documentation; they are strongly colored by insight, subjective feeling, and educated guesses. Each person's data are thus reflected in his mental model. Getting his colleagues to incorporate his data into their models is the essence of the communication task.

Suppose you could see the models in the minds of two would-be communicators at this meeting. By observing those models, you could tell whether communication was taking place. If, at the outset, their two models were structurally similar and differed only in the values of some parameters, communication would cause them to converge toward a common pattern. That is the easiest and most frequent kind of communication.

If the two mental models were structurally different, successful communication would show up as structural changes in one model or both. We might conclude that one of the communicators had gained insight, or was experimenting with new hypotheses in order to begin to understand the other, or that both were reorganizing their mental models to reach commonality.

The meeting of many interacting minds is a more complicated process. Suggestions and opinions come from every quarter. The interaction produces not only a solution to a problem but also a new set of rules for solving the problem. That, of course, is the essence of creative interaction. The process of maintaining a current model includes a constantly changing set of rules for processing and disposing of information.

The project meeting we have just described represents a broad range of human endeavors that might be called creative informational activities. Let us distinguish them from another class, which we call informational housekeeping. The latter are the principal use of computers today: they process payrolls, keep track of bank balances, calculate the orbits of spacecraft, control repetitive machine processes, and maintain various lists of loans. For the most part, computers have not been used to build coherent pictures of situations that are not well understood.

Earlier we mentioned a meeting in which the participants communicated through a computer. That meeting was organized by Doug Engelbart of Stanford Research Institute and was actually a progress review for a specific project. The subject matter was so detailed and wide-ranging that none of the participants, not even the moderator, knew everything about the project.

Face to face via computer

In the meeting room, desks were arranged to form a square work area with five desks on each side. At the center of the area were six television monitors that displayed the alphanumeric output of a computer located elsewhere in the building, controlled remotely from a keyboard and a set of electronic pointer controllers called "mice." Any participant could move a nearby mouse to control a tracking pointer on the TV screens for all the other participants to see.

Each member of the project had prepared a topic outline for his presentation at the meeting, and as he spoke, his outline appeared on the screens, providing a broad view of his own model. Many statements in the outlines contained the names of specific reference files, which the speaker could call up from the computer and display in detail on the screens, because the participants had been putting their work into the computer system's files since the beginning of the project.

So this meeting, like any other, had a full agenda, and each speaker brought the material he was going to talk about (figuratively in a briefcase, but actually in the computer).

The computer system was a great help in exploring the depth and breadth of the material. When facts had to be established, more detailed information could be displayed; when questions of relevance and interrelationship arose, more global information could be displayed. A future version of the system will let each participant look through the speaker's files on his own TV screen and check incidental questions without interrupting the presentation.

Obviously, the collection of raw data can become too large to digest. At some point, the complexity of the communication process will outweigh the resources and coping capabilities available; at this point, we must simplify and draw conclusions.

It is frightening how early and how drastically one simplifies the problem and how prematurely one draws conclusions, even when the stakes are high and the transmission facilities and information resources are extraordinarily rich. Deep modeling to communicate, that is, to understand, requires a huge investment. Perhaps even governments cannot afford it yet.

But someday governments may not be able to afford not to. For while we have been discussing communication as a cooperative modeling effort in a mutual environment, there is also the aspect of communicating with an uncooperative adversary. Reports on recent international crises indicate that at each decision point, or "move" in the "game," only a few of the hundreds of alternatives facing each level of decision making could be considered, on average, and never more than a few dozen. Only a few branches could be explored two or three levels deep before action had to be taken. Each side was busy simulating what the other might do, but simulation takes time, and the pressure of events forces simplification even when it is dangerous.
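A rough count shows why only a few branches can be followed. The sketch below assumes, for illustration only, a uniform decision tree with 100 alternatives at every level (the text says only "hundreds"), a look-ahead of 3 levels, and a decision maker who follows 3 branches per level; the function name and figures are hypothetical.

```python
def paths(alternatives_per_level: int, depth: int) -> int:
    """Count the distinct paths through a uniform decision tree."""
    return alternatives_per_level ** depth


# Illustrative figures only, not taken from the crisis reports.
ALTERNATIVES = 100  # alternatives available at each decision level
DEPTH = 3           # levels looked ahead before acting
FOLLOWED = 3        # branches actually explored at each level under time pressure

full = paths(ALTERNATIVES, DEPTH)
explored = paths(FOLLOWED, DEPTH)
print(f"full tree: {full:,} paths")
print(f"explored:  {explored} paths ({explored / full:.4%} of the tree)")
```

Even with these modest assumptions, the explored paths are a vanishingly small fraction of the tree, which is the pressure toward simplification the paragraph describes.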

Whether we are trying to communicate across differing interest groups or to cooperate, we need to be able to model faster and more deeply. Improving decision-making processes, not only in government but throughout business and the professions, is so important that it warrants every effort.

The computer: switch or interactor?

In our view, group decision making is simply the active, executive, effect-producing aspect of the kind of communication we are discussing. We have said that one must oversimplify, and we have tried to explain why. But we should not oversimplify the main point of this article. We can say with genuine, firm conviction that a particular form of digital computer organization, with its programs and its data, constitutes the dynamic, malleable medium that can revolutionize the art of modeling and, in so doing, improve the effectiveness of communication among people so much as perhaps to revolutionize that as well.

But we must immediately qualify this statement: the computer alone can make no contribution that will help us, and the computer with the programs and data it has today can do little more than point a direction and provide a few preliminary examples. We want to stress that we are not saying, "Buy a computer and your communication problems will be solved."

What we are saying is that we, along with many colleagues who have worked online and interactively with computers, have already felt more responsiveness, convenience, and "power" than we had expected, given the inadequacy of present machines and the primitiveness of their software. As a result, many of us are confident (some to the point of religious zeal) that truly significant achievements, which will markedly improve our effectiveness in communication, are on the horizon.

Many communications engineers are also excited about the application of digital computers to communication. However, the function they want the computer to perform is the switching function. The computer either switches the communication lines, connecting them together in the required configurations, or switches messages (the technical term is "store and forward").

The switching function is important, but it is not the one we have in mind when we say that the computer can revolutionize communication. We are stressing the modeling function, not the switching function. Until now, the communications engineer has not felt it to be within his province to facilitate modeling, that is, to build an interactive, cooperative modeling facility. Information transmission and information processing have always been carried out separately and have become separately institutionalized. There are powerful intellectual and social benefits to be realized by fusing the two technologies. There are also, however, strong legal and administrative obstacles in the way of any such merger.

Distributed intellectual resources

We have already seen the beginnings of communication through a computer: communication among people at consoles in the same room, on the same university campus, or even in distantly separated laboratories of the same research and development organization. This kind of communication, through a single multi-access computer with the aid of telephone lines, is beginning to foster cooperation and promote coherence more effectively than the present practice of sharing computer programs by exchanging tapes by messenger or mail. Computer programs are very important because they go beyond mere "data": they include procedures and processes for structuring and manipulating data. These are the main resources we can now pool and share with the tools and techniques of computers and communication, but they are only part of the whole that we can learn to pool and share. The whole includes raw data, digested data, data about the location of data and documents, and most especially models.

To appreciate the importance of the new computer-aided communication, we must consider the dynamics of "critical mass" as it applies to cooperation in creative endeavors. Take any problem worthy of the name, and you will find only a few people who can contribute effectively to its solution. Those people must be brought into close intellectual partnership so that their ideas can come into contact with one another. But bring them together physically in one place to form a team, and you have trouble, because the most creative people are often not the best team players, and there are not enough top positions in a single organization to keep them all happy. Let them go their separate ways, and each builds his own empire, large or small, and devotes more time to the role of leader than to the role of problem solver. These leaders still get together at meetings. They still visit one another. But the time scale of their communication stretches out, and the correlation among their mental models degenerates between meetings, so that it may take a year to do a week's communicating. There has to be some way of facilitating communication among people without bringing them together in one place.

A single multi-access computer would suffice if cost were no object, but with one computer and individual communication lines to several geographically separated consoles, there is no way to avoid paying an unnecessarily large bill for transmission. Part of the economic difficulty lies in our present communications system. When a computer is used interactively from a typewriter console, the signals transmitted between the console and the computer are intermittent and infrequent. They do not require continuous access to a telephone channel, and much of the time they do not even require the full information rate of such a channel. The difficulty is that the common carriers do not offer the kind of service one would like: a service that gives ready access to a channel for short intervals and makes no charge when the channel is not in use.

It seems likely that a store-and-forward message service (one that stores each message for only a moment and forwards it at once) would be best suited to this purpose, whereas the common carriers offer services that give the user a channel for his exclusive use for no less than a minute.
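The billing mismatch described in the two paragraphs above can be made concrete with a small sketch. Every figure here is a hypothetical assumption (a channel price of 50 cents a minute, 120 exchanges of 2 seconds each in a one-hour session); only the one-minute minimum comes from the text. The point is the ratio between the three billing models, not the dollar amounts.

```python
from dataclasses import dataclass

RATE_PER_MINUTE = 0.50  # hypothetical channel price, dollars per minute


@dataclass
class Session:
    exchanges: int               # short console-computer exchanges in the session
    seconds_per_exchange: float  # channel time each exchange actually needs
    hours: float                 # wall-clock length of the session


def dedicated_line(s: Session) -> float:
    """Hold one channel for the whole session, paying for idle time too."""
    return s.hours * 60 * RATE_PER_MINUTE


def per_call_minimum(s: Session, minimum_minutes: float = 1.0) -> float:
    """Dial a fresh channel for each exchange, each billed for at least a minute."""
    billed_minutes = max(s.seconds_per_exchange / 60, minimum_minutes)
    return s.exchanges * billed_minutes * RATE_PER_MINUTE


def pay_per_use(s: Session) -> float:
    """Store-and-forward style: pay only for the channel time actually used."""
    return s.exchanges * s.seconds_per_exchange / 60 * RATE_PER_MINUTE


session = Session(exchanges=120, seconds_per_exchange=2.0, hours=1.0)
for label, cost in [
    ("dedicated line", dedicated_line(session)),
    ("one-minute minimum", per_call_minimum(session)),
    ("pay per use", pay_per_use(session)),
]:
    print(f"{label:>18}: ${cost:6.2f}")
```

Under these assumptions, intermittent traffic is cheapest when billed only for the seconds it uses, and most expensive when every short exchange is rounded up to a full minute.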

The problem is compounded by the fact that interacting with a computer through a fast, flexible graphic display, which is in most respects far superior to a slow-printing typewriter, requires a considerably higher information rate: not necessarily more information, but the same amount of information delivered faster.

It is perhaps not surprising that there are incompatibilities between the requirements of computer systems and the services supplied by the common carriers, since most common-carrier services were developed to support voice rather than digital communication. Nonetheless, the incompatibilities are frustrating. It appears that the best and quickest way to overcome these obstacles, and to advance the development of interactive communities of geographically separated people, is to build an experimental network of multi-access computers. The computers would concentrate and interleave the concurrent, intermittent messages of many users and their programs so as to use wideband transmission channels continuously and efficiently, thereby markedly reducing overall cost.
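The gain from concentrating and interleaving intermittent traffic can be seen in a toy simulation. The traffic model (each of 100 users independently has one unit to send in a given time slot with probability 0.05) and the shared capacity of 6 units per slot are assumptions chosen for illustration, and the function name `multiplex` is hypothetical. The sketch compares one dedicated line per user with a single shared channel fed by a queue.

```python
import random


def multiplex(users: int, slots: int, p_send: float, shared_capacity: int,
              seed: int = 0) -> tuple[float, float, int]:
    """Compare dedicated lines with one shared, interleaved channel.

    Assumed traffic model: in each time slot, every user independently has one
    unit of data to send with probability p_send. With dedicated lines, each
    user has a private line carrying at most one unit per slot. With sharing,
    a concentrating computer queues all units and sends up to shared_capacity
    of them per slot over one wideband channel.
    """
    rng = random.Random(seed)
    offered = carried = backlog = 0
    for _ in range(slots):
        arrivals = sum(1 for _ in range(users) if rng.random() < p_send)
        offered += arrivals
        backlog += arrivals                      # queue at the concentrator
        sent = min(backlog, shared_capacity)     # interleave onto the shared channel
        backlog -= sent
        carried += sent
    dedicated_utilization = offered / (users * slots)
    shared_utilization = carried / (shared_capacity * slots)
    return dedicated_utilization, shared_utilization, backlog


dedicated, shared, leftover = multiplex(users=100, slots=10_000, p_send=0.05,
                                        shared_capacity=6)
print(f"100 dedicated lines, each busy {dedicated:.1%} of the time")
print(f"1 shared channel (6 units/slot), busy {shared:.1%}; queue at end: {leftover}")
```

With these assumptions each private line sits idle about 95 percent of the time, while six units of shared capacity replace a hundred lines and stay busy most of the time; the price is a small queueing delay.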

Computer and information networks

The concept of computers connected to computers is not new. Computer manufacturers have successfully installed and maintained interconnected computers for many years. But in most cases these computers belong to a single family of machines that are compatible in both hardware and software, and they are all in the same location. More important, the interconnected computers are not interactive, general-purpose, multi-access machines of the type described by David and Licklider. Although more interactive multi-access computer systems are now being delivered, and more groups plan to use such systems within the next year, there are at present perhaps only half a dozen interactive multi-access computer communities.

These communities are sociotechnical pioneers, in several ways ahead of the rest of the computing world. What makes them so? First, some of their members are computer scientists and engineers who understand the concept of human-computer interaction and the technology of interactive multi-access systems. Second, others of their members are creative people in other fields and disciplines who recognize the usefulness of interactive multi-access computing and sense its impact on their work. Third, the communities have large multi-access computers and have learned to use them. Fourth, their efforts are regenerative.

In these half-dozen communities, research and development on computer systems and the development of substantive applications support each other. They are producing a large and growing store of programs, data, and know-how. But we have seen only the beginning. Much more programming and data collection, and much more learning how to cooperate, remain to be done before the full potential of the concept is realized.

Obviously, multi-access systems must be developed interactively. The systems being built must remain flexible and open-ended throughout development, which is an evolutionary, incremental process.

Such systems cannot be developed in small ways on small machines. They require large multi-access computers, which are necessarily complex. Indeed, the sound barrier in the development of such systems is complexity.

The new computer systems we are describing differ from other computer systems that bear the same labels: interactive, time-sharing, multi-access. They differ in having a greater degree of open-endedness, in rendering more services, and above all in providing facilities that foster a working sense of community among their users. Commercially available time-sharing services do not yet offer the power and flexibility of software resources found in the research systems we are describing, which together have been serving about a thousand people for several years.

Among those thousand are many of the leaders of the ongoing computer revolution. For more than a year they have been preparing for the transition to a completely new organization of hardware and software, designed to support many more simultaneous users than the current systems and to offer them, through new languages, new file-handling systems, and new graphic displays, the fast, smooth interaction required for truly effective human-computer partnership.

Experience has shown the importance of making the response time short and the conversation free and easy. We believe these attributes will be almost as important for a network of computers as for a single computer.

Today the online communities are separated from one another functionally as well as geographically. Each member can look only to the processing, storage, and software capabilities of the facility on which his community is centered. But now the move is on to interconnect the separate communities and thereby transform them into what we will call a supercommunity. The hope is that interconnection will make the program and data resources of the entire supercommunity available to all members of all the communities. First, let us indicate how these communities can be interconnected; then we shall describe one hypothetical person's interaction with this network of interconnected computers.

Information processing

The hardware of a multi-access computer system consists of one or more central processors, several kinds of memory (core, disks, drums, and tapes), and many consoles for simultaneous users. Different users can work on different tasks at the same time. The software of such a system includes monitor programs (which control the whole operation); system programs for interpreting user commands, handling files, and displaying information graphically or alphanumerically (which let people who do not know machine language use the system effectively); and programs and data created by the users themselves. The collection of people, hardware, and software, that is, the multi-access computer together with its local community of users, will become a node in a geographically distributed computer network. Let us assume that such a network has already been formed.

Each node has a small general-purpose computer, which we call a "message processor." The message processors of all the nodes are interconnected to form a fast store-and-forward network. The large multi-access computer at each node is connected directly to the message processor there. Through the network of message processors, therefore, all the large computers can communicate with one another, and through them all members of the supercommunity can communicate with other people, with programs, with data, or with selected combinations of these resources. Because the message processors are all alike, they introduce an element of uniformity into an otherwise highly nonuniform situation: they promote hardware and software compatibility among diverse and otherwise incompatible computers. The links between the message processors are transmission and high-speed digital switching facilities provided by the common carriers, which allows the connections among message processors to be reconfigured as needed.
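A minimal sketch of a store-and-forward network of message processors follows. The four-node topology, the class and function names, and the choice of fewest-hop breadth-first routing are all assumptions for illustration; the text does not say how routes are chosen.

```python
from collections import deque
from typing import Optional


class MessageProcessor:
    """A small node computer that holds each message briefly, then passes it on."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.neighbors: list["MessageProcessor"] = []
        self.buffer: deque = deque()     # messages stored before forwarding
        self.delivered: list[str] = []   # messages handed to this node's host computer

    def link(self, other: "MessageProcessor") -> None:
        """Add a two-way transmission line between two message processors."""
        self.neighbors.append(other)
        other.neighbors.append(self)


def route(src: MessageProcessor, dst: MessageProcessor) -> list[MessageProcessor]:
    """Fewest-hop path found by breadth-first search (one simple routing choice)."""
    previous: dict[str, Optional[MessageProcessor]] = {src.name: None}
    frontier = deque([src])
    while frontier:
        node = frontier.popleft()
        if node is dst:
            break
        for nxt in node.neighbors:
            if nxt.name not in previous:
                previous[nxt.name] = node
                frontier.append(nxt)
    if dst.name not in previous:
        raise ValueError(f"no path from {src.name} to {dst.name}")
    path: list[MessageProcessor] = []
    current: Optional[MessageProcessor] = dst
    while current is not None:
        path.append(current)
        current = previous[current.name]
    return path[::-1]


def send(src: MessageProcessor, dst: MessageProcessor, message: str) -> list[str]:
    """Carry a message hop by hop; return the names of the processors it passed."""
    hops = route(src, dst)
    for node in hops:
        node.buffer.append(message)  # store for a moment ...
        node.buffer.popleft()        # ... then forward (here: simply release it)
    dst.delivered.append(message)
    return [node.name for node in hops]


# Hypothetical four-node network: lines A-B, B-C, C-D, and a direct A-C line.
a, b, c, d = (MessageProcessor(name) for name in "ABCD")
a.link(b)
b.link(c)
c.link(d)
a.link(c)
trace = send(a, d, "hello from A")
print(" -> ".join(trace))
```

Because every host talks only to its own message processor, the hosts never need to know the network's shape; if the common carrier's links are reconfigured, only the routing inside the message processors changes.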

A message can be thought of as a short sequence of "bits" that flows through the network from one multi-access computer to another. It contains two kinds of information: control and data. Control information directs the transfer of the data from source to destination. In present transmission systems, errors are too frequent for many computer applications. However, by using error detection and correction, or retransmission procedures, in the message processors, messages can be delivered to their destinations intact even if many of their "bits" are corrupted along the way. In short, the message processors act in the network as traffic directors, controllers, and correctors.
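The following sketch shows one way a message processor could separate control information from data and combine error detection with retransmission. It assumes a CRC-32 checksum (Python's `zlib.crc32`) and a channel that flips bits at random; the text mentions detection, correction, or retransmission without naming a method, so this is illustrative only and covers detection plus retransmission, not correction. The message fields and names are hypothetical.

```python
import random
import zlib
from dataclasses import dataclass


@dataclass
class Message:
    source: str       # control information: sending node
    destination: str  # control information: receiving node
    seq: int          # control information: sequence number
    data: bytes       # the data being carried

    def frame(self) -> bytes:
        """Serialize control + data and append a CRC-32 for error detection."""
        body = f"{self.source}|{self.destination}|{self.seq}|".encode() + self.data
        return body + zlib.crc32(body).to_bytes(4, "big")


def intact(frame: bytes) -> bool:
    """True if the trailing CRC-32 still matches the rest of the frame."""
    body, checksum = frame[:-4], frame[-4:]
    return zlib.crc32(body).to_bytes(4, "big") == checksum


def noisy_line(frame: bytes, rng: random.Random, bit_error_rate: float) -> bytes:
    """Assumed channel model: flip each bit independently with a fixed probability."""
    out = bytearray(frame)
    for i in range(len(out)):
        for bit in range(8):
            if rng.random() < bit_error_rate:
                out[i] ^= 1 << bit
    return bytes(out)


def deliver(msg: Message, rng: random.Random,
            bit_error_rate: float = 0.002, max_tries: int = 100) -> int:
    """Retransmit until a frame passes the check; return the number of attempts."""
    frame = msg.frame()
    for attempt in range(1, max_tries + 1):
        if intact(noisy_line(frame, rng, bit_error_rate)):
            return attempt
    raise RuntimeError("line too noisy: giving up")


rng = random.Random(42)
msg = Message("A", "D", 1, b"hello, network")
print("delivered intact after", deliver(msg, rng), "attempt(s)")
```

A CRC catches every single-bit error and almost all multi-bit ones, so a receiving processor can simply discard a damaged frame and wait for the sender to try again.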

Today, programs written for one manufacturer's computer are usually of little value to users of another manufacturer's computer. After learning (with difficulty) of the existence of a distant program, one has to obtain it, understand it, and recode it for one's own computer. The cost is comparable to that of writing a new program from scratch, which is in fact what most programmers usually do. Across the country, the annual cost is enormous. In an interactive, multi-access network of computer systems, on the other hand, a person at one node can use programs running at other nodes, even if those programs are written in different languages for different computers.

The feasibility of using programs at a remote location has been demonstrated by the successful linking of the AN/FSQ-32 computer at System Development Corporation (SDC) in Santa Monica, California, with the TX-2 computer at Lincoln Laboratory in Lexington, Massachusetts. A person at a TX-2 graphics console can use a list-processing program unique to SDC, a program that would be very expensive to reproduce on the TX-2. The Advanced Research Projects Agency of the Department of Defense and its contractors are now planning a network of 14 such computers, all of which will be able to share one another's resources.

The way a system handles data is crucial for the user who interacts with many people. Unless access is restricted, the system should place generally useful data in public files. Each user, however, should have full control over his personal files. He should define and distribute the "keys" to each such file, exercising his option to exclude all others from any kind of access to it; or to permit anyone to "read" it but not modify or execute it; or to permit selected individuals or groups to execute it but not read it; and so on, with as much detail or as much grouping as he likes. The system should also provide for group and organizational files within its overall repository.
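The file "keys" described above map naturally onto per-file rights. This is a hedged sketch, not a description of any particular system: the `Right` flags, the `PersonalFile` class, and the rule that only the owner may distribute keys are assumptions, and the user and group names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Flag, auto


class Right(Flag):
    NONE = 0
    READ = auto()
    MODIFY = auto()
    EXECUTE = auto()


@dataclass
class PersonalFile:
    owner: str
    public: Right = Right.NONE                            # rights granted to everyone
    keys: dict[str, Right] = field(default_factory=dict)  # user or group -> rights

    def grant(self, granted_by: str, holder: str, rights: Right) -> None:
        """Only the owner defines and distributes keys in this sketch."""
        if granted_by != self.owner:
            raise PermissionError("only the owner may distribute keys")
        self.keys[holder] = rights

    def allows(self, user: str, groups: set[str], wanted: Right) -> bool:
        """Combine public rights with any keys held by the user or the user's groups."""
        if user == self.owner:
            return True
        rights = self.public | self.keys.get(user, Right.NONE)
        for group in groups:
            rights |= self.keys.get(group, Right.NONE)
        return wanted in rights


# Hypothetical users and group.
notes = PersonalFile(owner="alice", public=Right.READ)  # anyone may read, not modify
solver = PersonalFile(owner="alice")                    # closed to all by default
solver.grant("alice", "design-group", Right.EXECUTE)    # group may run it, not read it

print(notes.allows("bob", set(), Right.READ))                # True
print(solver.allows("carol", {"design-group"}, Right.READ))  # False
```

Keeping rights as combinable flags lets the owner grant as fine or as coarse a mix as he likes, which is the flexibility the paragraph asks for.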

At least one of the new multi-access systems will exhibit such features. The security and privacy of information are matters of great concern at several of the research centers we have mentioned.

In a multi-access system, the number of consoles allowed to use the computer at the same time depends on the load that the users' jobs impose on it, and it can vary automatically as the load changes. Large general-purpose multi-access systems in operation today can typically support 20 to 30 simultaneous users. Some of these users may work in a low-level "assembly" language while others use a higher-level "compiler" or "interpreter" language. Meanwhile, still others may be using data management and graphics systems, and so on.
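One simple policy for letting the number of consoles vary with load is to admit users in arrival order while their combined load fits the machine's capacity. The load units, the capacity of 100, and the per-job loads below are hypothetical, and a real monitor would likely use a more elaborate scheme.

```python
def admissible_consoles(job_loads: list[int], capacity: int) -> int:
    """Admit queued users in arrival order while their summed load fits the capacity."""
    total = 0
    admitted = 0
    for load in job_loads:
        if total + load > capacity:
            break
        total += load
        admitted += 1
    return admitted


CAPACITY = 100  # hypothetical load units the computer can carry at once

light = [4] * 40   # e.g. editing or short interpreted commands (assumed load 4 each)
heavy = [20] * 40  # e.g. long compilations (assumed load 20 each)
mixed = [4] * 10 + [20] * 2 + [4] * 30

for name, queue in [("light", light), ("heavy", heavy), ("mixed", mixed)]:
    print(f"{name:>5} load: {admissible_consoles(queue, CAPACITY)} consoles admitted")
```

The same machine admits many consoles when jobs are light and few when they are heavy, so the number of simultaneous users follows the load rather than being fixed.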

But back to our hypothetical user. He sits at a console, which may be a keyboard with a relatively slow printer, a sophisticated graphics console, or any of several intermediate devices. He dials his local computer and "logs in" by presenting his name, problem number, and password to the monitor program. He calls for a public program, one of his own programs, or a colleague's program that he has permission to use. The monitor program links him to it, and he then communicates with that program.
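The log-in sequence can be sketched as a toy monitor program that checks a name, problem number, and password, then links the user only to programs that are public, his own, or shared with him. The class names, the use of salted PBKDF2 password hashing (from Python's `hashlib`), and the sample users and programs are assumptions for illustration, not part of the original description.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass, field


def _digest(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


@dataclass
class Account:
    problem_number: str
    salt: bytes
    password_digest: bytes


@dataclass
class Monitor:
    public_programs: set[str] = field(default_factory=set)
    owners: dict[str, str] = field(default_factory=dict)            # program -> owner
    shared_with: dict[str, set[str]] = field(default_factory=dict)  # program -> colleagues
    accounts: dict[str, Account] = field(default_factory=dict)
    logged_in: set[str] = field(default_factory=set)

    def register(self, name: str, problem_number: str, password: str) -> None:
        salt = os.urandom(16)
        self.accounts[name] = Account(problem_number, salt, _digest(password, salt))

    def log_in(self, name: str, problem_number: str, password: str) -> bool:
        """Check name, problem number, and password before admitting the user."""
        account = self.accounts.get(name)
        if account is None or account.problem_number != problem_number:
            return False
        if not hmac.compare_digest(account.password_digest,
                                   _digest(password, account.salt)):
            return False
        self.logged_in.add(name)
        return True

    def link(self, user: str, program: str) -> str:
        """Connect a logged-in user to a public, owned, or shared program."""
        if user not in self.logged_in:
            raise PermissionError("log in first")
        permitted = (
            program in self.public_programs
            or self.owners.get(program) == user
            or user in self.shared_with.get(program, set())
        )
        if not permitted:
            raise PermissionError(f"{user} may not use {program}")
        return f"{user} linked to {program}"


# Hypothetical users, problem numbers, and programs.
monitor = Monitor(public_programs={"editor"})
monitor.owners["traffic-model"] = "smith"
monitor.shared_with["traffic-model"] = {"jones"}
monitor.register("jones", "P1234", "open-sesame")
if monitor.log_in("jones", "P1234", "open-sesame"):
    print(monitor.link("jones", "editor"))
    print(monitor.link("jones", "traffic-model"))
```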

When a user (or a program) needs a service from a program at another node in the network, he (or it) requests the service by specifying the location of the appropriate computer and the identity of the desired program. If necessary, he consults a computerized catalog to determine these data. One or more message processors translate the request into the exact language required by the remote computer's monitor program. The user (or his local program) and the remote program can then exchange information. When the information transfer is complete, the user (or his local program) releases the remote computer, again with the help of the message processors. In a commercial system, the remote processor would record cost information at this point for billing purposes.
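A request for a remote service, as described above, can be sketched in four steps: consult a catalog, phrase the request in the remote monitor's format, exchange information, and release the remote computer while recording cost. The catalog entry, request format, program name, and billing rate below are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical catalog: service -> (node, program identity at that node).
CATALOG = {"list-processing": ("node-west", "LISTPROC")}

# Hypothetical request formats expected by each remote monitor program.
REMOTE_FORMATS = {"node-west": "RUN {program} FOR {user}"}


@dataclass
class RemoteSession:
    node: str
    program: str
    user: str
    seconds_used: float = 0.0
    transcript: list[str] = field(default_factory=list)

    def exchange(self, text: str, seconds: float) -> None:
        """Send one piece of information to the remote program and log the time."""
        self.transcript.append(text)
        self.seconds_used += seconds


def request_service(user: str, service: str) -> tuple[RemoteSession, str]:
    """Look up the service, then phrase the request the way the remote monitor expects."""
    node, program = CATALOG[service]
    request = REMOTE_FORMATS[node].format(program=program, user=user)
    return RemoteSession(node, program, user), request


def release(session: RemoteSession, dollars_per_second: float) -> dict:
    """Dismiss the remote computer; the remote side records cost for billing."""
    return {
        "user": session.user,
        "node": session.node,
        "program": session.program,
        "charge": round(session.seconds_used * dollars_per_second, 2),
    }


session, request = request_service("jones", "list-processing")
print(request)
session.exchange("first list expression", 0.5)
session.exchange("second list expression", 1.5)
print(release(session, dollars_per_second=0.01))
```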

Who can afford it?

The mention of billing raises an important question. Computers and long-distance calls are "expensive." A standard reaction to the idea of an "online community" is: "It sounds great, but who can afford it?"

In considering that question, let us do a little arithmetic. Apart from the salaries of the communicators, the main elements of the cost of computer-aided communication are the costs of consoles, processing, storage, transmission, and supporting software. Within each category there is a wide range of possible costs, depending partly on the sophistication of the equipment, programs, or services used and partly on whether they are custom-made or mass-produced.

Making rough estimates of the hourly cost per user of each component, we arrive at the following: $1 for the console, $5 for the user's share of a processor, 70 cents for storage, $3 for transmission over lines leased from a common carrier, and $1 for software support, for a total of less than $11 per hour per communicator.
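The estimate above is a simple sum; the short script below reproduces it from the figures given in the text. Only the dictionary keys are ours.

```python
# Hourly cost per user, in dollars, as estimated in the text
# (console and personal files assumed to be used 160 hours per month).
HOURLY_COST = {
    "console": 1.00,
    "processing (shared)": 5.00,
    "storage": 0.70,
    "transmission (leased lines)": 3.00,
    "software support": 1.00,
}

for item, cost in HOURLY_COST.items():
    print(f"{item:<28} ${cost:5.2f}")
total = sum(HOURLY_COST.values())
print(f"{'total':<28} ${total:5.2f}")
```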

In our opinion, the only assumption behind this result that seems untenable is that one's console and personal files will be used 160 hours per month. All the other items are shared with others, and experience has shown that time-sharing systems are, on average, used more heavily than our assumed 160 hours per month. Note, however, that the console and personal files also serve individual problem solving and decision making. There is no doubt that these activities, together with communication, will take up at least 25 percent of the working time of an online executive, scientist, or engineer. If we reduce console and file use to a quarter of 160 hours per month, the estimated total cost rises to $16 per hour.
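The $16 figure follows if the console and personal-file costs are spread over a quarter of the hours. The sketch below assumes that "personal files" corresponds to the storage item and that only the console and storage are dedicated to one person; with those assumptions it gives $15.80, which the text rounds to $16.

```python
HOURLY_COST_AT_160_HOURS = {
    "console": 1.00,
    "processing": 5.00,
    "storage": 0.70,
    "transmission": 3.00,
    "software support": 1.00,
}
# Assumption: "personal files" is the storage item, and only the console and
# storage are dedicated to one person; the other items are shared.
DEDICATED = {"console", "storage"}


def hourly_cost(fraction_of_160_hours: float) -> float:
    """Cost per hour of use when dedicated items sit idle the rest of the month."""
    return sum(
        cost / fraction_of_160_hours if item in DEDICATED else cost
        for item, cost in HOURLY_COST_AT_160_HOURS.items()
    )


print(f"160 hours/month: ${hourly_cost(1.0):.2f} per hour")
print(f" 40 hours/month: ${hourly_cost(0.25):.2f} per hour")
```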

Let us assume that our $16-per-hour interactive computer link connects Boston, Massachusetts, and Washington, D.C. Is $16 an hour affordable? Compare it first with the cost of ordinary telephone communication: it works out to under 27 cents a minute, which is less than the daytime rate for a direct-dialed long-distance call. Then compare it with the cost of travel. If a person flies from Boston to Washington in the morning and returns in the evening, he can work eight hours in the capital; the round trip costs about $64 for airfare and taxis, plus four hours of early-morning and evening travel. If those four hours are worth $16 an hour, the eight hours in Washington cost $128, which is also $16 an hour. Or put it another way: if computer-aided communication can double the effectiveness of a person whose time is worth $16 an hour, then by our estimates it would pay for itself even at today's prices. We therefore have reason to believe that computer-aided communication is economically feasible. But we must admit that $16 an hour sounds high, and we do not want our argument to depend on it.
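The travel comparison is easy to check. The figures are those given in the text; the variable names are ours.

```python
# Figures from the text for a Boston to Washington day trip.
AIRFARE_AND_TAXIS = 64.0  # dollars, round trip
TRAVEL_HOURS = 4          # early-morning and evening travel
WORK_HOURS = 8            # hours of work in Washington
VALUE_OF_TIME = 16.0      # dollars per hour, the same rate as the computer link

trip_cost = AIRFARE_AND_TAXIS + TRAVEL_HOURS * VALUE_OF_TIME
cost_per_working_hour = trip_cost / WORK_HOURS
print(f"trip: ${trip_cost:.0f}; per working hour: ${cost_per_working_hour:.0f}")
```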

Fortunately, we do not need to, because the system we envision is not yet available, and the time scale provides the basis for a genuinely optimistic picture of costs. It will take at least two years to bring the first interactive computer network up to a significant level of experimental activity. Operational systems could reach critical mass in as little as six years if everyone joined in, but estimating costs for an earlier date makes little sense. So let us aim at six years from now.

In computing, the cost of a unit of processing and of a unit of storage has fallen by 50% or more every two years for two decades. Six years leaves time for at least three such halvings, which would reduce a dollar to about 12 cents. Three halvings would bring processing costs, now $5 an hour under our assumptions, down to less than 65 cents an hour.
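The halving argument can be checked the same way. This sketch assumes exactly one 50% reduction per full two-year period; since the text says "50% or more", the result is an upper bound on the future cost. The function name and its default parameter are our own.

```python
def cost_after_halvings(initial_cost: float, years: float,
                        halving_period_years: float = 2.0) -> float:
    """Cost after `years`, assuming it halves once per full period (hypothetical model)."""
    halvings = int(years // halving_period_years)  # 6 years / 2 = 3 halvings
    return initial_cost / 2 ** halvings


print(f"${cost_after_halvings(1.0, 6):.3f}")  # $0.125, the "about 12 cents" per dollar
print(f"${cost_after_halvings(5.0, 6):.3f}")  # $0.625 per hour, under 65 cents
```

Counting only full periods (the `//` division) keeps the estimate conservative: five years would give two halvings, not two and a half.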

Such advances in capability, together with falling costs, lead us to expect that computer facilities will be affordable before many people are ready to take advantage of them. Our only worries are consoles and transmission.

In the console field, competition is fierce: many companies have entered it, and more enter every month. Lack of competition is not the problem. The problem is a chicken-and-egg problem, at the factory and in the market. If a few companies were willing to go into mass production, the cost of a satisfactory console could drop far enough to open up a mass market. If large online communities already existed, their mass market would attract mass production. But there is neither mass production nor a mass market, and so there is no low-cost console suitable for interactive online communication.

In the field of transmission, the difficulty may be a lack of competition. In any case, transmission costs are not falling as fast as processing and storage costs, nor as fast as we think they should. Even the advent of satellites has cut them by less than a factor of two. This is not immediately distressing, because (except over long distances) transmission is not now the dominant cost. At the current rate, however, it will become a major cost within six years. That prospect concerns us greatly, and it is the biggest obstacle to the near-term realization of an operationally significant interactive network and a significant online community.

Online Interactive Communities

But let's be optimistic. What will online interactive communities be like? In most fields, they will consist of geographically separated members, sometimes grouped in small clusters and sometimes working individually. They will be communities not of common location but of common interest. In each field, the overall community of interest will be large enough to support a comprehensive system of field-oriented programs and data.

In each geographic region, the total number of users, summed over all fields of interest, will be large enough to support extensive general-purpose information processing and storage facilities. All of these will be interconnected by telecommunication channels. The whole will constitute a labile network whose content and configuration are constantly changing.

What will go on inside? Eventually, every information transaction important enough to warrant the cost will take place there. Every secretary's typewriter, every data-gathering instrument, and conceivably every dictation microphone will feed into the network.

You will not send a letter or a telegram; you will simply identify the people whose files should be linked to yours, and the parts to which they should be linked, and perhaps specify a coefficient of urgency. You will seldom make a telephone call; you will ask the network to link your consoles together.
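The idea of linking files instead of mailing letters can be pictured as a small data structure. The sketch below is entirely hypothetical: the `Link` record, its field names, and the `LinkInbox` class are our own illustration, and the "coefficient of urgency" is modeled as a number that orders delivery through a priority queue (Python's `heapq`).

```python
from __future__ import annotations

import heapq
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Hypothetical record: 'link this part of my file to yours'."""
    sender: str
    recipient: str
    document: str
    section: str
    urgency: float  # hypothetical coefficient of urgency; higher means sooner


class LinkInbox:
    """Delivers links most-urgent first, first-come first-served among equals."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, Link]] = []
        self._counter = itertools.count()  # tie-breaker that preserves arrival order

    def receive(self, link: Link) -> None:
        # heapq is a min-heap, so negate urgency to pop the most urgent link first.
        heapq.heappush(self._heap, (-link.urgency, next(self._counter), link))

    def next_link(self) -> Link | None:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


inbox = LinkInbox()
inbox.receive(Link("alice", "you", "budget-draft", "section 2", urgency=0.2))
inbox.receive(Link("bob", "you", "meeting-notes", "agenda", urgency=0.9))
print(inbox.next_link().sender)  # bob
```

The arrival counter in each heap entry means two links with the same urgency are never compared directly and are delivered in the order they arrived.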

You will seldom make a purely business trip, because linking consoles will be so much more efficient. When you do visit another person for intellectual exchange, you and he will sit at a two-seat console and interact as much through it as face to face. If our extrapolation from Doug Engelbart's meeting proves correct, you will spend much more time in computer-assisted remote meetings and much less time traveling to meetings.

A very important part of each person's interaction with his online community will be mediated by his OLIVER. The acronym honors Oliver Selfridge, the artificial-intelligence pioneer who originated the concept. An OLIVER is, or will be, an "On-Line Interactive Vicarious Expediter and Responder": a complex of computer programs and data that resides within the network, acts on behalf of its principal, takes care of many minor matters that do not require his personal attention, and buffers him from the demanding world. "You are describing a secretary," you will say. But no! Secretaries will have OLIVERs.

At your command, your OLIVER will record what you did, what you read, what you bought, and where you bought it. It will know who your friends are and who your acquaintances are. It will know your value structure, whom you regard as prestigious, what you will do for whom and with what priority, and who may access which of your personal files. It will know your organization's rules about proprietary information and your government's rules about security classification.

Some parts of your OLIVER program will be common to other people's OLIVERs; other parts will be custom-made for you, either by you yourself or through "learning" from its experience in your service.

Available in the network will be functions and services to which you subscribe on a regular basis, and others that you call on when you need them. The former will include investment guidance, tax advice, selective dissemination of information in your professional field, announcements of cultural, sports, and recreational events, and so on. The latter will include dictionaries, encyclopedias, indexes, directories, editing programs, teaching programs, testing programs, programming systems, databases, and, most important, communication, display, and modeling programs.

All of this will be, at some later point in the history of the network, systematized and coherent; you will be able to get along in one basic language until you choose a specialized language for its power or terseness.

When people do their informational work "at the console" and "through the network," telecommunication will become as natural an extension of individual work as face-to-face communication is now. The impact of that fact, and of the marked facilitation of the communication process, will be enormous, both for individuals and for society.

First, life will be happier for the online individual, because the people with whom one interacts most closely will be selected more by common interests and goals than by accidents of proximity. Second, communication will be more effective and therefore more enjoyable. Third, much communication and interaction will be with programs and programmed models that will be (a) highly responsive, (b) supplementary to one's own capabilities rather than competitive with them, and (c) capable of presenting progressively more complex ideas without displaying all the levels of their structure at the same time, so they will be both challenging and rewarding. Fourth, everyone (who can afford a console) will have ample opportunity to find his calling, for the whole world of information, with all its fields and disciplines, will be open to him, with programs ready to guide him or to help him explore.

For society, whether the impact is good or bad depends mainly on one question: will "being online" be a privilege or a right? If only a favored segment of the population gets the chance to enjoy the benefits of "intelligence amplification," the network may widen the gap in intellectual opportunity.

On the other hand, if the network idea should prove to do for education what a few have envisioned in hope, if not in concrete detailed plans, and if all minds should prove responsive, then surely the benefit to humanity would be beyond measure.

Unemployment would disappear from the face of the earth forever, for consider the magnitude of the task of adapting the network's software to all the new generations of computers, coming closer and closer upon the heels of their predecessors, until the entire population of the world is caught up in an infinite crescendo of online interactive debugging.
