How to tell the difference: bots, botnets and trolls

- Accurate identification is the basis for solving problems

Over the past few years, words like “bots,” “botnets” and “trolls” have appeared frequently in mainstream conversations about social networks and their impact on democracies.

See also:

- "The Rise of Trolls: Government-sponsored trolls are spreading around the world"

- "Deception: Diversification of Online Information Warfare Manipulation Strategies"

- "How to Spot Bot Accounts on Social Media? 12 Methods"

- "How to find out who is behind the robot trolls?"

- "Chasing the Inside Story of Government Propaganda Wars: A Digital Forensics Case Study of Cross-Platform Network Analysis"

However, malicious social media accounts are often mislabeled, which drags the discussion about them away from substance and back to definitions.

This article introduces DFRLab's working methods for identifying, exposing, and explaining false information online. Accurately identifying and defining these accounts is the first step toward effective solutions.

What is a bot?

Bots are automated social media accounts run by algorithms rather than real people. In other words, bots are designed to publish posts without human intervention.

For identification tips, see "How to Spot Bot Accounts on Social Media? 12 Methods".

If an account writes its own posts and comments on, replies to, or otherwise interacts with other users' posts, it cannot be classified as a bot.

Bots are mostly seen on Twitter and other social networks that allow users to create multiple accounts.

Is there a way to tell if an account *isn't* a bot?

The easiest way to check whether an account is not a bot is to look at the tweets it has written itself. Use a simple search operator in the Twitter search bar, replacing "handle" with the account's handle:

from:handle

If the tweets returned by the search are authentic (i.e. not copied from another user), it is extremely unlikely that the account is a bot.

What is the difference between trolls and bots?

Trolls are individuals who intentionally cause online conflict or offend other users by publishing inflammatory or off-topic posts in online communities or social networks with the goal of sparking divisions and tearing apart conversations.

Their method is to provoke emotional reactions in others and derail discussions.

Trolls are different from bots because trolls are real users, while bots are automated. The two types of accounts are mutually exclusive.

Trolling as an activity is not limited to trolls, however. DFRLab has observed trolls also using bots to amplify some of their messages.

For example, back in August 2017, DFRLab was targeted by troll accounts amplified by bots after it published articles about the Charlottesville protests. In this sense, bots can be, and have been, used for trolling.

What is a botnet?

A botnet is a network of bot accounts managed by the same person or group.

People who manage botnets, which require original human input before deployment, are called bot herders.

⚠️ Botnets operate as coordinated networks because they are designed to manufacture social media engagement, so that the topic a botnet is deployed on appears to draw more engagement from "real" users than it actually does.

The herd effect is very evident on social media platforms: engagement begets more engagement, so a successful botnet puts the topic it is deployed on in front of more real users.

What do botnets do?

The goal of a botnet is to make a hashtag, user, or keyword appear more talked about or popular than it really is. ⚠️ Because botnets exist, so-called trending topics and trending hashtags may not be credible.

Real people tend to chase whatever is trending. As a result, these bots lead them away from the issues that matter most.

The goal of these bots is to manipulate social media algorithms to influence trending sections, so they expose unsuspecting users to conversations that are deliberately amplified by the bots.

Botnets rarely target human users, and if they do, they are essentially spamming, or generally harassing the target person or group - *rather than* actively trying to change the target's opinions or political views.

How to identify a botnet?

In light of Twitter's bot removal capabilities and enhanced detection methods, bot herders have become more cautious and have been working to make individual bots harder to detect.

An alternative to identifying individual bots is to analyze the patterns of a large botnet to confirm that its individual accounts are bots.

DFRLab has identified six indicators that can help identify botnets. If you encounter a network of accounts that you suspect are part of a botnet, here are a few things to look for.

⚠️ When analyzing a botnet, remember that no single indicator is enough to conclude that a suspicious account is part of a botnet. Such a claim should be supported by at least three botnet indicators.
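The three-indicator rule can be written down as a simple checklist. The sketch below is a minimal illustration in Python, not a DFRLab tool: the `IndicatorFlags` class, its field names, and the helper functions are hypothetical names chosen for this example, and an analyst would still set each flag by hand after reviewing the accounts.

```python
from dataclasses import dataclass, fields

# Threshold taken from the rule above: at least three indicators.
MIN_INDICATORS = 3


@dataclass
class IndicatorFlags:
    """One yes/no flag per botnet indicator, filled in by the analyst."""
    speech_pattern: bool = False
    identical_posts: bool = False
    handle_pattern: bool = False
    creation_time: bool = False
    identical_activity: bool = False
    shared_location: bool = False


def count_indicators(flags):
    """Count how many indicators were observed for the suspected network."""
    return sum(bool(getattr(flags, f.name)) for f in fields(flags))


def supports_botnet_claim(flags):
    """True only when enough indicators are present at the same time."""
    return count_indicators(flags) >= MIN_INDICATORS
```

A network showing only identical posts and a shared handle pattern would not pass this check; adding a third indicator, such as clustered creation dates, would.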

1. Speech patterns

Bots run by algorithms are programmed to use the same language patterns.

If you come across multiple accounts using the exact same language pattern, such as posting a news article using the title as the text of a tweet, it's possible that these accounts are running on the same algorithm.

Ahead of the Malaysian election, DFRLab discovered 22,000 bots that all used exactly the same language pattern.

Each of these bots used two hashtags targeting the opposition coalition and tagged between 13 and 16 real users to draw them into the conversation.
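One way to compare language patterns across accounts is to reduce each tweet to a template, so that tweets differing only in who is tagged or which link is shared collapse to the same string. This is a rough sketch under assumptions: the input is a hypothetical list of (handle, text) pairs gathered by hand or exported from a tool, and `tweet_template` and `shared_templates` are illustrative names, not part of any library.

```python
import re
from collections import defaultdict

URL = re.compile(r"https?://\S+")
MENTION = re.compile(r"@\w+")
MENTION_RUN = re.compile(r"(?:<user>\s*)+")


def tweet_template(text):
    """Replace links and tagged users with placeholders and normalize spacing."""
    text = URL.sub("<url>", text)
    text = MENTION.sub("<user>", text)
    # Collapse a run of mentions so tagging 13 users or 16 looks the same.
    text = MENTION_RUN.sub("<users> ", text)
    return " ".join(text.lower().split())


def shared_templates(tweets, min_accounts=2):
    """Map each template to the accounts using it, keeping only shared ones."""
    accounts = defaultdict(set)
    for handle, text in tweets:
        accounts[tweet_template(text)].add(handle)
    return {t: h for t, h in accounts.items() if len(h) >= min_accounts}
```

A template shared by a handful of accounts proves little on its own, since real users also retweet headlines. It becomes interesting when hundreds or thousands of accounts map to the same template.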

2. Identical posts

Another botnet indicator is identical postings.

Since most bots are very simple computer programs, they are unable to produce original content. As a result, most bots publish identical posts.

A DFRLab analysis of a Twitter campaign urging the cancellation of an Islamophobic cartoon contest in the Netherlands found dozens of accounts posting the same tweet.

Although these accounts were all newly registered and had no past posts to refer to, their coordinated group behavior indicated that they were likely part of the same botnet.
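Checking for identical posts is easy to automate once the posts are collected. The sketch below assumes a hypothetical list of (handle, text) pairs and groups accounts by post text after ignoring case and extra whitespace. The function names and the `min_accounts` default are arbitrary choices for illustration.

```python
from collections import defaultdict


def normalize(text):
    """Ignore case and whitespace differences when comparing posts."""
    return " ".join(text.casefold().split())


def identical_posts(posts, min_accounts=3):
    """Return each post text published by at least `min_accounts` accounts."""
    by_text = defaultdict(set)
    for handle, text in posts:
        by_text[normalize(text)].add(handle)
    return {t: h for t, h in by_text.items() if len(h) >= min_accounts}
```

Counting distinct accounts rather than posts matters here: one account repeating itself is spam, while many accounts posting the same words suggests coordination.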

3. Handle patterns

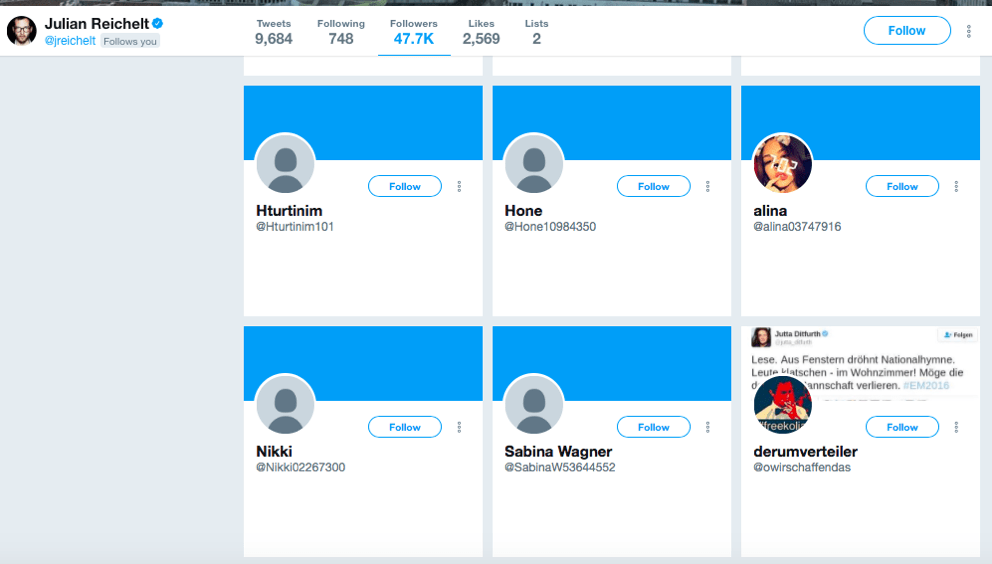

Another way to identify large botnets is to look at the handle patterns of suspicious accounts. Bot creators often use the same handle pattern when naming their bots.

For example, in January 2018, DFRLab encountered a possible botnet in which each bot had an eight-digit number at the end of its handle.

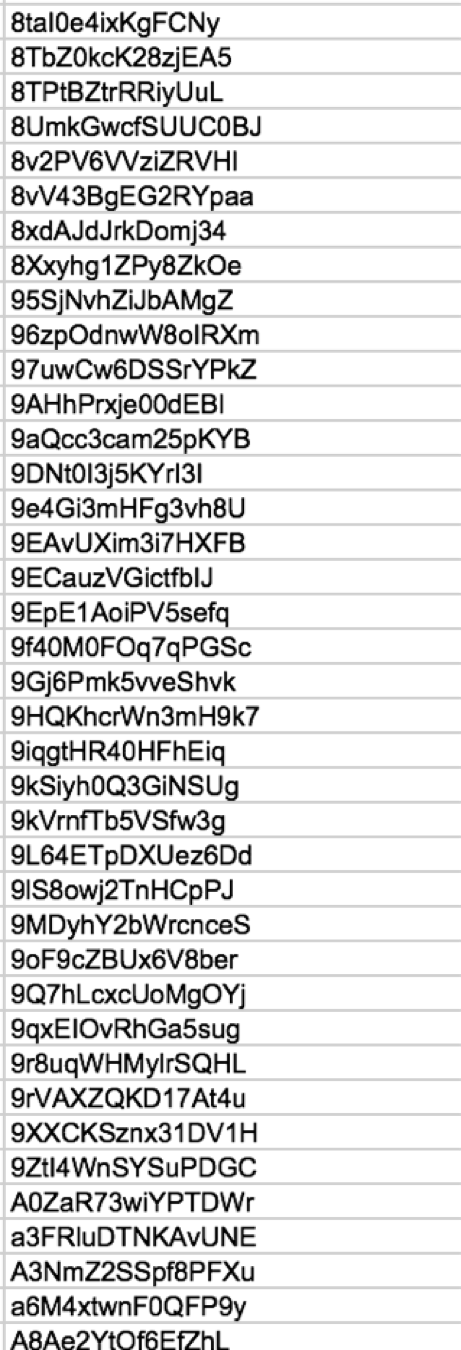

Another sign is a systematically generated alphanumeric handle. All bots in a botnet that DFRLab discovered ahead of the Malaysian election used 15-character alphanumeric handles.

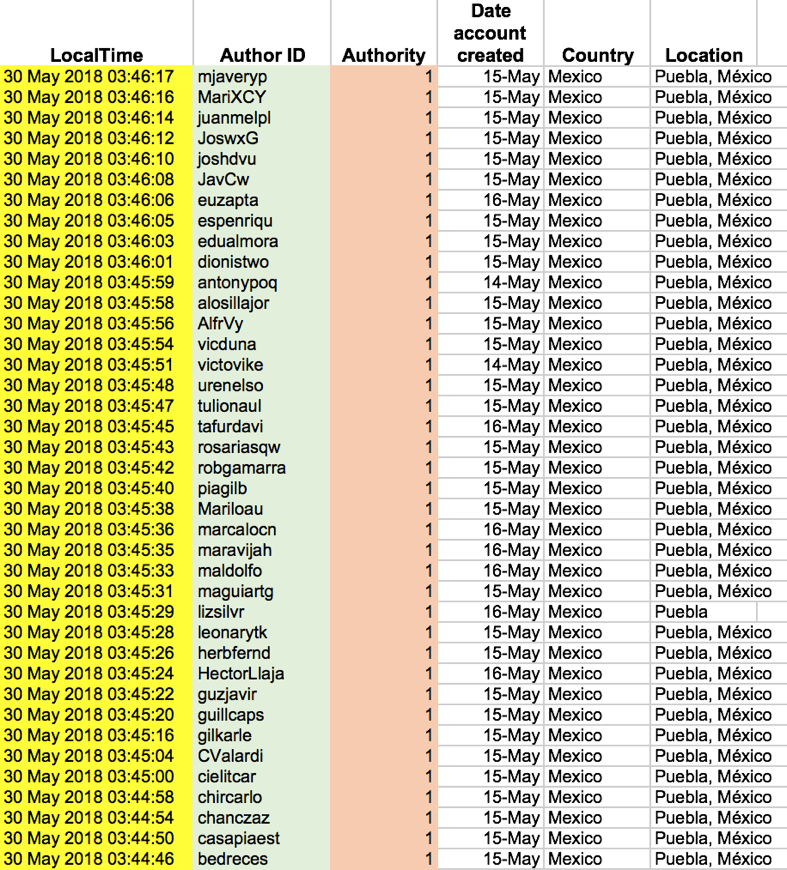
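The two handle patterns described above can be approximated with regular expressions. This is a sketch under assumptions: the exact patterns (a name followed by exactly eight digits, and a 15-character mix of letters and digits) are one reading of the examples in this section, and `handle_shape` and `shape_counts` are hypothetical helper names.

```python
import re
from collections import Counter

# A name made of letters or underscores, followed by exactly eight digits.
EIGHT_DIGIT_SUFFIX = re.compile(r"[A-Za-z_]+\d{8}")
# Exactly 15 letters and digits, with at least one of each.
FIFTEEN_ALNUM = re.compile(r"(?=.*[A-Za-z])(?=.*\d)[A-Za-z0-9]{15}")


def handle_shape(handle):
    """Classify a handle by the pattern it matches, if any."""
    name = handle.lstrip("@")
    if EIGHT_DIGIT_SUFFIX.fullmatch(name):
        return "name + 8 digits"
    if FIFTEEN_ALNUM.fullmatch(name):
        return "15-char alphanumeric"
    return "other"


def shape_counts(handles):
    """Count how many handles in a suspected network share each shape."""
    return Counter(handle_shape(h) for h in handles)
```

Real users also end up with handles that carry trailing digits, so a single match means little. The signal is a large share of the suspected network falling into one shape.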

4. Creation date and time

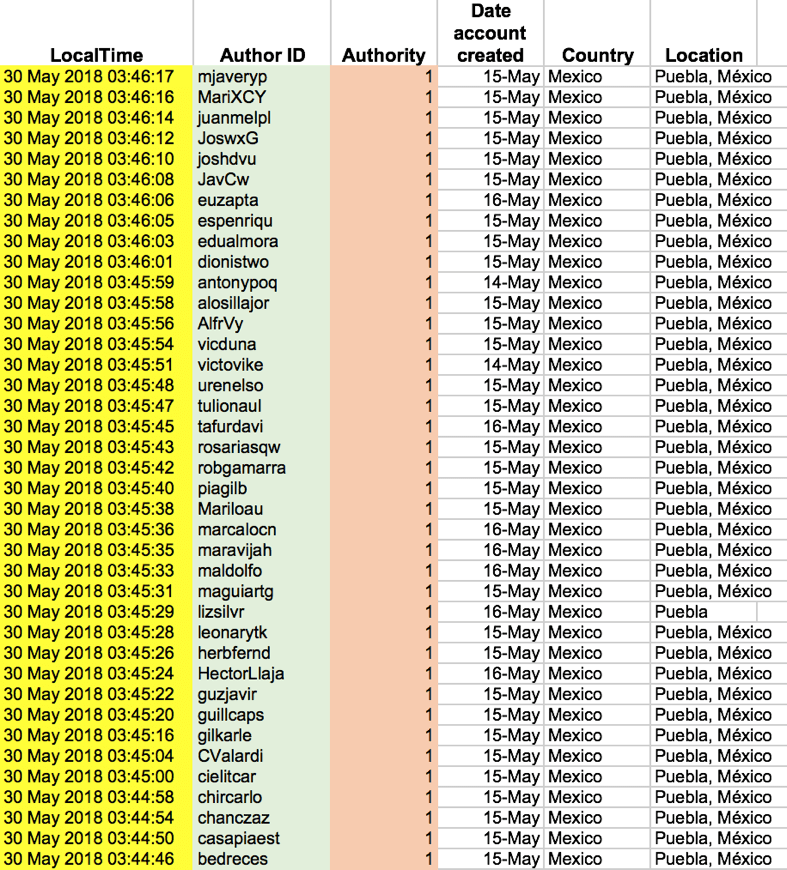

Bots belonging to the same botnet tend to exhibit similar creation dates.

If you come across dozens of accounts that were all created on the same day or within the same week, it is a sign that these accounts may be part of the same botnet.
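Clusters of creation dates can be counted directly. The sketch below assumes a hypothetical mapping from handle to account creation time, read off the profiles or from an export. The `creation_bursts` name and the default threshold of 24 accounts (a loose reading of "dozens") are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime


def creation_bursts(created_at, min_accounts=24, by_week=False):
    """Return the days (or ISO weeks) on which many accounts were created.

    `created_at` maps each handle to a datetime. Keys of the result are
    dates, or (ISO year, ISO week) tuples when `by_week` is True.
    """
    def bucket(ts):
        return tuple(ts.isocalendar())[:2] if by_week else ts.date()

    counts = Counter(bucket(ts) for ts in created_at.values())
    return {key: n for key, n in counts.items() if n >= min_accounts}
```

Usage would look like `creation_bursts(created_at, min_accounts=24)` for same-day clusters, or with `by_week=True` to catch registrations spread over a few days.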

5. Identical Twitter activity

Another botnet indicator is identical activity.

If multiple accounts are performing the exact same tasks on Twitter, or engaging in exactly the same way, they are likely part of the same botnet.

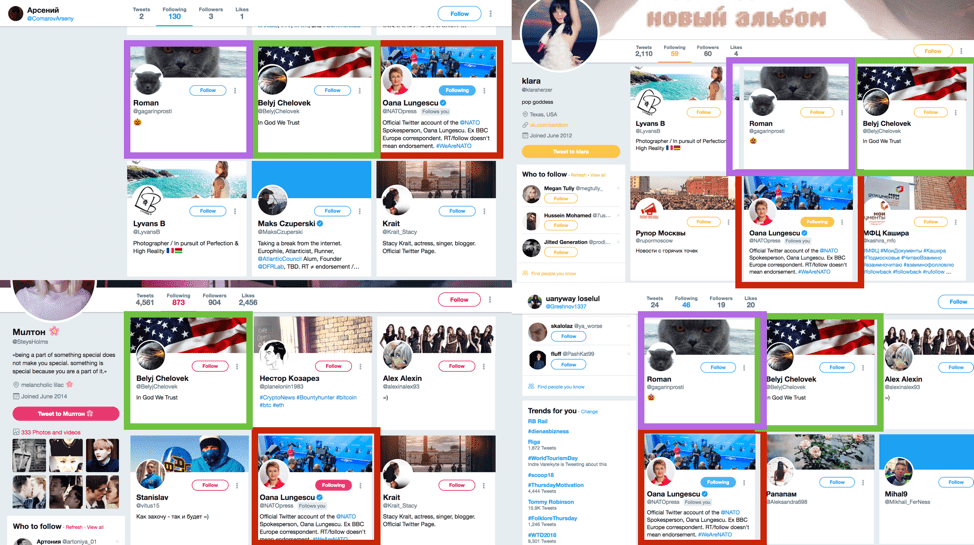

For example, back in August 2017, DFRLab's botnet research found bots that all followed the same three seemingly unconnected accounts: NATO spokesperson Oana Lungescu, a suspected bot herder (@belyjchelovek), and an account with a cat avatar (@gagarinprosti).

Such unique activity - for example, following the same unrelated users in a similar sequence - is more than just coincidence and therefore serves as a strong botnet indicator.
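Shared following behavior can be checked by counting which accounts are followed by nearly every member of the suspected network. This sketch assumes a hypothetical mapping from each suspect handle to the list of accounts it follows. It ignores the order in which accounts were followed, which would need timestamps, and the `widely_followed` name and `min_share` default are illustrative choices.

```python
from collections import Counter


def widely_followed(following, min_share=0.9):
    """Return accounts followed by at least `min_share` of the suspects.

    `following` maps each suspect handle to the accounts it follows.
    """
    total = len(following)
    if total == 0:
        return set()
    # Count each followed account once per suspect.
    counts = Counter(
        target for targets in following.values() for target in set(targets)
    )
    return {t for t, n in counts.items() if n / total >= min_share}
```

Popular accounts are followed by many unrelated people, so the result is only meaningful when the shared targets are obscure or have nothing to do with each other.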

6. Location

A final indicator (particularly common among political botnets) is the location shared by many suspicious accounts.

Political bot herders tend to set their bots' location to the place where the candidate or party they are promoting is running, in an attempt to get their content trending in that constituency.

For example, a botnet promoting two PRI candidates in the state of Puebla before the Mexican election used Puebla as its location.

This was likely done to ensure that real Twitter users from the state of Puebla could see tweets and posts that were automatically amplified by the bot.
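A shared location is simple to measure once the profile location fields are collected. The sketch below assumes a hypothetical list of free-text profile locations, one per suspect account, with empty strings for accounts that list none. The `dominant_location` helper is an illustrative name, and the comparison is a plain case-insensitive match, so spelling variants are counted separately.

```python
from collections import Counter


def dominant_location(locations):
    """Return the most common location and the share of accounts using it.

    Blank locations count toward the total but never win. Returns None
    when no account lists a location.
    """
    cleaned = [loc.strip().casefold() for loc in locations if loc and loc.strip()]
    if not cleaned:
        return None
    place, count = Counter(cleaned).most_common(1)[0]
    return place, count / len(locations)
```

A high share for one place supports the location indicator, but it should still be weighed alongside the other indicators rather than on its own.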

Are all bots political bots?

No, most bots are commercial bot accounts, meaning they are run by groups and individuals who amplify whatever content they are paid to promote.

Commercial bots can certainly be hired to promote political content.

Political bots, on the other hand, are created with the sole purpose of amplifying the political content of a specific party, candidate, interest group, or viewpoint.

DFRLab discovered multiple political botnets that coordinated operations to promote PRI party candidates in the state of Puebla ahead of the Mexican elections.

Are all Russian bots affiliated with Russia?

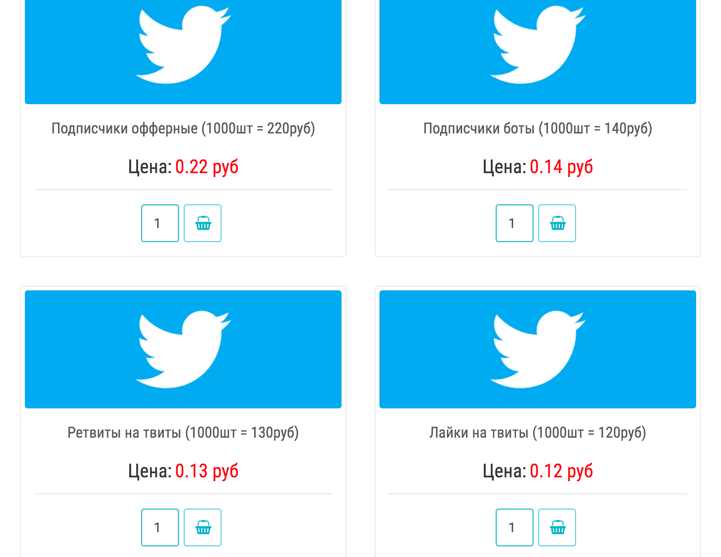

No, many botnets with Russian/Cyrillic IDs or usernames are run by entrepreneurial Russians looking to make a living online.

There are many companies and individuals openly selling Twitter, Facebook and YouTube bot accounts on the Russian Internet, as well as engagement, retweet and like services.

The same is true in China.

Although their services are very cheap ($3 for 1,000 followers), a bot herder with 1,000 bots can serve 10 customers per day and earn over $33 per day.

This means they can earn $900 a month, which is twice the average salary in Russia.

DFRLab has observed Russian commercial botnets amplifying political content around the world.

For example, a botnet discovered ahead of the Mexican election amplified the Green Party in Mexico. These bots were not political, however: they amplified accounts ranging from Japanese tourism mascots to insurance company CEOs.

In conclusion

Bots, botnets, and trolls are easy to distinguish and can be identified using the right methods and tools.

However, one thing to remember is that an account should not be labeled a bot or a troll until careful analysis has shown it to be one.

This post was originally published by the Atlantic Council's Digital Forensic Research Lab (DFRLab). Donara Barojan is a Digital Forensic Research Associate at DFRLab.
