📝📝Rebel of Reality Ep. 4|From Analog to Digital

Images belong to the medium of vision, and "vision" here means vision from the human perspective; the discussion, criticism, and visual-culture research that followed have likewise all focused on human society. In 1960, scientists at Bell Labs in the United States came up with a new idea:

How can I make my computer read photos too?

This question is not just a cross-media transformation but an inversion of the nature of the image. Over the history of images, the media that carry them have become ever thinner and lighter: tin plates, silver plates, glass plates, paper, and eventually celluloid. Every photographer, scientist, entrepreneur, and engineer seemed equally ambitious about improving the image carrier. But once the carrier had reached the film negative, could there be a next step? The revolutionary technologies of the digital age seemed to offer a new answer and gave scientists the idea of digitizing images.

╴

First Digital Image

The world's first digital image appeared in 1957, when Russell Kirsch, a computer engineer at the National Bureau of Standards (NBS, today the National Institute of Standards and Technology, NIST), used the first-generation computer "SEAC" (Standards Eastern Automatic Computer) to scan a photo of his son Walden, who was almost three months old at the time. The image was divided into 176 pixels on each side, so this almost mosaic-like picture has only 176 × 176 = 30,976 pixels. Compared with today, when anyone can shoot tens of millions of pixels with a single camera, that resolution is almost unbelievably low. On top of that, the scanning process at the time was also very complicated.

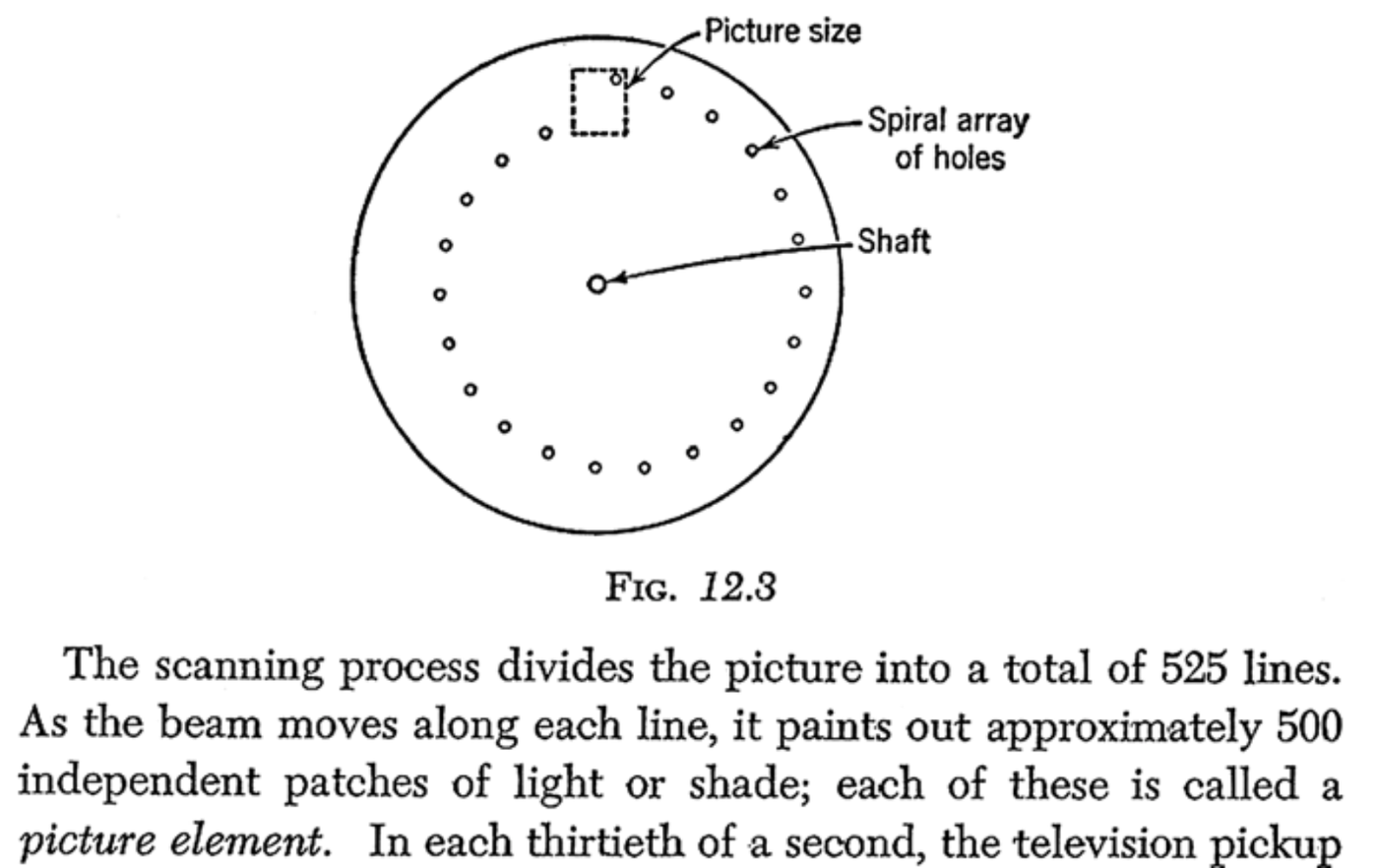

The human eye has an optic nerve to perceive its surroundings, but machines have no such developed sensory nerves. Machines are, however, good at processing data, so the approach at the time was to quantize the original image into data a computer can read. The human eye can tell colors apart because its cone cells respond to different colors; the photoreceptors of the 1960s, by contrast, could only sense the intensity of light (bright and dark), not its wavelength (color), which is why the first generation of digital images was black and white.
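To make "turning an image into data" concrete, here is a minimal sketch in Python. It is not a reconstruction of how SEAC actually worked: the `brightness` function is a made-up scene, and the single brightness threshold is an assumption chosen for simplicity. It only illustrates the two steps involved, sampling a scene on a 176 × 176 grid and reducing each sample to a number the machine can store.

```python
import math

SIDE = 176  # pixels per side, as in the 1957 scan described above


def brightness(x: float, y: float) -> float:
    """Hypothetical continuous scene: brightness in [0, 1] at a point of the unit square."""
    return 0.5 + 0.5 * math.sin(6 * x) * math.cos(6 * y)


def scan(side: int = SIDE, threshold: float = 0.5) -> list[list[int]]:
    """Sample the scene on a side x side grid and quantize each sample to 0 (dark) or 1 (bright)."""
    image = []
    for row in range(side):
        y = (row + 0.5) / side  # sample at the centre of each grid cell
        line = []
        for col in range(side):
            x = (col + 0.5) / side
            # Assumed quantization rule: one threshold, one bit per pixel.
            line.append(1 if brightness(x, y) >= threshold else 0)
        image.append(line)
    return image


image = scan()
total_pixels = sum(len(line) for line in image)
print(total_pixels)  # 30976
```

Each pixel here records brightness only, which is exactly why an image captured this way carries no color information.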

On the road to better digital images, scientists and engineers always wanted to turn black and white images into a world of color. However, advances in photosensitive elements improved image quality without changing the fact that the overall image was still monochrome black and white. So at this point we can ask a question:

How do I convert my old black and white photos to color?

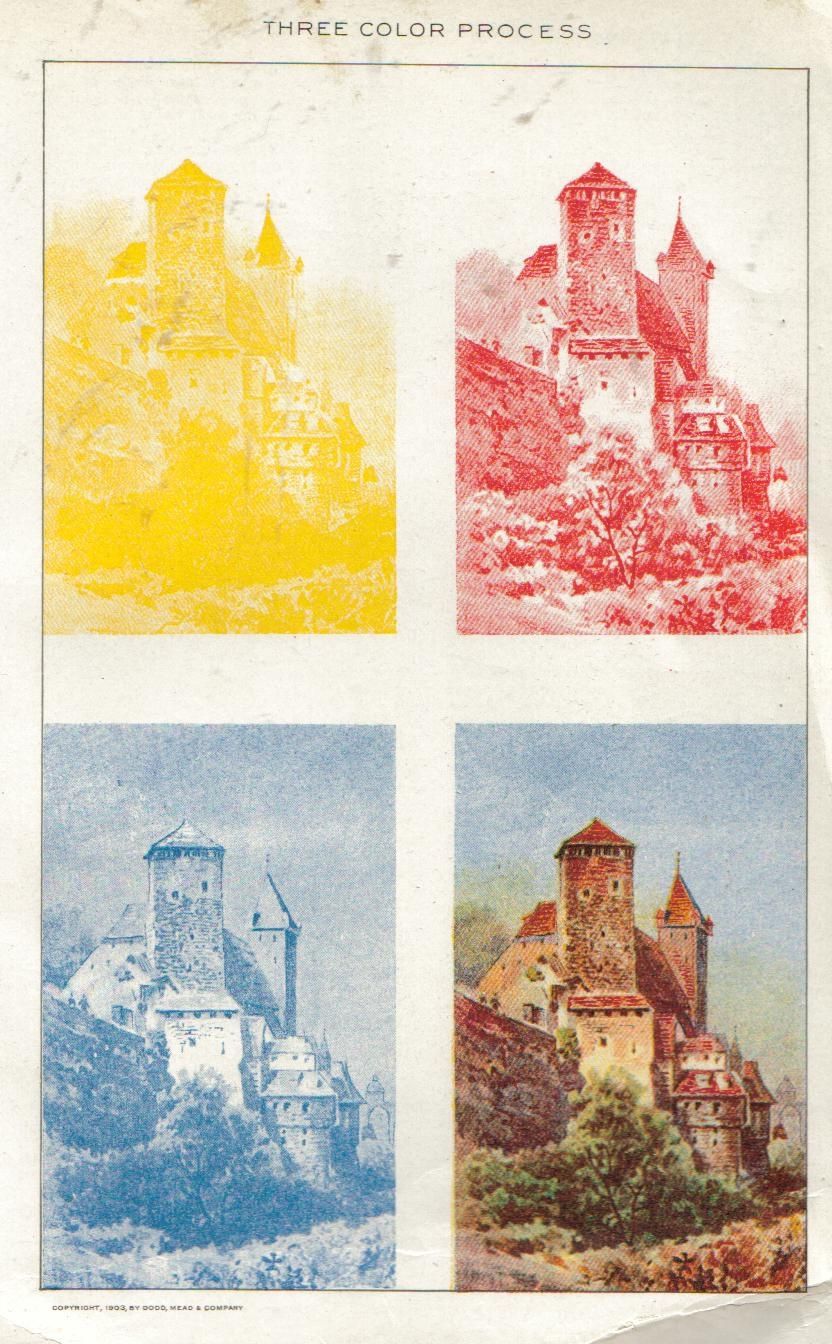

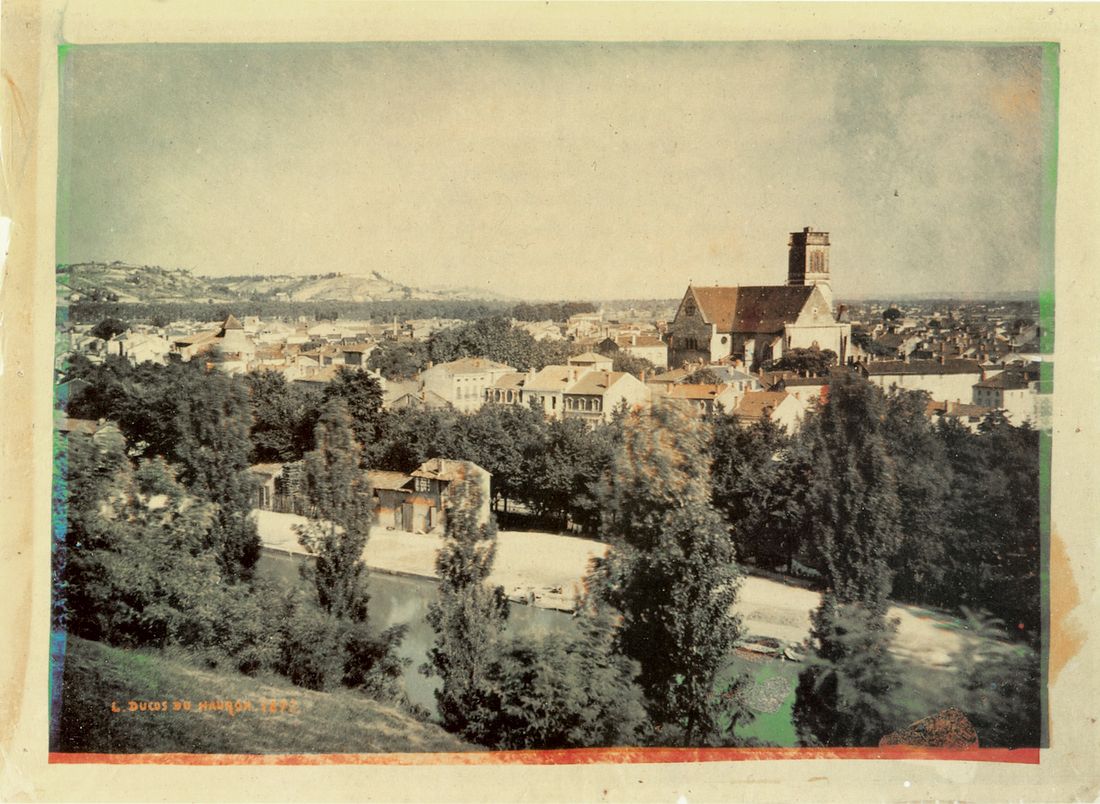

Let us return to how color is composed. The three primary colors of light are red, green, and blue, and the three primary colors of pigment are red, yellow, and blue. The colorful world the human eye sees comes from the combination of visual signals that the three primary colors of light produce in the cone cells, while a color photo is formed by superimposing the three pigment primaries. So to make a color photo in the early silver-salt era, the exposure had to be done three times: red, yellow, and blue images were exposed separately, and the three color negatives were then superimposed into one color photo in post-production. In fact, color photos appeared earlier than we might think, around 1860. But in the black-and-white analog era such color photos took too much time and material to process, so most photographers and subjects at the time still preferred black and white photos. (Don't forget that some zealots were going around claiming that photography would take people's souls, to say nothing of what color photographs would do to them.)

Can the digital age replicate the methods of the analog age?

The first engineers who tried to produce color digital images adopted the method of a hundred years earlier. This time, the data for the three primary colors of the image was captured in three separate passes, and the sampled signals were then combined and edited in the computer. Color digital images can indeed be produced this way, but the engineers ran into the same problems photographers had met a century before: it consumed too much material and took too long. Furthermore, film photography already had color negatives by then (in 1972 Polaroid's "SX-70" instant camera series appeared and successfully entered the global photographic market), and the global mass market of the 1960s cared about film photography rather than about how to produce digital images. Digital imaging did rewrite what images mean to people, but such cutting-edge technology could only be pursued in the laboratory at the time, and it was not until the mid-1970s that a new wave of innovation arrived.
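The three-pass approach can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `merge_channels` and the tiny 2 × 2 captures are hypothetical, and each capture is assumed to be a grid of brightness values from 0 to 255 taken through one color filter.

```python
def merge_channels(red, green, blue):
    """Combine three same-sized grayscale captures into one grid of (R, G, B) tuples."""
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("all three captures must have the same number of rows")
    merged = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("all three captures must have the same row length")
        merged.append(list(zip(r_row, g_row, b_row)))
    return merged


# Hypothetical 2x2 captures, one pass per colour filter (values 0-255).
red_pass = [[255, 0], [0, 255]]
green_pass = [[0, 255], [0, 255]]
blue_pass = [[0, 0], [255, 255]]

color = merge_channels(red_pass, green_pass, blue_pass)
print(color[0])  # [(255, 0, 0), (0, 255, 0)]
print(color[1])  # [(0, 0, 255), (255, 255, 255)]
```

The cost is visible in the inputs: every color image needs three full captures of the same scene before any merging can happen.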

╴

Bayer Pattern

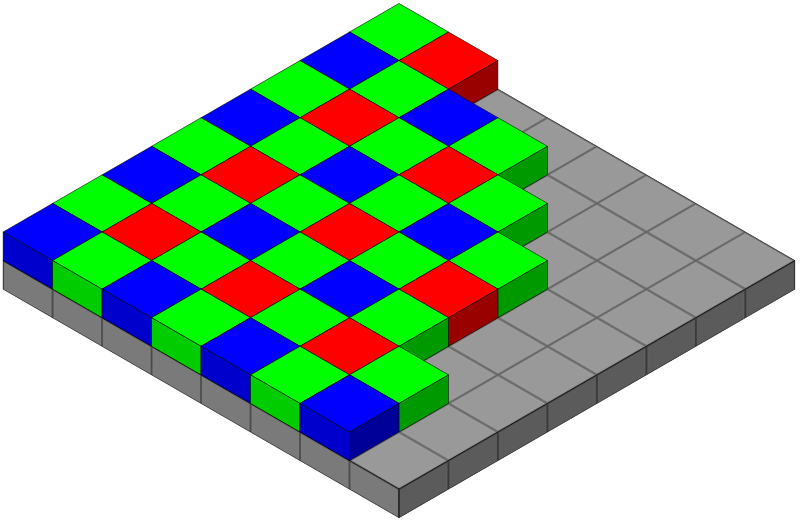

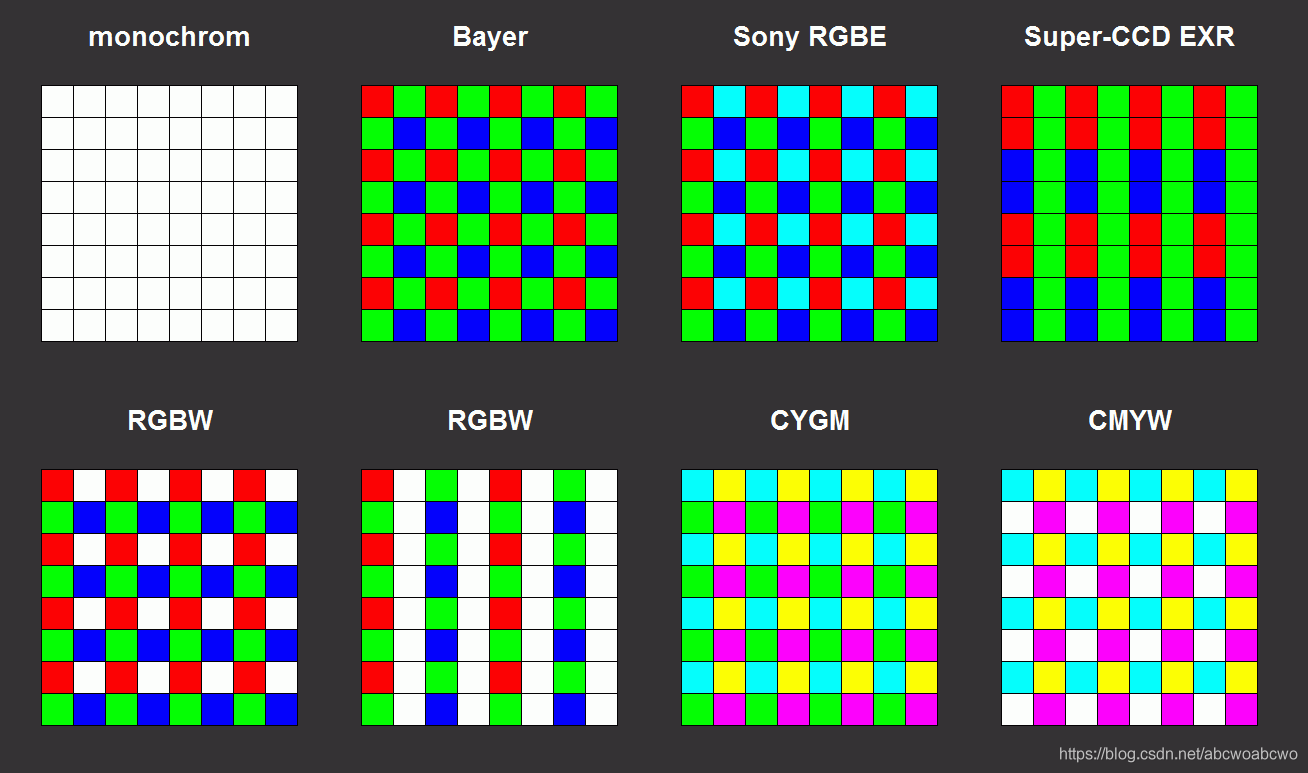

In 1970, Kodak R&D engineer Bryce E. Bayer proposed the "RGB model" to simplify the earlier, complicated procedure of processing light in separate batches. Using this model, Bayer developed the Bayer filter as a photosensitive substrate in 1974 and patented it in 1976. In the patent, Bayer described it as follows:

A Bayer filter is a "color image sensor array" consisting of alternating luminance and chrominance detection elements ... presented in a repeating pattern, with luminance detection elements dominating the array.

Unlike earlier photosensitive modules, the Bayer filter has twice as many green elements as red or blue ones, mimicking the light sensitivity of the human eye in order to deliver the sharpest full-color image. More importantly, the Bayer array breaks with the earlier imaging process that required separate batches: red, green, and blue are all laid out on one array, so the light can be captured, converted into a signal, and superimposed in a single pass.
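A small Python sketch makes the "twice as many green elements" point easy to check. It assumes the common RGGB tile layout (red and green on even rows, green and blue on odd rows); other arrangements of the same 2 × 2 tile exist, and the function names here are made up for illustration.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter colour at (row, col), assuming a repeating 2x2 RGGB tile:
        R G
        G B
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def bayer_pattern(height: int, width: int) -> list[list[str]]:
    """Build the grid of filter colours for a sensor of the given size."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


pattern = bayer_pattern(4, 4)
for line in pattern:
    print(" ".join(line))

counts = Counter(color for line in pattern for color in line)
print(counts["R"], counts["G"], counts["B"])  # 4 8 4
```

Every sensor element sits under exactly one color filter, so a single exposure records all three primaries at once, just not all three at every position.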

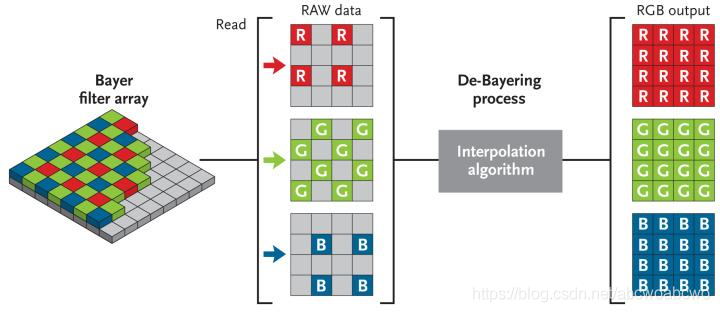

You can see that while such an array does shorten the processing steps, the world seen through this filter looks as if it were covered in green cellophane. The captured light therefore has to be processed after it passes through the Bayer array: engineers run the sampled raw data (RAW data) through a computation called the "De-Bayering Process", which redistributes the collected primary colors evenly across the red, green, and blue matrices and so balances out the excess green in the digital image.
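One of the simplest ways to de-Bayer an image is to fill in the two missing colors at each pixel by averaging the nearest samples of that color. The Python sketch below does exactly that. It is a teaching example rather than what any real camera runs: it assumes an RGGB layout, a raw image of at least 2 × 2 values, and the names `bayer_color` and `demosaic` are hypothetical.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter colour at (row, col), assuming a repeating 2x2 RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Turn a Bayer mosaic (one value per pixel) into full (R, G, B) pixels.

    The colour measured at a pixel is kept as is; each missing colour is the
    average of the same-coloured samples in the surrounding 3x3 window.
    """
    height, width = len(raw), len(raw[0])
    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            pixel = []
            for channel in "RGB":
                if bayer_color(r, c) == channel:
                    pixel.append(float(raw[r][c]))  # measured directly
                    continue
                samples = [
                    raw[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, height))
                    for cc in range(max(c - 1, 0), min(c + 2, width))
                    if bayer_color(rr, cc) == channel
                ]
                pixel.append(sum(samples) / len(samples))
            out_row.append(tuple(pixel))
        result.append(out_row)
    return result


# Hypothetical raw capture of a pure red scene: only the red elements respond.
raw = [[200 if bayer_color(r, c) == "R" else 0 for c in range(4)] for r in range(4)]
rgb = demosaic(raw)
print(rgb[1][1])  # (200.0, 0.0, 0.0)
```

The pixel at row 1, column 1 sits under a blue filter and measured nothing, yet after interpolation it comes out red like its neighbors. Production demosaicing algorithms are considerably more elaborate, mainly to avoid color fringes along sharp edges.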

The RGB model and the Bayer array that Bayer proposed have influenced image processing technology ever since. In much contemporary image processing software, in digital camera sensors, and even in displays such as LCD screens, you can find traces of the RGB model and the Bayer array in the design.
