Machines and Artists Using the Collective Mind

In high school, I loved a science fiction novel by Robert J. Sawyer, winner of the Nebula, Hugo, and Campbell awards, called WWW: Wake. Roughly, the story follows a brilliant blind girl who, after being fitted with an electronic eye, witnesses the birth of a mind on the Internet because of a glitch in the device. Through the protagonist's electronic eye, this cyber-mind gets the chance to interact with the world....

Fast forward ten years to today. Over the past few years, OpenAI and Google have released a string of revolutionary AI systems, including AlphaGo (playing Go), GPT-3 (language generation), and AlphaFold (protein structure prediction), each of which makes me feel the world is evolving at the speed of light.

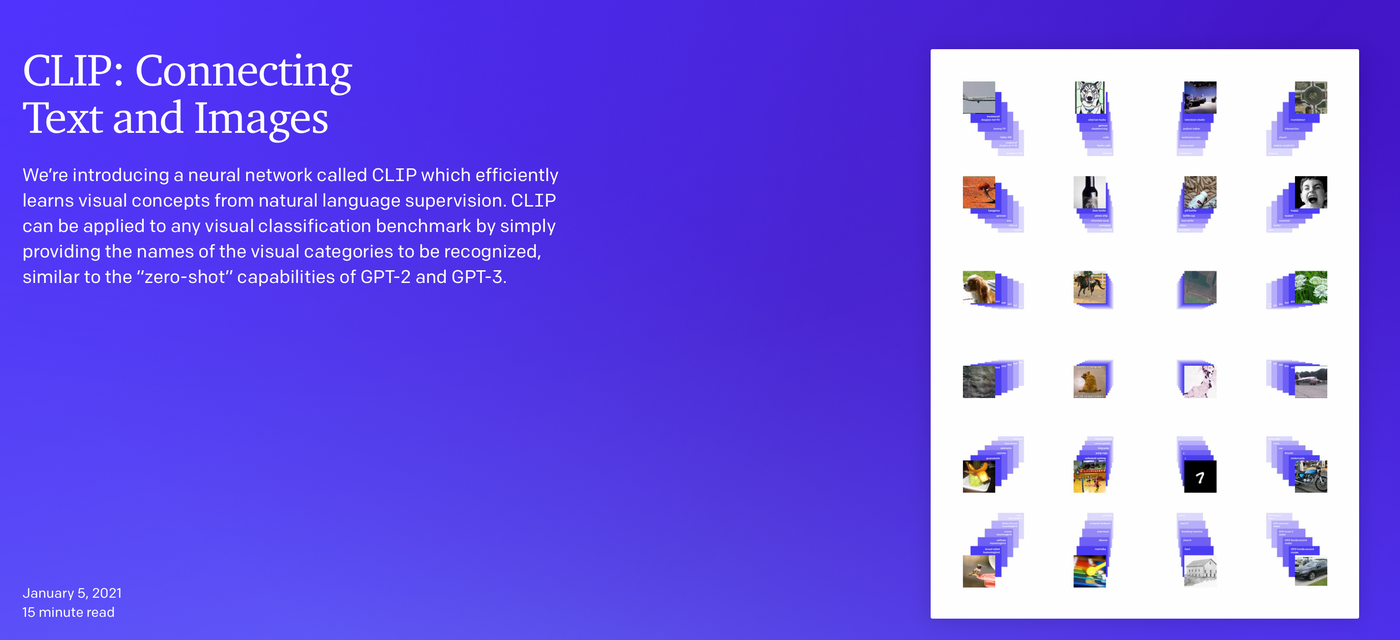

In January of this year, OpenAI extended its reach into image generation. Its DALL-E system can generate endless pictures from an input sentence (text-to-image). Behind it is a text-image matching engine, CLIP (Contrastive Language-Image Pre-Training), which OpenAI released as open-source software. In the past few months, image generation has advanced by leaps and bounds, with new ways to play appearing constantly.

Just last week, the Pixray website launched, letting ordinary people type in a sentence and get back a magical AI-generated picture, and even mint it as an NFT with one click. Two weeks ago, a post I shared on this app was shared more than 800 times. I was surprised, and felt deeply that a new era had arrived.

Before we knew it, the era of collective consensus had arrived, and all of us had been swept up in the Internet's game.

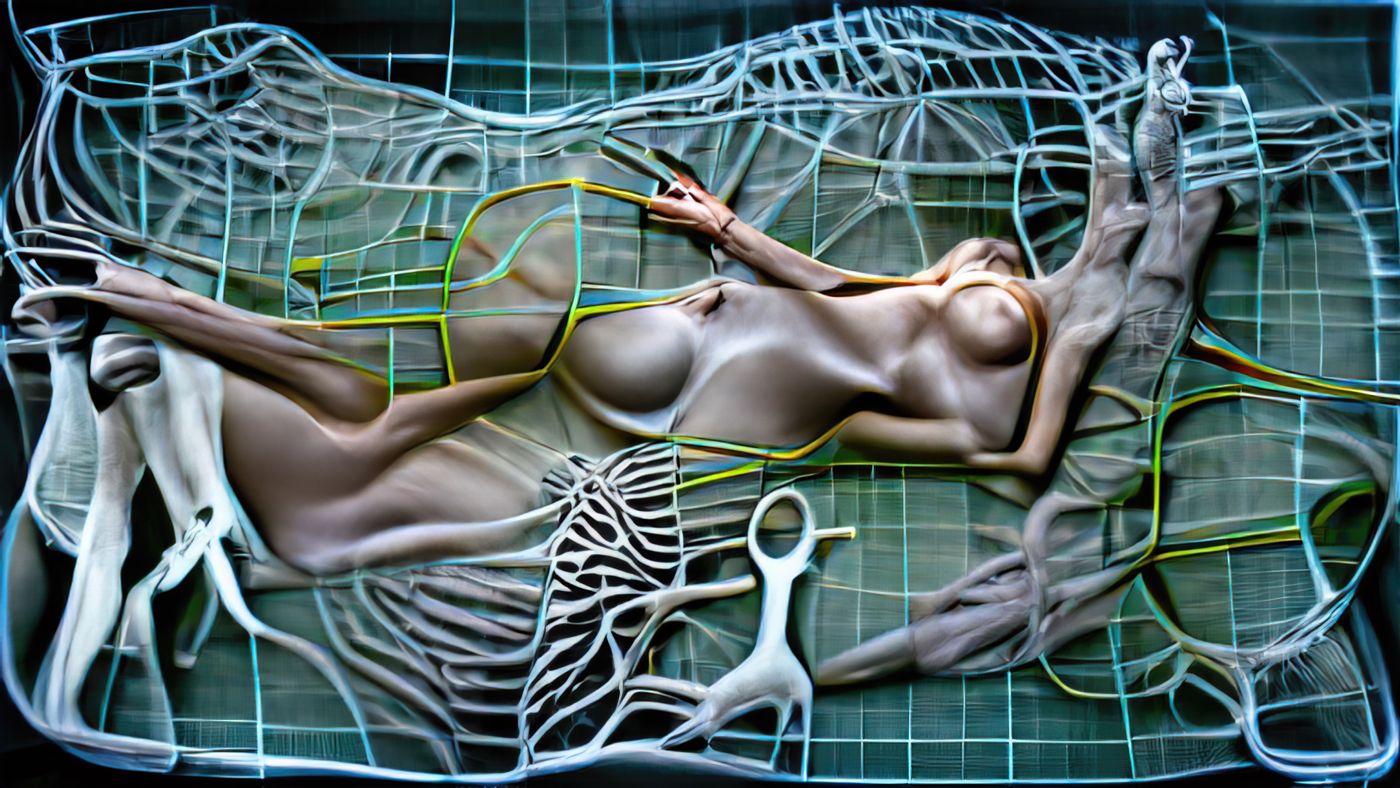

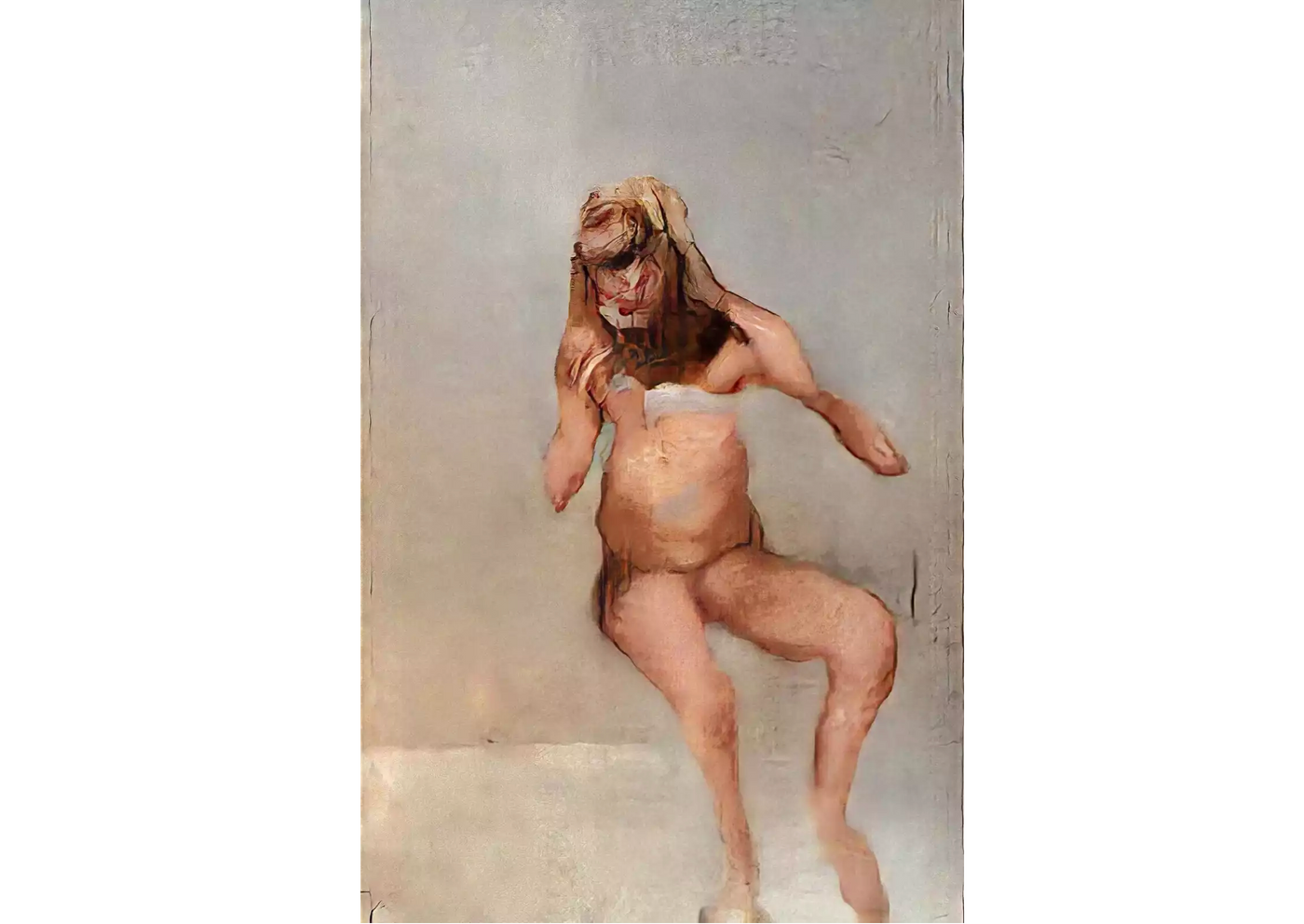

I showed Pixray to my artist friend Zhang Mingyao, and he seemed possessed, generating pictures day and night. By adjusting the words and re-rolling the images, he coaxed the pictures toward what he intended. He must have generated more than 10,000 pictures by now. Standing on the corpses of all those other pictures, nine have been born so far. They make up the series "Genesis", which sold out almost instantly in the past few days.

Today I won't talk about NFT sales strategy or image aesthetics. I only want to discuss what this collective consensus born from the Internet is, and whether what Zhang Mingyao is doing counts as co-creation with a collective mind.

In fact, AI art is nothing new. AI art pioneer Mario Klingemann won the Lumen Prize in 2018 for "The Butcher's Son", which was produced with a GAN (Generative Adversarial Network). There is also Memo Akten's octopus piece "Distributed Consciousness", likewise created with a GAN. Taiwanese artist Lai Zongyun's "Ancestor" was probably created in a similar way.

“How will we be able to make a living if machines take over our creative jobs?”

- Mario Klingemann

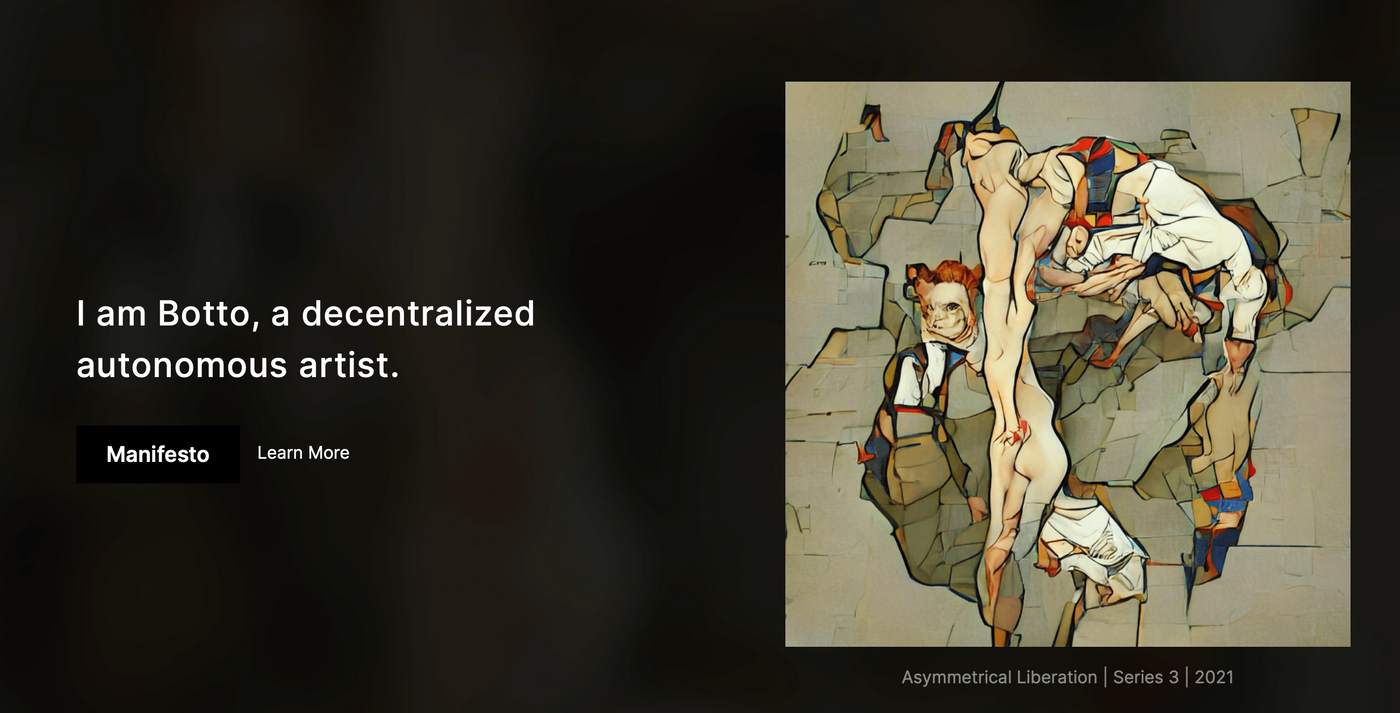

At the end of October, Mario Klingemann launched Botto, an AI machine artist backed by its own token economy, $Botto. The creative principle behind it is the same as that of Pixray mentioned above. The machine regularly takes in its community's input and creates from text sentences, and its works have already traded for more than 33 million Taiwan dollars. The whole strategy and its execution are thought-provoking, and the works are beautiful.

The two cases of "Zhang Mingyao x Pixray" and "Botto" left me unable to calm down for a long time. I felt an unprecedented, profound shock, as if I could reach out and touch the stars.

I looked carefully into where this shock came from and found that it is not because AI can create and evolve on its own. It is the core soul behind these two machines: the CLIP algorithm.

This algorithm feeds on the Internet footprint of all humankind.

I am a rookie master's student at an AI-related graduate institute, and I am not that familiar with the code and methodology, so please correct me if I have gotten anything wrong.

Let's start with the conclusion. CLIP was not trained on a pre-prepared, hand-labeled dataset; it was trained on pictures with text captions collected from the Internet (about 400 million image-text pairs, according to OpenAI), so it is hard for us to predict exactly what CLIP has learned. When using CLIP for a new task, there is no need to prepare and train on a new image dataset. This ability is called zero-shot learning.
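To make "zero-shot" a little more concrete: CLIP maps images and texts into the same embedding space, so classifying an image can amount to comparing its embedding with the embeddings of some candidate captions and picking the closest one. With OpenAI's released `clip` package the embeddings would come from `model.encode_image` and `model.encode_text`; in this minimal sketch they are replaced by made-up toy vectors so it runs on its own, and the helper names are hypothetical.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a, b):
    """Cosine similarity between two vectors."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))

def zero_shot_classify(image_emb, caption_embs, temperature=100.0):
    """Pick the caption whose embedding is closest to the image embedding.

    image_emb: toy stand-in for an image-encoder output (assumption).
    caption_embs: dict of caption text -> toy stand-in for a text-encoder output.
    temperature: logit scale; CLIP learns this, 100 is an illustrative value.
    Returns (best_caption, probabilities) from a softmax over scaled similarities.
    """
    captions = list(caption_embs)
    logits = [temperature * cosine(image_emb, caption_embs[c]) for c in captions]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = {c: e / total for c, e in zip(captions, exps)}
    return max(probs, key=probs.get), probs

# Made-up 3-D embeddings, not real CLIP outputs.
captions = {
    "a photo of the Eiffel Tower": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.0, 0.8, 0.6],
    "a photo of a bowl of noodles": [0.1, 0.0, 1.0],
}
image = [0.85, 0.2, 0.05]

best, probs = zero_shot_classify(image, captions)
print(best)  # → a photo of the Eiffel Tower
```

Adding a new category here only means writing one more caption; nothing is retrained, which is the sense in which the approach is "zero-shot".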

Take all the pictures on the Internet that come with text descriptions: whose descriptions are they? Who has the right to interpret them?

Not scientists, not student part-timers labeling data, but "us".

Going a step further, it is the interpretation of every Internet user, past and present.

Whatever we think and write down, artificial intelligence digests and learns, becoming a soulless but profoundly knowledgeable artist. A human summons the AI by chanting a spell, and it produces a work for you. So who created this work? The spell, the AI, or all of humanity?

For example, when you type "Eiffel Tower" into Pixray, the machine spits out a picture of an iron spire seen from the outside, not looking up from the base of the tower, nor looking down from its top. Why? Because humanity collectively agrees that the Eiffel Tower is a spire seen from afar.

The Eiffel Tower is a very clear symbol, and the image of it in everyone's mind is roughly the same.

This is the collective mind, and the AI algorithm takes this mind and shows it to everyone.

So what are Zhang Mingyao and the $Botto holders doing? They have become creators working with a new tool.

Prompt Engineering (I'm not sure how to translate it into Chinese; I'll call it "guiding engineering") is the name of this creative method. Stacking up different words is like a brush layering paint on a canvas, again and again.

This is not an easy task. Paints have tones, brushes have techniques, and compositions use various vanishing-point perspectives (digging out what I learned in junior high art class...). Prompt Engineering likewise has to reverse-engineer the collective mind the AI was fed, working through vocabulary: nouns, adjectives, verbs, prepositions, directional cues, and so on.
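One way to picture "stacking words" in code: each phrase of a prompt becomes its own target, and a candidate image is scored by a weighted sum of its similarities to each phrase, with a negative weight pushing away from unwanted qualities. VQGAN+CLIP notebooks commonly treat multiple prompts as separate weighted terms in this spirit, but this is a sketch under that assumption; the phrases, weights, and toy embedding table are all hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def stacked_prompt_score(image_emb, weighted_prompts, embed):
    """Weighted sum of image-text similarities, one term per prompt phrase.

    weighted_prompts: list of (phrase, weight); negative weights penalize a phrase.
    embed: hypothetical function mapping a phrase to a text embedding.
    """
    return sum(w * cosine(image_emb, embed(p)) for p, w in weighted_prompts)

# Hypothetical lookup table standing in for a real text encoder.
TOY_TEXT_EMBEDDINGS = {
    "a temple in the clouds": [1.0, 0.0, 0.0],
    "oil painting": [0.0, 1.0, 0.0],
    "blurry": [0.0, 0.0, 1.0],
}
embed = TOY_TEXT_EMBEDDINGS.__getitem__

# Subject + style, minus an unwanted quality.
prompt = [("a temple in the clouds", 1.0), ("oil painting", 0.5), ("blurry", -0.5)]
sharp_painting = [0.8, 0.6, 0.0]   # toy "image" embeddings
blurry_painting = [0.6, 0.4, 0.7]

print(stacked_prompt_score(sharp_painting, prompt, embed))
print(stacked_prompt_score(blurry_painting, prompt, embed))
```

Adding, removing, or reweighting a phrase changes which image scores best, which is roughly what adjusting a prompt word by word does.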

Yet even for the same sentence, the machine rolls out a different picture each time, because of the nature of the training set and of the algorithm, which typically starts each run from a random starting point.
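Part of why the same sentence can yield different pictures is that generation typically starts from random noise. The toy below is hypothetical, not Pixray's code: a stand-in `generate` refines a seeded random vector toward a fixed "prompt target" for a limited number of steps, so the result still carries a trace of where it started.

```python
import random

def generate(target, seed, steps=20, lr=0.02):
    """Toy stand-in for one text-to-image run (hypothetical).

    target: a vector standing in for "what the prompt asks for".
    seed: controls the random starting noise, like a run's random seed.
    Each step moves the vector a small fraction of the way toward the target;
    after only a few steps, the result still depends on the random start.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        x = [xi + lr * (ti - xi) for xi, ti in zip(x, target)]
    return x

target = [0.5, -0.2, 0.8]
run_a = generate(target, seed=1)
run_b = generate(target, seed=1)
run_c = generate(target, seed=2)
print(run_a == run_b)  # → True (same seed, same result)
print(run_a == run_c)  # → False (different seed, different result)
```

Fixing the seed makes a run reproducible; changing it is the "dice roll" that gives a new picture for the same prompt.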

What a poetic creative process.

Thanks to advances in artificial intelligence, humans today can use poetry to create images.

This is why I am shocked.

This is a new canvas and brush, one that reflects the images we habitually hold in our hearts.

(That is the end of the main article. If you are interested in how it works, read on.)

Excerpts from Zhang Mingyao's works

"As the technology for generating images with artificial intelligence matures, generative art seems to be left with only two paths: influencing the machine's computational logic through programming, or handing creative consciousness over to the machine. Creativity no longer seems to be in human hands. So, by constantly negotiating with machines, might we call forth a renaissance within generative art?"

"The series 'Genesis' uses Pixray's image-generation website, repeatedly revising text strings and negotiating with the machine, without writing any commands or syntax, to generate images that meet expectations. Besides alluding to generative art, the title also links to Michelangelo's 'Genesis', to explore the possibility of creating hand in hand with machines and to reflect on the 'humanist' spirit when one gives up programming but not the consciousness of image creation."

The Principle Behind the VQGAN+CLIP Algorithm

Looking at the combination of algorithms behind Pixray, the engineer Dribnet integrated Perception Engines, VQGAN+CLIP, and Sampling Generative Networks to build the Pixray creation platform. If you are interested, go and play with it; it's simple and fun. Here I will only briefly explain what VQGAN+CLIP is.

As mentioned above, OpenAI introduced CLIP in January this year, and the newer fusion technique of CLIP-guided GAN imagery was developed by Ryan Murdock and Katherine Crowson in April. By November, I could already see platforms with a GUI (graphical user interface, meaning a service you can play with without opening a terminal) built on this technology and open to the public. I am genuinely surprised at how fast science and technology are progressing. Open source truly is one of the most efficient ways forward.

Simply put, VQGAN+CLIP is a text-to-image tool: give it a text prompt and it gives you pictures. It has set off a new wave of creative AI tools.

VQGAN and CLIP are two separate neural network architectures. VQGAN stands for Vector Quantized Generative Adversarial Network; it combines convolutional neural networks with the well-known Transformer architecture (the "dad" of BERT and GPT). In plain terms, it is the part that produces the pictures. CLIP stands for Contrastive Language-Image Pre-Training; it judges which image best matches a text description. DALL-E, which OpenAI launched at the same time, was trained on hundreds of millions of image-text pairs, and CLIP was likewise trained on image-text pairs gathered from the Internet rather than on a hand-labeled dataset.
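The "vector quantized" part of VQGAN can be illustrated in a few lines: each vector produced by the encoder is replaced by the nearest entry in a codebook, so an image becomes a grid of discrete codebook indices. This is a minimal sketch of that nearest-neighbor lookup only; the tiny codebook and "patch" vectors are made up, whereas a real VQGAN learns a much larger codebook during training.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(vectors, codebook):
    """Map each vector to the index of its nearest codebook entry.

    Returns (indices, quantized_vectors): the discrete codes, and the codebook
    vectors that replace the continuous encoder outputs.
    """
    indices = []
    for v in vectors:
        best = min(range(len(codebook)), key=lambda k: squared_distance(v, codebook[k]))
        indices.append(best)
    return indices, [codebook[k] for k in indices]

# Toy 2-D codebook with 4 entries (made up for illustration).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Toy "encoder outputs" for a 2x2 grid of image patches, flattened.
patches = [[0.1, 0.2], [0.9, 0.1], [0.2, 0.8], [0.7, 0.9]]

indices, quantized = quantize(patches, codebook)
print(indices)  # → [0, 1, 2, 3]
```

The decoder then turns the quantized vectors back into pixels; in VQGAN+CLIP, it is the latent fed to that decoder that gets adjusted.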

The creator supplies text, VQGAN produces a picture, and CLIP tells VQGAN how well the picture matches, with the standard of right and wrong set by the crystallized judgment of everyone on the Internet. The process iterates this way until the creator says stop.
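The loop just described can be sketched as gradient ascent on a similarity score: start from a random latent, generate an "image", ask a scorer how well it matches the text, nudge the latent to raise the score, and repeat until a stopping point. Real implementations backpropagate through the actual VQGAN and CLIP networks with automatic differentiation; in this toy, both networks are replaced by hypothetical stand-in functions and the gradient is estimated numerically, so only the shape of the loop carries over.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def toy_generator(z):
    """Hypothetical stand-in for the VQGAN decoder: latent vector -> "image" vector."""
    return [math.tanh(v) for v in z]

def toy_clip_score(image, text_emb):
    """Hypothetical stand-in for CLIP: similarity of image and text embeddings."""
    return cosine(image, text_emb)

def numerical_grad(f, z, eps=1e-5):
    """Central-difference estimate of the gradient of scalar function f at z."""
    grad = []
    for i in range(len(z)):
        up, down = z[:], z[:]
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def generate_from_text(text_emb, steps=500, lr=0.3, stop_at=0.99, seed=0):
    """Adjust the generator's latent so the generated image matches the text.

    Stops when the score reaches stop_at (standing in for the creator saying
    "stop") or when the step budget runs out.
    """
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in text_emb]  # random starting latent

    def objective(latent):
        return toy_clip_score(toy_generator(latent), text_emb)

    history = [objective(z)]
    for _ in range(steps):
        if history[-1] >= stop_at:
            break
        g = numerical_grad(objective, z)           # the scorer's "feedback"
        z = [zi + lr * gi for zi, gi in zip(z, g)]  # gradient ascent step
        history.append(objective(z))
    return toy_generator(z), history

text_emb = [0.6, -0.3, 0.7, 0.1, -0.5, 0.2]  # made-up "text embedding"
image, history = generate_from_text(text_emb)
print(f"start {history[0]:.3f}, final {history[-1]:.3f}, steps {len(history) - 1}")
```

Only the latent changes during the loop; the generator and the scorer stay fixed, which mirrors how VQGAN+CLIP steers a pretrained VQGAN with a pretrained CLIP instead of training either one.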

I think this tool will generate a huge amount of creative energy, and it's just the beginning.

This is the best explanation I can offer; you are welcome to consult the references below.

Like my work? Don't forget to clap to show your support, and let me know you are with me on this creative journey. Let's keep this enthusiasm going together!
