Let's geek and art.

Performance analysis of mmap and disk I/O in different situations

Taken literally, mmap (memory-mapped file) seems to avoid the expensive overhead of performing disk I/O on every access, improving disk I/O efficiency by using main memory as a cache.

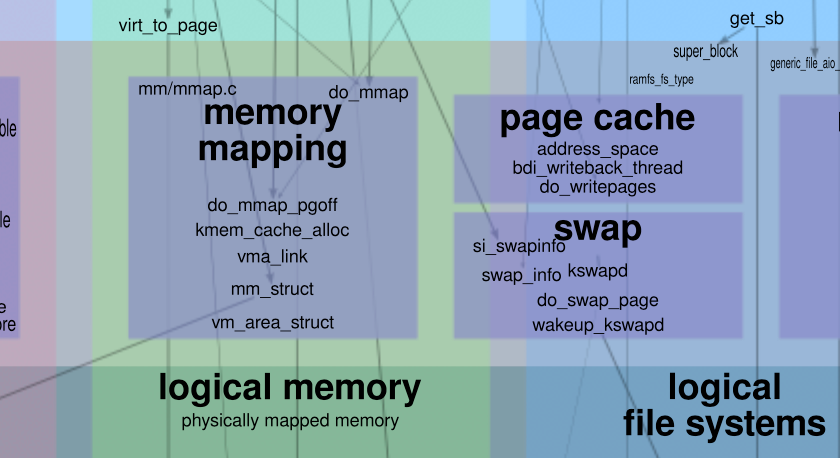

But this understanding is mistaken: the OS (operating system) already provides a mechanism for disk I/O, the page cache, that implements exactly this kind of main-memory cache optimization.

This naturally raises further questions. Why does mmap exist at all? What optimizations does it offer? In which scenarios does mmap make a program faster, and in which does it add overhead instead?

As noted above, because the page cache exists, the caching that mmap provides cannot by itself explain any performance advantage over ordinary disk I/O. We therefore need to examine the data pipeline of each mechanism separately.

With mmap, the system call mmap() maps a file on disk (or a region of it) into the process's virtual address space. Note that mmap relies on lazy allocation (demand paging): no physical memory is assigned when the mapping is created. Only when an address in the mapping is accessed for the first time does the CPU raise a page fault, and the kernel's fault handler then maps in a physical page holding the file's data (reading it from disk if it is not already in the page cache).

In other words, every newly mapped region of the file triggers a page fault the first time it is accessed. Page faults deserve separate mention because they are usually expensive, often more expensive than the user/kernel data copy discussed below.
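
The lazy mapping and the first-touch page fault can be observed directly. The sketch below is a minimal Python illustration, assuming a Linux or other Unix system where `resource.getrusage()` reports page-fault counters (`ru_minflt`, `ru_majflt`); the function names and the 4 MiB test file are hypothetical choices. Creating the mapping should cost few or no faults, while touching one byte per page triggers them. Exact counts vary by kernel (Linux, for example, may map several neighboring pages per fault), so only the relative difference is meaningful.

```python
import mmap
import resource
import tempfile

PAGE = mmap.PAGESIZE


def page_faults() -> int:
    """Minor + major page faults taken by this process so far."""
    ru = resource.getrusage(resource.RUSAGE_SELF)
    return ru.ru_minflt + ru.ru_majflt


def lazy_mapping_demo(size: int = 4 * 1024 * 1024) -> tuple[int, int]:
    """Return (faults caused by mmap() itself, faults caused by first touches)."""
    with tempfile.TemporaryFile() as f:
        f.write(b"x" * size)
        f.flush()  # make sure the file really is `size` bytes long

        before = page_faults()
        m = mmap.mmap(f.fileno(), size, access=mmap.ACCESS_READ)
        after_map = page_faults()      # mapping exists, no data page touched yet

        for off in range(0, size, PAGE):
            _ = m[off]                 # first access to each page -> page fault
        after_touch = page_faults()
        m.close()
    return after_map - before, after_touch - after_map


if __name__ == "__main__":
    map_faults, touch_faults = lazy_mapping_demo()
    print(f"faults during mmap(): {map_faults}, during first touches: {touch_faults}")
```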

For disk I/O, the OS first allocates a main-memory cache for the disk, the page cache, so that reads and writes can be batched. To the OS, the disk is an external hardware resource that can only be accessed from kernel space, and the page cache talks to the disk directly, so the page cache is a data container that lives in kernel space.

Applications, by contrast, run in user space and can only reach the disk through the system calls read() and write(). Data in kernel space, such as the page cache, must therefore be copied into the application's buffer in user space: read() copies from kernel space --> user space, and write() copies from user space --> kernel space.
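
To make that copy concrete, here is a minimal Python sketch (POSIX assumed; `read_into_user_buffer` and `demo` are hypothetical helpers). Opening the file with `buffering=0` makes each `readinto()` a direct `read()` system call, and the bytearray `buf` is the user-space buffer that receives the copy from the page cache.

```python
import os
import tempfile


def read_into_user_buffer(path: str, offset: int, length: int) -> bytes:
    """Read up to `length` bytes at `offset` using plain read() system calls.

    Each read() makes the kernel copy data from the page cache (kernel space)
    into `buf`, a buffer owned by this process (user space).
    """
    buf = bytearray(length)                    # user-space destination buffer
    view = memoryview(buf)
    done = 0
    with open(path, "rb", buffering=0) as f:   # unbuffered: one read() per readinto()
        f.seek(offset)
        while done < length:
            n = f.readinto(view[done:])        # copy: kernel space --> user space
            if not n:                          # 0 bytes means end of file
                break
            done += n
    return bytes(view[:done])


def demo() -> bytes:
    with tempfile.NamedTemporaryFile(delete=False) as t:
        t.write(b"hello, page cache")
        name = t.name
    try:
        return read_into_user_buffer(name, 7, 4)   # -> b"page"
    finally:
        os.unlink(name)
```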

So, looking only at how data is consumed, that is, at reads, under what circumstances does each of mmap and disk I/O perform better?

(When facing an engineering problem, "which solution is better?" is usually not a good question. A better question is: under what circumstances does each solution perform better?

The latter framing keeps you open-minded and pushes you to understand each solution's use cases, limits, and underlying mechanisms in more depth and detail, instead of blindly praising a solution that is only a compromise suited to one specific situation.

Keep in mind that in engineering there is only ever a trade-off for a specific situation; there is no silver bullet.)

Situation 0x01

Suppose every read brings in new data, that is, disk data that has not been read before. For each piece of new data, mmap must pay the cost of a page fault, and disk I/O must pay the cost of the user/kernel copy performed by read(). Because the data is always new, nothing read earlier can be reused, so both costs are paid again and again on every access.

As mentioned earlier, a page fault is quite expensive, usually more so than a user/kernel copy (see Linus Torvalds's reply on the Linux kernel mailing list: http://lkml.iu.edu/hypermail/linux/kernel/0802.0/1496.html). So in this case every data access is cheaper with disk I/O than with mmap, and disk I/O is clearly the better solution.

Situation 0x02

Building on this, consider a variant of "situation 0x01": instead of reading new data each time, the same disk data is read over and over (assume for now that this data fits in memory; the case where it does not is analyzed later). Let's examine the data pipeline of each mechanism.

For mmap, every access touches the same region of the file, so only the first access pays the page-fault cost; subsequent accesses reuse the data already in memory and become ordinary, much cheaper memory accesses. The cost of N accesses through mmap is therefore: 1 page fault + (N-1) memory accesses.
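
The "1 page fault + (N-1) memory accesses" pattern can be checked with a page-fault counter. In this hypothetical Python sketch (Unix assumed, file size chosen arbitrarily), the first pass over a mapping takes page faults, while later passes over the same, still-resident pages should take essentially none.

```python
import mmap
import resource
import tempfile

PAGE = mmap.PAGESIZE


def page_faults() -> int:
    """Minor + major page faults taken by this process so far."""
    ru = resource.getrusage(resource.RUSAGE_SELF)
    return ru.ru_minflt + ru.ru_majflt


def touch_all_pages(m: mmap.mmap) -> int:
    """Read one byte from every page of `m`; return the page faults this caused."""
    before = page_faults()
    for off in range(0, len(m), PAGE):
        _ = m[off]
    return page_faults() - before


def repeated_access_faults(n_passes: int = 3, size: int = 4 * 1024 * 1024) -> list[int]:
    """Page faults taken by each of `n_passes` reads over the same mapped data."""
    with tempfile.TemporaryFile() as f:
        f.write(b"y" * size)
        f.flush()
        with mmap.mmap(f.fileno(), size, access=mmap.ACCESS_READ) as m:
            return [touch_all_pages(m) for _ in range(n_passes)]


if __name__ == "__main__":
    print(repeated_access_faults())
```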

For disk I/O, every access to the same data calls read() again. (You might object: since it is the same data, why not read it once with disk I/O and keep it in a variable for reuse? First, in some applications you cannot know up front that the data will be reused; second, that is an application-level optimization, and here we focus only on the underlying data flow.) Every access therefore pays a user/kernel copy, so the cost of N accesses is: N user/kernel data copies.

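So once N is large enough for the single page fault to be amortized, that is, once 1 page fault + (N-1) memory accesses costs less than N copies, mmap outperforms disk I/O, and its advantage keeps growing with N.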
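
The comparison can be written as a toy cost model. The sketch below assumes three abstract per-operation costs, `c_fault` for a page fault, `c_copy` for one user/kernel copy and `c_mem` for a plain memory access; these are hypothetical parameters, not measured values. mmap wins once c_fault + (N-1)·c_mem < N·c_copy, i.e. once N > (c_fault - c_mem) / (c_copy - c_mem), which only has a solution when a copy costs more than a memory access.

```python
def mmap_cost(n: int, c_fault: float, c_mem: float) -> float:
    """N accesses via mmap: 1 page fault, then (N-1) plain memory accesses."""
    return c_fault + (n - 1) * c_mem


def read_cost(n: int, c_copy: float) -> float:
    """N accesses via read(): one user/kernel copy each time."""
    return n * c_copy


def break_even_n(c_fault: float, c_copy: float, c_mem: float) -> int:
    """Smallest N for which mmap is strictly cheaper than read()."""
    if c_copy <= c_mem:
        raise ValueError("mmap never catches up unless a copy costs more than a memory access")
    n = 1
    # Each extra access widens read()'s cost by (c_copy - c_mem), so this terminates.
    while mmap_cost(n, c_fault, c_mem) >= read_cost(n, c_copy):
        n += 1
    return n


if __name__ == "__main__":
    # Hypothetical costs in arbitrary units: one page fault worth five copies.
    print(break_even_n(c_fault=10.0, c_copy=2.0, c_mem=0.5))
```

With these made-up numbers the script prints 7: a page fault that costs five copies needs seven accesses before mmap pays off, which is why "N >= 2" alone is not a sufficient condition.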

Situation 0x03

Next, consider a variant of "situation 0x02": the same data is read repeatedly, but very infrequently, say once a day.

On the surface, this case goes through exactly the same data pipeline as "situation 0x02". However, memory is a precious resource and the OS manages it accordingly: when a block of memory has not been used for a long time, the OS swaps it out (evicts it) to disk and hands the freed memory to other tasks.

Here, the mapped region is evicted every time because it goes unused for so long. When it is needed again, it must be reloaded into memory, which again goes through a page fault. "Situation 0x03" is thus equivalent to "situation 0x01": every access costs mmap a page fault and costs disk I/O a user/kernel copy. Naturally, disk I/O becomes the better choice.
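
Eviction can be imitated on Linux to watch the page fault come back. The sketch below uses `mmap.madvise(mmap.MADV_DONTNEED)` (Python 3.8+, Linux assumed) as a stand-in for the OS reclaiming idle pages; it only drops this process's page-table entries, so the data stays in the page cache and the refault is a cheap minor fault. A real eviction would also force a disk read, which makes the effect described above stronger, not weaker. Function names are hypothetical.

```python
import mmap
import resource
import tempfile

PAGE = mmap.PAGESIZE


def page_faults() -> int:
    """Minor + major page faults taken by this process so far."""
    ru = resource.getrusage(resource.RUSAGE_SELF)
    return ru.ru_minflt + ru.ru_majflt


def touch_all_pages(m: mmap.mmap) -> int:
    """Read one byte from every page of `m`; return the page faults this caused."""
    before = page_faults()
    for off in range(0, len(m), PAGE):
        _ = m[off]
    return page_faults() - before


def refault_after_drop(size: int = 4 * 1024 * 1024) -> tuple[int, int, int]:
    """Faults for (first touch, warm re-touch, re-touch after dropping the pages)."""
    with tempfile.TemporaryFile() as f:
        f.write(b"z" * size)
        f.flush()
        with mmap.mmap(f.fileno(), size, access=mmap.ACCESS_READ) as m:
            first = touch_all_pages(m)
            warm = touch_all_pages(m)
            # Stand-in for the OS reclaiming idle pages (Linux): drop this
            # process's page-table entries for the whole mapping.
            m.madvise(mmap.MADV_DONTNEED)
            dropped = touch_all_pages(m)
    return first, warm, dropped
```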

Situation 0x04

Finally, consider a subtler variant of "situation 0x02": the same data is read repeatedly, but the data is larger, or memory pressure is higher, so that the OS swaps out part of the memory.

Now the data can only be brought into memory in batches for access. The cost here is subtler: depending on memory pressure, pages that were swapped out will from time to time trigger further page faults in mmap.

Compared with disk I/O, which pays a user/kernel copy on every access, which cost is larger? There is no general answer; it depends on the specific hardware and the memory pressure at runtime:

- When the cost of these intermittent page faults exceeds the per-access user/kernel copy cost, disk I/O is the better choice;

- When the intermittent page-fault cost is lower than the user/kernel copy cost, mmap is the better choice.
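
The trade-off in the two bullets above can be sketched as a per-access cost comparison. Assume a hypothetical fraction `refault_rate` of accesses land on a page that was swapped out and must be faulted in again, with abstract per-operation costs `c_fault` (page fault), `c_copy` (user/kernel copy) and `c_mem` (memory access); all of these are assumptions, not measurements. mmap wins while refault_rate·c_fault + c_mem < c_copy.

```python
def mmap_cost_per_access(refault_rate: float, c_fault: float, c_mem: float) -> float:
    """Average mmap cost per access when a fraction `refault_rate` of
    accesses must fault an evicted page back in."""
    return refault_rate * c_fault + c_mem


def better_choice(refault_rate: float, c_fault: float, c_copy: float, c_mem: float) -> str:
    """'mmap' if its average per-access cost beats one read() copy, else 'disk I/O'."""
    if mmap_cost_per_access(refault_rate, c_fault, c_mem) < c_copy:
        return "mmap"
    return "disk I/O"


def break_even_refault_rate(c_fault: float, c_copy: float, c_mem: float) -> float:
    """Refault rate above which disk I/O becomes the cheaper option."""
    return (c_copy - c_mem) / c_fault
```

The catch, as the text says, is that `refault_rate` depends on runtime memory pressure and the costs on the hardware, so the inputs themselves have to be measured.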

In summary, whether mmap or disk I/O has the lower cost depends on two parts:

- Part I: the page-fault cost of mmap's "first" access.

- Part II: the user/kernel copy cost of "every" disk I/O access.

The way to reduce Part I is to reuse data that is already in memory while keeping it from being swapped out through infrequent use or memory pressure, since eviction brings the page-fault cost back.

However, when it is not clear whether Part I or Part II is larger, the only guide is empirical data from repeated measurements; there is no general, definitive theory.
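
The kind of repeated measurement meant here can be sketched as a small harness. This Python version (POSIX assumed; `bench` and its parameters are hypothetical) re-reads the same file region `n_repeats` times through `os.pread()` and through an mmap. In Python both paths also pay interpreter overhead and allocate a new bytes object per chunk, so the absolute numbers say little; the point is the shape of the experiment, which should be repeated on the target hardware, under realistic memory pressure, and ideally in a lower-level language.

```python
import mmap
import os
import tempfile
import time


def bench(n_repeats: int = 200, size: int = 1 << 20, chunk: int = 1 << 16) -> dict[str, float]:
    """Seconds spent re-reading the same `size` bytes `n_repeats` times,
    once via pread() and once via an mmap of the same file."""
    with tempfile.TemporaryFile() as f:
        f.write(os.urandom(size))
        f.flush()
        fd = f.fileno()

        t0 = time.perf_counter()
        for _ in range(n_repeats):
            for off in range(0, size, chunk):
                os.pread(fd, chunk, off)      # system call + kernel -> user copy
        t_read = time.perf_counter() - t0

        with mmap.mmap(fd, size, access=mmap.ACCESS_READ) as m:
            t0 = time.perf_counter()          # includes the first-pass page faults
            for _ in range(n_repeats):
                for off in range(0, size, chunk):
                    m[off:off + chunk]        # copies out of the mapping, no system call
            t_mmap = time.perf_counter() - t0
    return {"read": t_read, "mmap": t_mmap}


if __name__ == "__main__":
    for trial in range(5):                    # repeat: single runs are noisy
        print(trial, bench())
```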

Remarks

1. Some sources claim that Kafka's high throughput comes from its use of mmap. This is incorrect: Kafka only memory-maps its log index files, not the log files that store the actual data.

From the discussion above, if the log files were memory-mapped, page faults would be triggered continually during writes, making them far less efficient than disk I/O. If anything explains the high throughput of Kafka's persistence layer, it is the OS page cache, which makes ordinary disk I/O efficient; mmap would have the opposite effect here.

Thanks to the page cache, ordinary disk I/O is the better solution in most cases.
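
The two write paths can be contrasted with a small sketch. This is not Kafka's code (Kafka runs on the JVM); it is a hypothetical Python illustration, Unix assumed, in which the same records are appended once with `os.write()` and once through a shared mapping. Writing through the mapping requires growing the file with `os.ftruncate()` first, and each newly written page takes a page fault, which the function reports.

```python
import mmap
import os
import resource
import tempfile


def page_faults() -> int:
    """Minor + major page faults taken by this process so far."""
    ru = resource.getrusage(resource.RUSAGE_SELF)
    return ru.ru_minflt + ru.ru_majflt


def append_with_write(fd: int, records: list[bytes]) -> None:
    """Append-only log via write(): each call copies a record into the page cache."""
    for rec in records:
        view = memoryview(rec)
        while view:                           # write() may in principle be partial
            n = os.write(fd, view)
            view = view[n:]


def append_with_mmap(fd: int, records: list[bytes]) -> int:
    """Write the same records through a shared mapping; return the page faults taken."""
    total = sum(len(r) for r in records)
    os.ftruncate(fd, total)                   # a mapping cannot extend the file by itself
    before = page_faults()
    with mmap.mmap(fd, total) as m:           # MAP_SHARED, read/write
        pos = 0
        for rec in records:
            m[pos:pos + len(rec)] = rec       # first store to each new page faults
            pos += len(rec)
        m.flush()
    return page_faults() - before


def demo() -> tuple[bool, int]:
    records = [b"record-%06d\n" % i for i in range(50_000)]
    with tempfile.TemporaryFile() as a, tempfile.TemporaryFile() as b:
        append_with_write(a.fileno(), records)
        faults = append_with_mmap(b.fileno(), records)
        a.seek(0)
        b.seek(0)
        return a.read() == b.read(), faults
```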

2. A good example of using mmap for optimization is ElasticSearch [1], which uses mmap to store its index [2] (the corresponding files on disk are called segments).

As a search engine, ElasticSearch's most important data structure is the inverted index, which must be persisted to disk [3]. But the inverted index is also used extremely often: every search needs it. Reading it through disk I/O every time would therefore be very inefficient.

ElasticSearch therefore memory-maps its segment files, and its official documentation explicitly recommends disabling OS swapping [4]. From this article's perspective, preventing swapping is essential: once a segment is swapped out to disk, reloading it into memory goes through page faults, which can end up costing far more than ordinary disk I/O would.

3. Besides improving performance for data reuse in specific situations, mmap can also serve as a bridge for inter-process communication. Because the memory backing a shared file mapping is not private to any single process, it becomes data shared among processes.

For the same reason, viewed as a cache, the mapped data is not invalidated when a particular application restarts (its process is killed and relaunched), which saves the cost of warming the cache up again from cold.
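
A shared file mapping used as an inter-process channel can be sketched with `os.fork()` (Unix only; the function name is hypothetical). The child creates its own mapping of the same file and writes into it; the parent, through a separate mapping, sees the bytes without any read() call, because both `MAP_SHARED` mappings are backed by the same page-cache pages.

```python
import mmap
import os
import tempfile


def share_through_file_mapping(message: bytes) -> bytes:
    """A child process writes `message` into a shared mapping of a file;
    the parent reads it back through its own mapping of the same file."""
    size = mmap.PAGESIZE
    if len(message) > size:
        raise ValueError("message must fit in one page")
    with tempfile.TemporaryFile() as f:
        os.ftruncate(f.fileno(), size)
        with mmap.mmap(f.fileno(), size, flags=mmap.MAP_SHARED) as parent_view:
            pid = os.fork()
            if pid == 0:
                # Child: map the same file independently and write into it.
                status = 1
                try:
                    with mmap.mmap(f.fileno(), size, flags=mmap.MAP_SHARED) as child_view:
                        child_view[:len(message)] = message
                    status = 0
                finally:
                    os._exit(status)          # skip the parent's cleanup handlers
            _, wait_status = os.waitpid(pid, 0)   # wait until the child has written
            if os.WEXITSTATUS(wait_status) != 0:
                raise RuntimeError("child failed to write")
            return parent_view[:len(message)]
```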


References

[1] ElasticSearch reference (6.3): https://www.elastic.co/guide/en/elasticsearch/reference/6.3/index.html

[2] Using mmap to store the index: https://www.elastic.co/guide/en/elasticsearch/reference/6.3/vm-max-map-count.html

[3] Persisting the index to disk: https://www.yuque.com/terencexie/geekartt/es-index-store

[4] Disabling OS swapping: https://www.elastic.co/guide/en/elasticsearch/reference/6.3/setup-configuration-memory.html
