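An open and reproducible "smallholder farmer" of the Chinese-language academic world. I practice openness within my research and actively promote it outside it. I believe that in an era of overwhelming, chaotic information, everyone needs the ability to "cook" information for themselves.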

Teaching assignment: Using simulation to assess the evidentiary strength of small-sample studies

Assignment goals

Small-sample studies with eye-catching titles fill many scientific literatures. Now that most scientists recognize the reproducibility crisis (Baker 2016), learning to evaluate the strength of a study's evidence from the statistics it reports helps researchers read papers more critically and, in turn, design more rigorous studies of their own.

This article uses the R package faux (DeBruine 2021), developed by Professor DeBruine of the Department of Psychology, University of Glasgow, UK, to simulate experimental data from the weight-loss statistics reported by Panayotov (2019), estimate the statistical power of the study, and thereby evaluate the strength of its evidence.

Experimental parameters

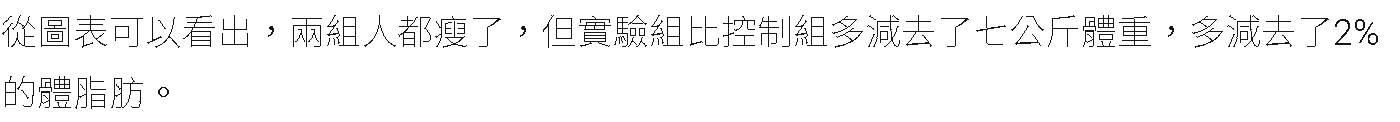

Panayotov (2019) is an open-access paper published under a Creative Commons license. It is unclear whether the study was preregistered, and the raw data were not provided. The author tested 14 adults whose BMI fell in the obese range and assigned them to an experimental group and a control group of 7 people each. Both groups took an eight-week weight-control program with the same diet menu and the same exercise schedule. The experimental group was told that the menu was a low-calorie diet, while the control group was given accurate information about it. The author expected the experimental group to lose about 6 kg over the eight weeks ("Theoretically this should cause a weight loss of about 6 kg in 8 weeks.").

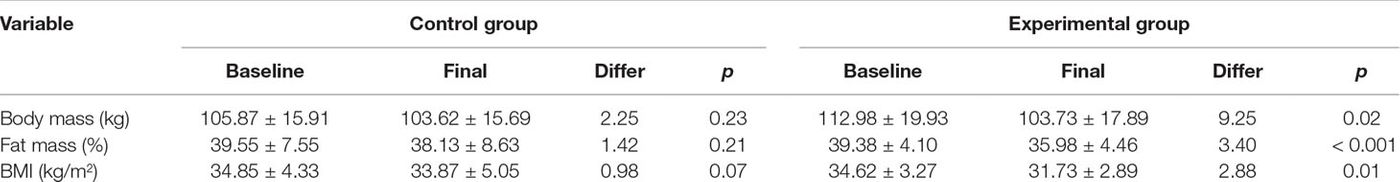

Panayotov's outcome measures were body weight (kg), body fat percentage (%), and BMI (kg/m²), each measured at the start (baseline) and the end (final) of the program. The weight-loss effect was tested with t-tests comparing the two groups on each measure at both time points, at a significance level of 0.05. The paper does not state whether the tests were one-tailed or two-tailed. The reported statistics are shown in the accompanying table.

Simulated data test

The weight-loss effect is the pre-to-post difference in the three measures within the experimental group, so we take the statistics reported in Table 2 of the original paper and use faux to generate simulated data.

We first use body weight to demonstrate how to create the simulated data. The pre- and post-test measurements come from the same participants, so they are correlated, and the simulation must specify that correlation. A common default is 0.5, meaning that the measurements at the two time points are neither fully independent nor perfectly correlated.

(See the original blog post for the simulation code and output.)
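The author's R code is not reproduced here. As a rough illustration of the same idea, the following Python sketch (not the author's code, and not faux's API) draws correlated pre/post pairs for 7 participants, runs a paired t-test on each simulated study, and reports the share of significant results as the estimated power. All numeric inputs (pre mean 100 kg, post mean 91 kg, SD 15 kg) are hypothetical placeholders, not the values in Panayotov's Table 2; substitute the reported means and SDs to reproduce the analysis. The critical t values are the standard table values for df = 6 at alpha = 0.05, which is why the sketch is restricted to n = 7.

```python
import math
import random
import statistics

# Standard-table critical t values for df = 6 (n = 7 pairs), alpha = 0.05.
T_CRIT_DF6 = {"two-tailed": 2.446912, "one-tailed": 1.943180}


def simulate_pre_post(n, pre_mean, post_mean, sd_pre, sd_post, r, rng):
    """Draw n (pre, post) pairs from a bivariate normal with correlation r."""
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0, 1)
        z2 = rng.gauss(0, 1)
        pre = pre_mean + sd_pre * z1
        # Mixing z1 and z2 like this gives corr(pre, post) = r.
        post = post_mean + sd_post * (r * z1 + math.sqrt(1 - r ** 2) * z2)
        pairs.append((pre, post))
    return pairs


def paired_t(pairs):
    """Paired t statistic for the mean of (pre - post)."""
    diffs = [pre - post for pre, post in pairs]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se


def estimate_power(n_sims=1000, n=7, pre_mean=100.0, post_mean=91.0,
                   sd_pre=15.0, sd_post=15.0, r=0.5, tails="two-tailed", seed=1):
    """Share of simulated studies whose paired t-test is significant.

    All default values are hypothetical placeholders, not the paper's data.
    """
    if n != 7:
        raise ValueError("the critical values above are for n = 7 only")
    rng = random.Random(seed)
    crit = T_CRIT_DF6[tails]
    hits = 0
    for _ in range(n_sims):
        t = paired_t(simulate_pre_post(n, pre_mean, post_mean,
                                       sd_pre, sd_post, r, rng))
        # Two-tailed: |t| beyond the cutoff.
        # One-tailed: t beyond the cutoff in the predicted (weight-loss) direction.
        stat = abs(t) if tails == "two-tailed" else t
        if stat > crit:
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    print("two-tailed:", estimate_power(r=0.5))
    print("one-tailed:", estimate_power(r=0.5, tails="one-tailed"))
```

Because the paper does not say whether its tests were one- or two-tailed, the sketch exposes both cutoffs; the one-tailed test will usually report somewhat higher power for the same data.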

The estimated power is about 0.189. In other words, if the pre- and post-course measurements are neither fully independent nor perfectly correlated, then a new group of participants with the same characteristics taking the same program would have only about a 20% chance of producing a statistically significant weight loss of 9 kg.

Power estimates for the weight-loss effect

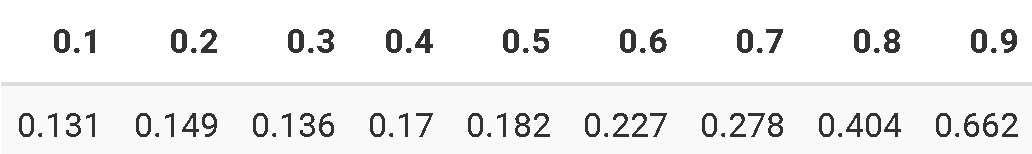

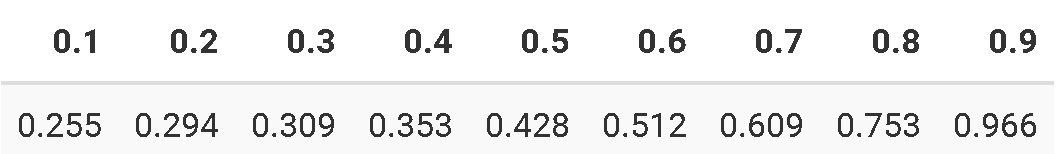

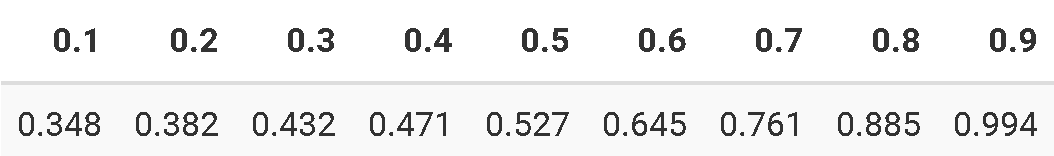

Because we do not know how strongly the pre- and post-course measurements are correlated, we extend the method shown above: for each of nine correlation values (0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, and 0.9) we generate 1,000 simulated data sets, and then tally the power for each outcome measure.
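The correlation sweep can be sketched in the same hypothetical Python setting: the single-r simulation is repeated for r = 0.1 to 0.9 with 1,000 simulated studies each. The means and SD below are placeholders, not Panayotov's figures; in practice each of the three measures (weight, body fat percentage, BMI) would be run separately with its own Table 2 values. The cutoff is the two-tailed table value for df = 6, so n is fixed at 7.

```python
import math
import random
import statistics

N = 7                # participants in the experimental group
T_CRIT = 2.446912    # two-tailed critical t, alpha = 0.05, df = N - 1 = 6


def power_at(r, n_sims=1000, pre_mean=100.0, post_mean=91.0, sd=15.0, seed=2021):
    """Estimated paired t-test power at pre/post correlation r (hypothetical inputs)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        diffs = []
        for _ in range(N):
            z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
            pre = pre_mean + sd * z1
            post = post_mean + sd * (r * z1 + math.sqrt(1 - r ** 2) * z2)
            diffs.append(pre - post)
        t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(N))
        if abs(t) > T_CRIT:
            hits += 1
    return hits / n_sims


def power_by_correlation(rs=None, **kwargs):
    """Map each correlation in rs (default 0.1, ..., 0.9) to its estimated power."""
    rs = rs or [round(0.1 * k, 1) for k in range(1, 10)]
    return {r: power_at(r, **kwargs) for r in rs}


if __name__ == "__main__":
    for r, p in power_by_correlation().items():
        print(f"r = {r:.1f}: power = {p:.3f}")
```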

Weight

For body weight, the simulations show that even when the pre/post correlation reaches 0.9, the probability of obtaining a significant result in a replication under the same conditions is only 66%. Note that such a high correlation means the two measurements are far from independent.

Body fat percentage

For body fat percentage, the simulations show that when the pre/post correlation reaches 0.9, the probability of obtaining a significant result in a replication under the same conditions can reach 95%. Again, such a high correlation means the two measurements are far from independent.

BMI

For BMI, the simulations show that when the pre/post correlation reaches 0.9, the probability of obtaining a significant result in a replication under the same conditions can reach 95%. Here too, such a high correlation means the two measurements are far from independent.
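Why power rises so steeply with the correlation can be seen from the standard identity for the standard deviation of paired differences. This is a textbook formula added for illustration, not one stated in the source: a higher correlation ρ shrinks σ_D, which enlarges the within-subject effect size d_z and the noncentrality δ of the paired t-test with n − 1 degrees of freedom.

```latex
\sigma_D = \sqrt{\sigma_{\text{pre}}^2 + \sigma_{\text{post}}^2 - 2\rho\,\sigma_{\text{pre}}\,\sigma_{\text{post}}},
\qquad
d_z = \frac{\mu_{\text{pre}} - \mu_{\text{post}}}{\sigma_D},
\qquad
\delta = d_z\sqrt{n}

\text{If } \sigma_{\text{pre}} = \sigma_{\text{post}} = \sigma:\quad
\sigma_D = \sigma\sqrt{2(1-\rho)},
\qquad
\rho = 0.5 \Rightarrow \sigma_D = \sigma,
\qquad
\rho = 0.9 \Rightarrow \sigma_D = \sigma\sqrt{0.2} \approx 0.447\,\sigma
```

So moving from ρ = 0.5 to ρ = 0.9 makes the same mean loss look about √5 ≈ 2.24 times larger in standardized terms, which is why the assumed correlation matters so much for a sample of 7.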

Where this case came from

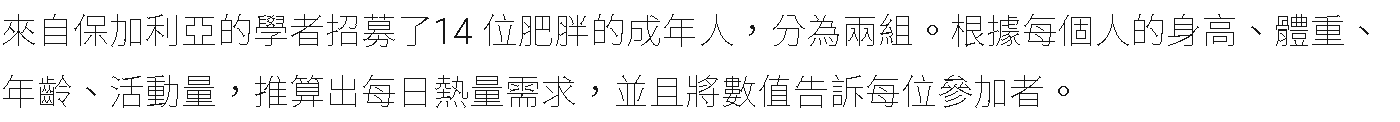

On 2021/6/3, I learned from the Facebook fan group of Wow Sai Psychology that Dr. Wang Siheng had published an article in Tianxia Magazine titled "Scientifically proven: can you lose weight just by thinking about it?" The article is adapted from the first chapter of Dr. Wang's new book, One Minute Fitness and Slimming Classroom (2), released the following day. I noticed that its description of the study fits the profile of a "surprising but fragile small study." The relevant passage is shown in the screenshot.

Issues to think about

Ritchie (2020) points out that today's researchers face a variety of real-world incentives that pull them away from the spirit of science: producing research reports is no longer motivated simply by expanding human knowledge. Such incentives often lead to biased research strategies, the most extreme being misconduct such as fraud and plagiarism. Far more researchers adopt the strategy of designing small-sample studies that can yield novel findings, and today's peer-review system and research funders give studies such as Panayotov (2019) the opportunity to be published.

Research topics chosen to cater to public tastes are another powerful source of bias. Singal (2021) notes that books on the theme of self-fulfilling prophecy account for nearly US$13.2 billion in the US book market. Psychology and sociology researchers working on such topics find it relatively easy to obtain funding and media exposure, and calls to scrutinize the quality of the research are rarely heard in these arenas.

Taiwan's best-seller market shows a similar pattern. Authors who get the chance to write and publish collect such research findings and use them as selling points for their books. Compared with the United States, however, Taiwan's media have yet to develop a steady voice offering opposing views, and a culture of dialogue and self-reflection has not yet formed. This article uses a single case to point out the hidden problems. Beyond hoping that the authors of such books, and the media that help promote them, will choose the research they cite more carefully, I also hope that more readers will develop the ability to judge evidence for themselves: just as researchers shape their research strategies around the preferences of journals and funders, best-selling authors study readers' preferences and write to match the level of their readers.

Advice for readers

Although most readers lack full professional training, when they read popular-science articles about psychological effects, they can judge whether the research cited by the author offers credible evidence by watching for the following features.

- Fewer than a hundred participants. Studies that emphasize psychologically induced effects usually overestimate their effect sizes, and a sample of at least a hundred people is needed for even minimal evidentiary strength.

- More than one outcome measure is reported. Studies whose procedures have been consistently replicated usually focus on changes in only a few measures, whereas small-sample or first-attempt studies often collect many different measures.

- Are the original paper and its data publicly available? Without public data, small-sample studies are open to manipulation: measurements that do not support the hypothesis can be quietly left out, a research strategy known as cherry picking. When the data are not public, we can reasonably doubt the study's evidentiary strength.
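The first two warning signs above can be made concrete with two standard back-of-the-envelope calculations, added here for illustration rather than taken from the source: the normal-approximation sample size per group for a two-sided, two-sample comparison at 80% power, and the chance of at least one false positive across k tests when no effect exists. The second formula assumes independent tests; closely related outcomes such as weight and BMI are correlated, so the true inflation would be somewhat smaller.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants per group to detect effect size d (two-sided, two-sample)."""
    z = NormalDist()                    # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


def familywise_error(k, alpha=0.05):
    """Chance of at least one false positive across k independent tests with no true effect."""
    return 1 - (1 - alpha) ** k


if __name__ == "__main__":
    for d in (0.8, 0.5, 0.3):
        print(f"d = {d}: about {n_per_group(d)} per group")
    for k in (1, 3, 6):
        print(f"{k} tests: familywise error = {familywise_error(k):.3f}")
```

With these formulas a medium effect (d = 0.5) already needs about 63 people per group, and a small one (d = 0.3) about 175, while three independent tests at alpha = 0.05 carry roughly a 14% chance of at least one spurious "finding".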

References

Baker, Monya. 2016. "1,500 Scientists Lift the Lid on Reproducibility." Nature News 533 (7604): 452. https://doi.org/gdgzjx.

DeBruine, Lisa. 2021. Faux: Simulation for Factorial Designs. Zenodo. https://doi.org/10.5281/zenodo.2669586.

Panayotov, Valentin Stefanov. 2019. "Studying a Possible Placebo Effect of an Imaginary Low-Calorie Diet." Frontiers in Psychiatry 10: 550. https://doi.org/10.3389/fpsyt.2019.00550.

Ritchie, Stuart. 2020. Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth. First edition. New York: Metropolitan Books; Henry Holt and Company.

Singal, Jesse. 2021. The Quick Fix: Why Fad Psychology Can't Cure Our Social Ills.
