Free software worker and citizen journalist who cares about open government and the process by which government policy is formed.

What Elon Musk's acquisition of Twitter reveals about the governance of social platforms

Someone on Twitter tagged Elon Musk, the world's richest man, and asked him: "Would you consider building a new social media platform? One that opens up the source code of its algorithm, makes free speech and adherence to the spirit of free speech a priority, and keeps one-sided political propaganda to a minimum. I think we desperately need such a platform." Elon Musk responded, "Seriously considering it!"

I believe these statements capture what many people expect from a social platform. At present, the "algorithm" is both the core technology and the core secret of every platform: it determines what content we see. If the algorithm's source code were open, we would have a chance to learn why we see certain articles and not others. If a platform abided by the principle of "freedom of speech", we could speak freely without being censored or suspended for no reason. And if the platform minimized political propaganda, we would no longer see political propaganda that we dislike.

Are these three elements achievable? Let's take them one at a time.

Should the algorithm's source code be released? The pros and cons must be weighed

The first element is that the algorithm's source code should be made public. Social platforms originally adopted algorithms to filter articles so that users would be willing to stay. Through training, however, the algorithms found an effective way to retain users: exploit human weaknesses and show users only what they like. This produces an availability bias, in which people mistake the articles they see for a picture of the whole world, and it reinforces the so-called echo chamber effect, in which people keep ignoring any opinion that differs from their own. Many people therefore believe that such algorithms should be made public so that everyone can choose whether to use them.

Algorithms are tied to the commercial interests of many platforms, because they shape what users see and can make paid content more visible, so platforms do have an incentive not to disclose them. On the whole, though, I still lean toward disclosure. Once an algorithm is published, everyone can find out why certain information is pushed to the top and what information has been left out, and users may even be able to choose the algorithm they prefer.

However, public algorithms carry other risks that I think should be taken into account. The first is that disclosure lets content farm operators target loopholes in the algorithm and spread their content everywhere. It is also conceivable that totalitarian countries would use the same loopholes to push content meant to influence elections in democracies.

Content farms and advertisers have been studying how to exploit algorithms for exposure ever since Google began using algorithms to rank search results. In the earliest days, Google looked at how often keywords appeared on a page. As a result, people published webpages stuffed with repeated keywords, and searches turned up entire articles that were meaningless but scored highly under the algorithm. In other words, if a public algorithm has loopholes, social platforms and search engines will inevitably be targeted by content farms, and the results will fill up with content farm articles and advertisements. Google now explains several important ranking factors, but it still does not disclose the complete algorithm.
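To make the keyword-stuffing problem concrete, here is a minimal Python sketch. It assumes a deliberately naive ranking rule that scores a page purely by how many times the query term appears. The function name `keyword_score` and the sample pages are hypothetical illustrations, not Google's actual algorithm.

```python
import re


def keyword_score(page_text: str, keyword: str) -> int:
    """Naive relevance score: count whole-word occurrences of the keyword.

    Hypothetical, deliberately simplistic rule for illustration only;
    it is not any real search engine's ranking function.
    """
    words = re.findall(r"\w+", page_text.lower())
    return words.count(keyword.lower())


# Hypothetical sample pages: one real article, one keyword-stuffed page.
pages = {
    "honest_article": "A short guide to choosing a bicycle for commuting in the city.",
    "stuffed_page": "bicycle " * 50 + "buy now",
}

# Rank pages by score, highest first.
ranking = sorted(
    pages,
    key=lambda name: keyword_score(pages[name], "bicycle"),
    reverse=True,
)
print(ranking)
```

Under this rule the meaningless stuffed page outranks the real article, which is the kind of loophole a fully public ranking rule would hand to content farms.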

Furthermore, many people's idea of a "public algorithm" may be too naive. The algorithm that ultimately decides which information is spread and which is downranked is produced by machine learning, and the training material is the massive amount of data on the platform. In other words, with only the original source code and no data, it is probably impossible to know what the final algorithm looks like, and sometimes even the platform itself cannot fully grasp it. Facebook once got its algorithm wrong, which caused fake news to be promoted instead.

Moreover, many aspects of disclosure still need to be discussed. Under what circumstances should an algorithm be disclosed? To whom, and in how much detail? If a published algorithm is used maliciously, but the malicious use makes the platform money, what should the platform do? If the platform chooses not to act, do users have a channel for redress? These questions need careful discussion.

Of course, disclosure also allows the public to work together to strengthen the algorithm. But which will prevail: public collaboration or exploitation by content farms? A vulnerability in an open source program may be exploited by attackers, and it may equally be fixed by other developers. After an algorithm is opened up, it is therefore uncertain which side will win. Still, given my personal belief in open source, I tend to think that publishing the algorithm's source code and letting everyone discuss it is the better way to encourage public attention and collaboration.

Can a platform uphold freedom of speech and still reduce political propaganda?

The second element, "observing freedom of speech", should be viewed alongside the third, "minimizing political propaganda". These two elements are in fact in conflict: speech that one group regards as protected by freedom of speech is regarded by another group as political propaganda that should be downranked.

Why do people hold two conflicting demands? I think this is the paradox of today's social network users. People are unhappy when their own speech is restricted, yet they also find some speech distasteful and hope never to see it again. This is the core question every social platform runs into: which speech should be banned, which should be allowed, and what measures should apply to everything in between?

Some speech must be dealt with

First of all, freedom of speech does not mean that you bear no responsibility after posting. Guaranteeing freedom of speech means that speech is not reviewed before it is published. Once speech causes harm, however, the speaker should bear the corresponding responsibility and may be penalized or even prosecuted for it. This is not an infringement of freedom of speech; it requires people to take responsibility for what they say. Of course, any penalty should comply with the principle of proportionality, the person concerned should be clearly told the reasons and grounds for it, and an appropriate channel for redress should be provided.

"Unrestricted freedom of speech" that never deletes anything is not feasible. In the most extreme cases, such as child pornography or intimate images shared without the consent of the people in them, the victims are harmed every time the material circulates. I think most people agree that such posts must be deleted so that they are not spread further and do not cause more harm. Precisely because some posts damage the reputation of the people involved or defraud others, not all speech should be protected, and there must be a mechanism for taking content down.

Some conspiracy theories, if not dealt with properly, can also spread on a platform and cause harm. The famous Pizzagate conspiracy theory is an example. In October 2016, some people began spreading the claim that several top U.S. Democrats were involved in human trafficking and child pornography. These conspiracy theorists claimed that children were being hidden in the basement of the pizzeria Comet Ping Pong, which exposed Comet Ping Pong and other restaurants to harassment. After the theory spread among conspiracy theorists on platforms such as 4chan, 28-year-old Edgar Maddison Welch walked into Comet Ping Pong with a gun in December of that year to look for the kidnapped children in the basement, only to find, absurdly, that the restaurant has no basement at all. In other words, the continuous spread of unchecked conspiracy theories can create a perception detached from reality and thus lead to violence.

Truth has limits, but lies have none. Many articles are sensational precisely because their content is false or extreme, which makes them more striking than a factual account; they then attract more readers, are favored by the algorithm, and spread widely. Page 399 of the book "Making the Truth" quotes Facebook CEO Mark Zuckerberg as saying that on social platforms, the more extreme and sensational an article is, the more it attracts people and the more likely they are to share it. If nothing is done, facts will hardly be able to compete with the extreme, sensational rhetoric that floods the platform.

Facebook's algorithm struggles to understand the ambiguity of human language

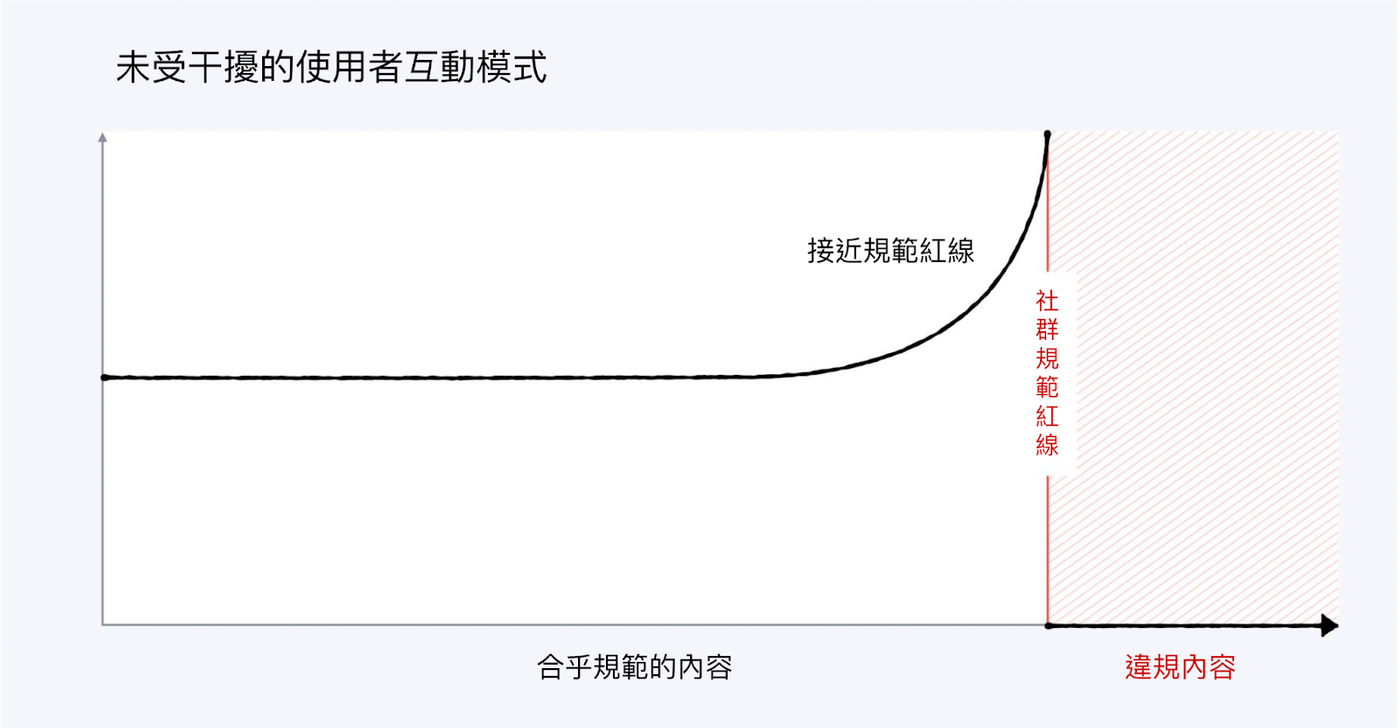

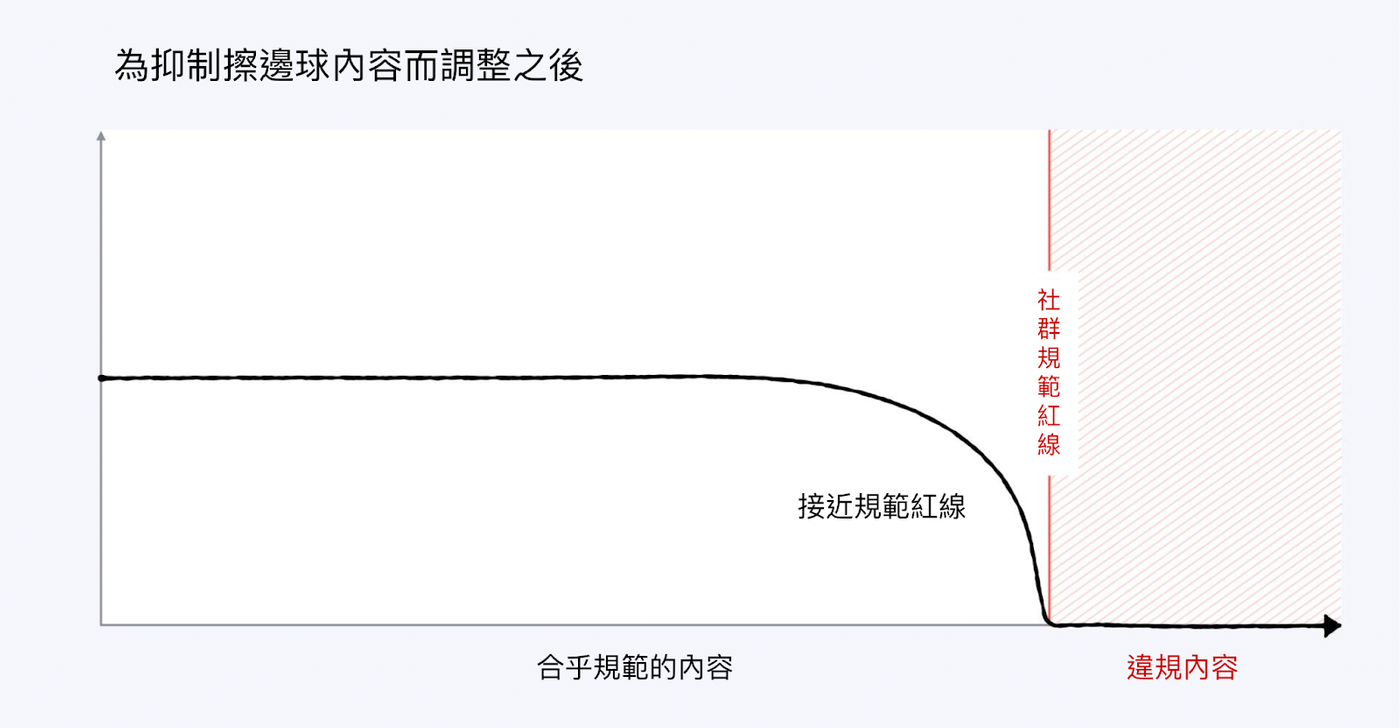

Facebook tries to use algorithms to solve the problem that extreme, sensational articles are more attractive than facts. Ideally, the more extreme an article is, the more restrictions it should face: it is not deleted, but the algorithm does not promote it. In this way the second and third elements mentioned above are both achieved, since articles are not deleted while political propaganda is kept to a minimum.

However, Facebook's algorithm looks like a questionable attempt. Many people's posts have been judged by the algorithm to violate the rules and hidden, which has caused dissatisfaction. The problem has two sides: the platform and the users. On the platform side, algorithms do seem to be a necessary tool for assisting a small number of human reviewers, since not every post can be reviewed instantly. The question is what the algorithms learn from. If they learn from reviewers with a political stance (for example, reviewers brought in from China), or from a reporting mechanism that lets people mobilize crowds to report posts, they may learn a questionable pattern of review. In addition, human cognition is complex, and some posts are actually irony or are written in the voice of the opposing side. Can algorithms learn such subtle workings of the human mind? It seems difficult. As a result, the combination of algorithms and a flawed review mechanism often leads Facebook to wrongly remove posts that are not actually problematic.

Moreover, after training on a large amount of data the algorithm becomes a black box, which makes it hard for Facebook to say specifically why a post was penalized. Facebook often gives no specific reasons or grounds for its decisions, and it offers no objective third-party audit or channel for redress. This makes it hard for Facebook to convince the public and naturally arouses widespread dissatisfaction.

Users unhappy that their speech is restricted

On the user side, this mechanism may well have handled posts that deserved to be removed, yet users are still dissatisfied. Why? Because the author may not think there is anything wrong with the post, even though it does have a problem: it may promote hatred, or it may have been checked and found untrue, raising concerns about disinformation. In such cases, the person who posted it and those who support it feel aggrieved. Add the algorithm described earlier, and users see only posts close to their own position, so naturally they see only posts from their own side being taken down and conclude that the platform has a political stance.

Such problems also arise in fact-checking. I believe everyone agrees that messages should be dealt with if they may convey misinformation or if their content is unverified or fabricated. However, when a post close to their own position strays from the facts, or a fact-checking organization finds it to be false, many people get angry and believe that the organization has a particular stance. In fact, many fact-checking organizations accredited by the International Fact-Checking Network (IFCN) check claims from all positions, but the checks on some positions are often not seen on social platforms.

From this point of view, many people's dissatisfaction comes down to this: I want to speak without restriction, but I also do not want to see political propaganda that I dislike (because what I like is not political propaganda). As you can imagine, if a social platform really followed this idea, there would be only two possible outcomes. Either the platform (or a given page) becomes a single voice and everyone else leaves, or the platform sustains many separate echo chambers in which people with different views never interact and everyone slowly grows more extreme within their own.

Fake accounts manufacture trends that do not exist, yet they are hard to deal with

In addition to the three points above, there is another troublesome issue: fake accounts. Many democratic countries have no online real-name system, because such a system can easily lead to the suppression of free speech. But this also lets many people operate fake accounts, for example using likes or fake interactions to make algorithms misjudge certain articles as very important, or to mislead other users into thinking that an argument has a large number of supporters. On certain boards of PTT, the long-running BBS, for example, anyone who holds a large number of fake accounts can use "push" comments to promote specific articles and make them appear more popular than others. How should fake accounts be traced and verified? Should they be deleted, or should their weight in discussions be reduced? These are questions that need to be discussed and thought through together.
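The option of reducing the weight of suspicious accounts, rather than deleting them, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Account` class, the idea of using account age as a trust signal, and the `mature_after_days` threshold are all hypothetical, and a real platform would need signals that are much harder to fake.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    age_days: int  # how long the account has existed (hypothetical trust signal)


def raw_popularity(likers: list[Account]) -> float:
    """Every like counts the same, so a crowd of fake accounts wins."""
    return float(len(likers))


def weighted_popularity(likers: list[Account], mature_after_days: int = 365) -> float:
    """Each like counts in proportion to account age, capped at 1.0."""
    return sum(min(a.age_days / mature_after_days, 1.0) for a in likers)


# Hypothetical data: 20 long-standing users versus 100 freshly created accounts.
organic = [Account(f"user{i}", age_days=800) for i in range(20)]
sock_puppets = [Account(f"bot{i}", age_days=3) for i in range(100)]

print(raw_popularity(organic), raw_popularity(sock_puppets))
print(weighted_popularity(organic), weighted_popularity(sock_puppets))
```

With raw counts the fake accounts make their article look five times as popular; with the age weighting their combined influence drops below that of a single established account. The trade-off is that genuine new users are discounted as well, which is part of why the question is hard.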

It's not about freedom of speech, it's about listening

If you really hope that social platforms can become a place for rational discussion, the key is not freedom of speech but listening: actually reading and understanding what the other person means. To talk, listen first. If nobody wants to listen and everybody only wants to talk, the result will be quarrels rather than dialogue. As for the articles or remarks people publish, those who enjoy freedom of speech are also responsible for the content they produce.

How can social platforms encourage listening? I find this difficult myself. To listen, you must be willing to set aside your ego, but in an age where everything is accelerating, few people have the time and room to put down their prejudices and let other people's ideas turn over in their minds. It is not impossible, though. People take in information in two main ways: reading with the eyes and listening with the ears. When you read on the Internet, there is sometimes so much related information that you cannot really focus. Pairing reading with listening can sometimes have a different effect. Perhaps taking in information through a different medium gives people a chance to calm down and opens the way to more listening and dialogue.

Can market mechanisms hold users accountable for their own content? It may be difficult

Being responsible for your own words has two sides: penalties for problematic content and rewards for good content. On the penalty side, in addition to bans by social platforms for spamming false news, real-world judicial mechanisms can prosecute defamation or the dissemination of inappropriate content. I also agree that content that truly should not appear, such as child pornography and non-consensual intimate images, should be banned by law. Beyond that, things get tricky, because there will always be speech in a gray area. In the past the media also ran a lot of gory content to attract readers, but the promotion of media self-regulation later improved the situation to some extent. Perhaps we can invoke a similar spirit and, by promoting digital literacy, help users check and verify content to some degree when they take in and forward information.

On the reward side, some people on Web3 platforms have recently hoped to use a "write good articles, earn income" mechanism to encourage users to post good articles and hold good discussions, thereby creating a healthy environment for discussion. How to build such a mechanism, however, is a big problem. At magazines and newspapers in the past, editors vetted submissions and rewarded writers with fees, a process handled by people in a centralized way. But if you want to build the mechanism into a platform, how do you prevent the fake accounts mentioned earlier from gaming the ratings? How do you keep extreme and emotionally manipulative articles from drawing the most attention? The core idea is to use the market to encourage good content, but what the market likes is not necessarily valuable, and markets sometimes fail. It would be frightening to rely on faith in the market alone without seeing these possible scenarios.

To put it another way, a Write2Earn mechanism already exists on the Internet in the form of content farms: writers produce eye-catching but quite possibly false articles that attract traffic and earn advertising revenue, and the profit is then distributed to the writers who generated the traffic. This mechanism has been abused to produce many fake health articles that harm people's health, and some content farms collude with totalitarian countries to try to shape the political views of people in democracies. Paying for articles can encourage good content, but it more readily encourages "content that draws traffic", which easily becomes a breeding ground for content farms. If writing for income leads to more content farms, I do not think that is an outcome anyone would welcome.

Going further, existing platforms such as Facebook have in effect long been Read2Earn, except that the money goes not to the people who write articles but to the platform itself, which uses its algorithm to keep people on the platform and thereby gains traffic and advertising revenue. The price of the platform's gain is that users' opinions are skewed by the algorithm, which in turn divides society and challenges democracy. Social platforms are profiting while democracy pays the external cost.

Only by starting from people can we build a good platform

By now you should see that operating and regulating social platforms is not as simple as it first seemed. The reason for the complexity is not really digital technology but people themselves. The human brain is biased, and it easily runs into problems when faced with massive amounts of information. At the same time, human beings are by nature animals whose emotions and positions take precedence over reason, and "rational deliberation" runs against human nature. All of this makes platform operation complex and challenging. Under such circumstances, expecting the "free market" to deliver the beautiful environment we imagine is, I think, very likely like climbing a tree to catch fish.

Going back to Elon Musk's recent statements, I think he probably does not yet understand the challenges of running a social platform. A social platform is like a pond: the water should not be too murky, but water that is too clear holds no fish. Each adjustment is like adding something to the pond in an attempt to reach a new balance, and finding that balance is a big challenge. I think that as Elon Musk goes deeper into this issue, he will start to think differently.

Facing the chaos of social platforms, what can we do ourselves? I think the most important thing is to stop always asking "how can social platforms satisfy me" from our own perspective and to ask the reverse instead. What mechanisms can help us let go of our attachments and learn to listen and talk? What mechanisms can help us learn to verify claims and look at different opinions before speaking, and then take responsibility for our own words? If we start from questions like these, perhaps we will have a chance of finding a social platform suited to discussion.

(Thanks to Chen Fangyu for assisting in reviewing this article)
