Personal channel: https://t.me/justAboringchannel | PM-friendly | BUPT. Reading a bit of this and that, leaving a bit behind.

About Machine Learning: An Overview

Essentially, these are my notes on part of the concise neural network tutorial (made by Microsoft Club). (Runs away.)

You have a model. Maybe it is not perfect (it certainly isn't), but you have it, and your purpose is to use it to make predictions or to classify things. So you feed a value into the model (initialization), and that input is computed forward through the model until it produces an output. You also know the correct value that corresponds to the input, and there is obviously a gap between that correct value and the model's output: the loss. You then send this loss back into the model and compute in reverse to fine-tune the model and make it better. That is the process of machine learning.

Take an example:

Purpose: guess the number B is thinking of;

Initialization: A guesses 5;

Forward calculation: each new number that A guesses;

Loss function: B compares A's guess with the number in mind and concludes "too big" or "too small";

Backpropagation: B tells A "too small" or "too big";

Gradient descent: A adjusts the next round's guess based on what B's feedback means.

What is the loss function here? Just "too small" or "a little too big", which is very imprecise! This so-called loss function gives two pieces of information:

1. Direction: too big or too small;

2. Degree: "too" or "a little", but only vaguely.
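To make the guessing game concrete, here is a minimal Python sketch. It assumes (hypothetically; the text only says A starts at 5) that B's number is an integer from 1 to 100 and that B reports only the direction of the miss. With direction but no degree, a reasonable strategy for A is to halve the remaining range each round, i.e. binary search.

```python
def b_feedback(guess: int, secret: int) -> str:
    """B's 'loss function': reports only the direction of the miss, not its size."""
    if guess > secret:
        return "too big"
    if guess < secret:
        return "too small"
    return "correct"


def play(secret: int, first_guess: int = 5, low: int = 1, high: int = 100) -> list[int]:
    """A keeps guessing, narrowing the assumed range [low, high] from direction alone."""
    guesses = []
    guess = first_guess  # initialization: A starts with 5
    while True:
        guesses.append(guess)               # forward calculation: make a guess
        answer = b_feedback(guess, secret)  # B compares and reports back
        if answer == "correct":
            return guesses
        # Adjust the next guess in the reported direction; with no size
        # information, jumping to the middle of what is left is the best A can do.
        if answer == "too big":
            high = guess - 1
        else:
            low = guess + 1
        guess = (low + high) // 2


print(play(secret=37))  # → [5, 53, 29, 41, 35, 38, 36, 37]
```

Even for a simple target it takes 8 rounds, because each answer only halves the uncertainty; feedback that also reported the degree of the miss would let A jump much closer each time.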

Or take the example of a black box:

- Purpose: Guess an input value such that the output of the black box is 4;

- Initialization: input 1;

- Forward calculation: the mathematical logic inside the black box;

- Loss function: at the output, subtract 4 from the output value;

- Backpropagation: Tell the guesser the difference, including the sign and value;

- Gradient descent: On the input side, the next guess is determined based on the sign and value.
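A minimal Python sketch of this black-box game. The box's internals are an assumption for illustration: it borrows $z = x + x^2$, the formula the text assumes next. The guesser only ever sees the signed difference `output - 4` and moves the input against it, scaled by a hypothetical step size `step = 0.1`. Moving against the difference works here because this particular box's output grows with $x$ for $x > -0.5$.

```python
def black_box(x: float) -> float:
    # Hypothetical internals; the guesser never looks inside.
    return x + x**2


def crack_input(target: float = 4.0, x: float = 1.0, step: float = 0.1,
                tol: float = 1e-3, max_rounds: int = 1000) -> float:
    """Guess an input whose output is `target`, starting from input 1."""
    for _ in range(max_rounds):
        output = black_box(x)     # forward calculation inside the box
        diff = output - target    # loss: output minus 4, with sign and size
        if abs(diff) < tol:
            break
        # Output too big -> lower the input; too small -> raise it,
        # and by an amount proportional to how far off it was.
        x -= step * diff
    return x


x = crack_input()
print(round(x, 3), round(black_box(x), 3))
```

Unlike the number-guessing game, the size of `diff` sets the size of each correction, so large misses give large steps and small misses give small ones.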

1. Build a neural network and give it initial weights. Let's first assume the logic of this black box is $z = x + x^2$;

2. Input 1: according to $z = x + x^2$ the output is 2, while the actual output is 2.21, so the error is $2 - 2.21 = -0.21$ and the output is too small;

3. Adjust the weight, for example to $z = 1.5x + x^2$, then input 1.1: the computed output is 2.86 and the actual output is 2.431, so the error is $2.86 - 2.431 = 0.429$ and the output is too large;

4. Adjust the weight, for example to $z = 1.2x + x^2$, then input 1.2...

5. Adjust the weight again and input 2... Throughout, the rule is: if the error is positive, decrease the weight; if the error is negative, increase it;

6. After all samples have been traversed once, compute the average loss;

7. Repeat steps 3, 4, 5 and 6 until the loss falls below a threshold such as 0.001. At that point we can consider the network trained and the black box "cracked": its behavior has effectively been copied. Strictly speaking, the neural network never obtains the real function inside the black box; it only learns an approximate simulation of it.
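The training loop above can be sketched in Python as follows. Everything concrete is an assumption for illustration: the hidden black box is taken to compute `2.21 * x`, which happens to reproduce both actual outputs quoted above (2.21 at input 1, 2.431 at input 1.1); the samples are the inputs 1.0, 1.1, ..., 2.0; and the model $z = w_1 x + w_2 x^2$ starts at $w_1 = w_2 = 1$, i.e. $z = x + x^2$. Instead of nudging the weights by hand, it follows the gradient of the mean squared error. For positive inputs a positive error yields a positive gradient, so the update lowers the weight, and a negative error raises it, matching the rule in step 5.

```python
def black_box(x: float) -> float:
    # Hypothetical hidden function; the network only ever sees its outputs.
    return 2.21 * x


def train(lr: float = 0.05, threshold: float = 0.001, max_epochs: int = 100_000):
    xs = [1.0 + 0.1 * i for i in range(11)]  # samples 1.0, 1.1, ..., 2.0
    ys = [black_box(x) for x in xs]
    n = len(xs)
    w1, w2 = 1.0, 1.0                         # initial model: z = x + x^2
    for epoch in range(1, max_epochs + 1):
        loss = grad1 = grad2 = 0.0
        for x, y in zip(xs, ys):              # traverse every sample once
            err = (w1 * x + w2 * x**2) - y    # computed output minus actual output
            loss += err**2
            grad1 += 2 * err * x              # d(err^2)/d(w1)
            grad2 += 2 * err * x**2           # d(err^2)/d(w2)
        loss /= n                             # average loss over all samples
        if loss < threshold:                  # "cracked": stop training
            return w1, w2, loss, epoch
        # Positive error -> positive gradient -> weight goes down, and vice versa.
        w1 -= lr * grad1 / n
        w2 -= lr * grad2 / n
    return w1, w2, loss, max_epochs


w1, w2, loss, epochs = train()
print(f"w1 = {w1:.3f}, w2 = {w2:.3f}, loss = {loss:.5f} after {epochs} epochs")
```

Stopping once the average loss drops below 0.001 leaves the weights near, but not necessarily at, the hidden values: the network approximates the black box rather than recovering its exact formula.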

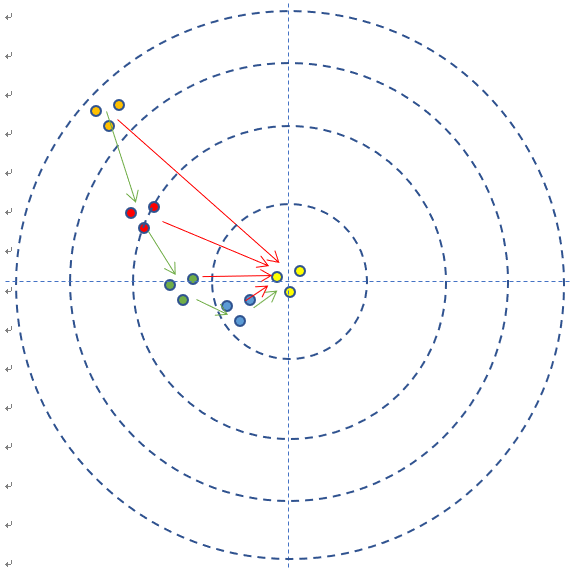

Or take the example of shooting at a target:

- Purpose: to hit the bullseye;

- Initialization: fire a shot at random; hitting anywhere on the target is fine, but remember the rifle's posture at that moment;

- Forward calculation: the bullet flies for a moment and hits the target;

- Loss function: the ring score and the angle of deviation;

The loss function here also carries two pieces of information, distance and direction. So the gradient is a vector! It tells us both the direction and the magnitude.

- Backpropagation: pull the target back and look at it;

- Gradient descent: Adjust the shooting angle of the rifle according to this deviation.
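To see why the gradient is a vector, here is a hypothetical 2-D version in Python. Aim point and impact point are (x, y) coordinates in millimetres with the bullseye at the origin; the bullet is assumed to land at the aim point plus a fixed, made-up drift `OFFSET` (a stand-in for the forward calculation); the loss is the squared distance from the bullseye. Its gradient has one component per axis, so it carries both direction and size.

```python
import math

# Hypothetical systematic drift of the rifle (mm): where the bullet lands
# relative to the aim point. Purely illustrative.
OFFSET = (12.0, -8.0)


def impact(aim):
    """Forward calculation: where the bullet lands for a given aim point."""
    return (aim[0] + OFFSET[0], aim[1] + OFFSET[1])


def loss_and_grad(aim):
    """Loss = squared distance of the impact from the bullseye at (0, 0),
    plus its gradient with respect to the aim point."""
    x, y = impact(aim)
    loss = x * x + y * y
    grad = (2 * x, 2 * y)  # a 2-D vector: which way and how far the miss is
    return loss, grad


aim = (0.0, 0.0)  # initialization: the first, arbitrary posture
lr = 0.25         # hypothetical step size
misses = []
for shot in range(1, 6):                        # five test shots
    loss, (gx, gy) = loss_and_grad(aim)
    misses.append(math.sqrt(loss))
    print(f"shot {shot}: miss {misses[-1]:.2f} mm")
    aim = (aim[0] - lr * gx, aim[1] - lr * gy)  # adjust against the gradient
```

With `lr = 0.25` each step moves the aim halfway toward the point that cancels the drift, so the miss distance halves with every shot.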

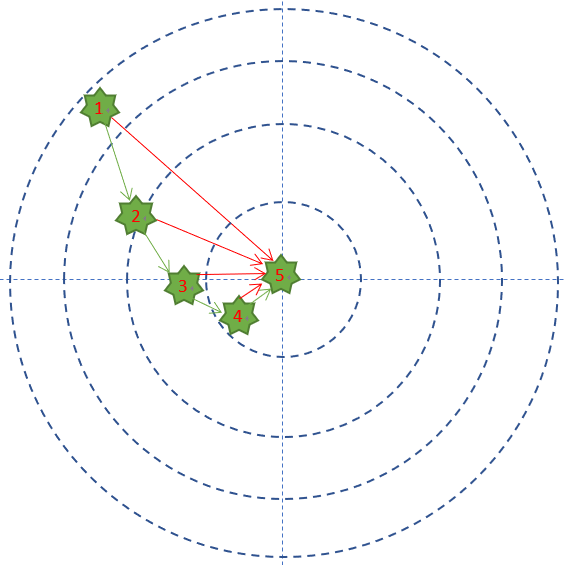

- The difference between each test shot's point of impact and the bullseye is called the error. It can be represented by an error function, such as the absolute value of the difference, shown as the red line in the figure.

- Five shots were tried in total, which corresponds to 5 rounds of iteration/training.

- After each shot, pulling the target back to see the point of impact and then adjusting the angle of the next shot is called backpropagation. Note the essential difference between pulling the target back and walking up to the target to look at it: the latter could be life-threatening, because other shooters are firing. As an admittedly imperfect analogy from mathematics, running up to the target to look at it corresponds to forward differentiation, while pulling the target back to look at it corresponds to reverse differentiation.

- The size and direction of each angle adjustment is called the gradient, for example 1 mm to the right, or 2 mm to the lower left; these are the green vector lines in the figure.

- In this example, multiple samples correspond to burst fire: assume 3 bullets can be fired in a row each time (wow, rapid fire right away), with a similar spread each time;

- If 3 bullets are fired in a row each time, the sum of the distances from the 3 impact points to the bullseye, divided by 3, is called the loss, and it is expressed by the loss function.

- In this example, if the gap is measured by score, the feedback Xiaoming receives goes from 9 points short, to 8 points short, to 2 points short, to 1 point short, to 0 points short. This is a way of quantifying how far off each shot is, which is exactly the role of the error function. Because there is only one sample at a time, the term error function is used here; with multiple samples at a time, it is called a loss function.
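A small Python sketch of the error/loss distinction, using made-up impact coordinates (hypothetical data, in cm, bullseye at the origin): the error function scores a single shot by its distance from the bullseye, and the loss function averages that error over one 3-round burst.

```python
import math


def error(point):
    """Error function: distance of a single impact point from the bullseye at (0, 0)."""
    return math.hypot(point[0], point[1])


def loss(burst):
    """Loss function: mean error over all shots in one burst (multiple samples)."""
    return sum(error(p) for p in burst) / len(burst)


burst = [(3.0, 4.0), (0.0, 2.0), (-1.0, 0.0)]  # hypothetical 3-round burst
print([error(p) for p in burst])  # → [5.0, 2.0, 1.0]
print(loss(burst))                # → 2.6666666666666665
```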

In reality, shooting is not that simple. For a long-range sniper, air resistance and wind speed must also be taken into account. In a neural network, air resistance and wind speed can be thought of as corresponding to hidden layers.

A brief summary of how backpropagation and gradient descent work:

1. Initialization;

2. Forward calculation;

3. The loss function gives us a way to compute the loss;

4. Gradient descent uses the loss function to decide in which direction to adjust the network weights, moving toward the point of minimum loss;

5. Backpropagation passes the loss backward through each layer of the neural network, so that each layer adjusts its weights according to the loss;

6. Go back to step 2 until the accuracy is good enough (e.g. the loss is below 0.001).
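The whole cycle can be sketched end to end in Python with a deliberately tiny network: two layers, each a single weight, computing $z = w_2 (w_1 x)$, where the middle value $h = w_1 x$ plays the part of a hidden layer. The data (`y = 2x`), initial weights, learning rate and the 0.001 threshold are illustrative assumptions, not taken from the tutorial. Backpropagation here is simply the chain rule, applied from the output back to each layer.

```python
def train(xs, ys, w1=0.5, w2=1.0, lr=0.05, threshold=0.001, max_steps=10_000):
    n = len(xs)
    for step in range(1, max_steps + 1):
        # Forward calculation through both layers
        hs = [w1 * x for x in xs]            # hidden layer
        zs = [w2 * h for h in hs]            # output layer
        # Loss function: mean squared error
        loss = sum((z - y) ** 2 for z, y in zip(zs, ys)) / n
        if loss < threshold:                 # accurate enough: stop
            return w1, w2, loss, step
        # Backpropagation (chain rule), starting from the output layer
        dz = [2 * (z - y) / n for z, y in zip(zs, ys)]  # dLoss/dz
        grad_w2 = sum(d * h for d, h in zip(dz, hs))    # dz/dw2 = h
        dh = [d * w2 for d in dz]                       # pass the loss back to the hidden layer
        grad_w1 = sum(d * x for d, x in zip(dh, xs))    # dh/dw1 = x
        # Gradient descent: every layer adjusts its own weight
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return w1, w2, loss, max_steps


xs = [0.5, 1.0, 1.5]
ys = [2 * x for x in xs]
w1, w2, loss, steps = train(xs, ys)
print(f"w1*w2 = {w1 * w2:.3f}, loss = {loss:.5f}, steps = {steps}")
```

Note that only the product $w_1 w_2$ is pinned down by the data; the individual weights depend on where training started, another small example of the network imitating the black box rather than recovering its exact insides.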

And that's the end of the concise overview!

That's all.
