Introducing SUSE Enterprise Storage 6 (Part 2)

Pools, Data and Placement Groups

Why use an Object Store? What is it?

Files and directories have been around for a long time. Modern files keep getting bigger, and there are more and more of them, especially from the point of view of an enterprise infrastructure or an internet service provider. Object storage was introduced to address these issues: there is no theoretical limit on the number of objects, and it is much better suited to large files as well. Over 70% of OpenStack clouds use Ceph, and object storage is becoming the standard backend storage for the enterprise.

Ceph stores all data as objects, with a default object size of 4 MB, and each object has a unique ID across the entire cluster. In a separate partition, the object metadata is stored as key/value pairs in a database. As mentioned earlier, there are three access protocols:

- Object (the native librados library, or radosgw)

- Block (RBD via the native kernel module or the user-space rbd-fuse, or iSCSI)

- File (CephFS, NFS Ganesha, SMB/CIFS)
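To make the 4 MB object size concrete, here is a minimal sketch of how a large file or block image is cut into fixed-size objects and how a byte offset maps to one of them. The object naming (`<prefix>.<16-digit hex index>`) is modelled on how RBD names its data objects, but the `rbd_data.abc123` prefix is a hypothetical example, and the helper names are illustrative only.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # 4 MiB, the default object size mentioned above


def object_count(data_size: int, object_size: int = OBJECT_SIZE) -> int:
    """Number of objects needed to hold data_size bytes (ceiling division)."""
    return -(-data_size // object_size)


def locate(offset: int, object_size: int = OBJECT_SIZE) -> tuple:
    """Return (object index, offset inside that object) for a byte offset."""
    return divmod(offset, object_size)


def object_name(prefix: str, index: int) -> str:
    """Build an object name from a per-image prefix and a zero-padded hex index.
    The prefix used below is a hypothetical example."""
    return f"{prefix}.{index:016x}"


if __name__ == "__main__":
    size = 10 * 1024 * 1024                  # a 10 MiB file or image
    print(object_count(size))                # 3 objects
    idx, inner = locate(5 * 1024 * 1024)     # the byte at offset 5 MiB
    print(idx, inner)                        # 1 1048576
    print(object_name("rbd_data.abc123", idx))
```

Because every object has the same maximum size, finding the object for any offset is a single division. There is no per-file allocation table to walk.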

What are Pools?

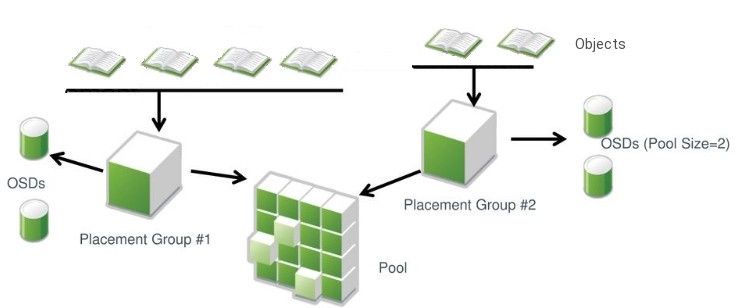

It is not for swimming, that's for sure. It is more like a bookstore or library in which the objects are the books. All objects are organized under the logical concept of a pool, and each pool defines how its objects are managed:

- Replication size or Erasure Coding profile

- Number of placement groups (PGs)

- CRUSH rule for data placement

For production, the replication size should be 3 (the default) or higher, which means storing 300% of the original data size. This tolerates two failures in the pool: two storage devices can fail at the same time without data loss. Erasure Coding takes a mathematical approach instead. It breaks data into K data chunks plus M parity (coding) chunks, which tolerates M failures, much like how CDs and DVDs protect their data. For the same fault tolerance of 2 (M = 2) with the data split into 2 chunks (K = 2), you store 200% of the original data size. Raise K to 4 while keeping M at 2, and the pool consumes only 150% of the original data size with the same fault tolerance.

Now you may ask: why wouldn't everyone use Erasure Coding to save space? The answer is that the higher K is, the more CPU the encoding calculations require. Also, when data needs to be rebuilt, recovery can be several times slower than with replication, which only needs to copy from the surviving replicas.

Before Luminous, Ceph did not support RBD and CephFS on Erasure Coded pools (EC pools). EC pools also do not support omap, which RBD and CephFS need to store their metadata. So the metadata has to go into a replicated pool. The data can go into an EC pool with allow_ec_overwrites enabled, which requires BlueStore.

$ ceph osd pool set <ec-pool-name> allow_ec_overwrites true
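The space arithmetic in the replication vs. Erasure Coding comparison can be checked with a few lines of Python. This is a minimal sketch: "failures tolerated" assumes every copy or chunk sits in a separate failure domain. It also ignores `min_size`, so I/O may pause before data is actually lost.

```python
from fractions import Fraction


def replication(size: int) -> dict:
    """Replicated pool: 'size' full copies; survives size - 1 lost copies."""
    return {"raw_per_usable": Fraction(size), "failures_tolerated": size - 1}


def erasure(k: int, m: int) -> dict:
    """EC pool: k data chunks + m coding chunks; survives m lost chunks."""
    return {"raw_per_usable": Fraction(k + m, k), "failures_tolerated": m}


for label, scheme in [
    ("replica 3", replication(3)),
    ("EC k=2,m=2", erasure(2, 2)),
    ("EC k=4,m=2", erasure(4, 2)),
]:
    pct = float(scheme["raw_per_usable"] * 100)
    print(f"{label}: {pct:.0f}% raw, tolerates {scheme['failures_tolerated']} failures")
```

All three schemes tolerate two failures. The raw space drops from 300% to 200% to 150%, which is exactly the trade-off against CPU cost and recovery speed described above.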

PG size

A pool contains a number of placement groups (PGs), which is set when the pool is created. Traditionally this number could only be increased. As I explained in an earlier article about PG autoscaling, that is no longer the case.
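To see how objects end up in PGs, here is a sketch of the mapping step. Ceph hashes the object name and folds the hash onto `pg_num` with its `ceph_stable_mod()` function, whose logic is reproduced below. One assumption: `zlib.crc32` stands in for Ceph's rjenkins hash, and `hash_share` is a hypothetical helper for illustration.

```python
import zlib


def pg_num_mask(pg_num: int) -> int:
    """Smallest 2**n - 1 that covers pg_num - 1 (e.g. 12 -> 15, 16 -> 15)."""
    return (1 << (pg_num - 1).bit_length()) - 1


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Same logic as Ceph's ceph_stable_mod(): hashes that land at or beyond b
    are folded back onto the lower half of the mask."""
    if (x & bmask) < b:
        return x & bmask
    return x & (bmask >> 1)


def object_to_pg(name: str, pg_num: int) -> int:
    # Assumption: crc32 replaces Ceph's rjenkins hash for illustration only.
    h = zlib.crc32(name.encode())
    return stable_mod(h, pg_num, pg_num_mask(pg_num))


def hash_share(pg_num: int) -> dict:
    """How many of the 2**n low-bit hash slots each PG receives."""
    mask = pg_num_mask(pg_num)
    share = {}
    for x in range(mask + 1):
        pg = stable_mod(x, pg_num, mask)
        share[pg] = share.get(pg, 0) + 1
    return share


print(hash_share(16))  # every PG gets 1 slot: even distribution
print(hash_share(12))  # PGs 4-7 get 2 slots each: uneven distribution
print(object_to_pg("my-object", 16))
```

The sketch also shows why increasing `pg_num` from 8 to 16 is a clean split. Every object in PG `p` either stays in `p` or moves to `p + 8`, so data only moves between a parent PG and its child.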

Replication Pool

Creating a pool simply takes a name and the number of placement groups (pg_num, followed by pgp_num in the command below). Remember that pg_num should be a power of 2, so you don't run into problems when PGs are split or merged.

$ ceph osd pool create rep-pool 16 16
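Before typing a `pg_num`, it can help to check that it is a power of two and to get a starting value. The sketch below uses a commonly quoted rule of thumb of roughly 100 PGs per OSD, divided by the replica count and rounded up to a power of two. The target of 100 and the round-up choice are assumptions, not an SES requirement, and in Nautilus-based SES 6 the PG autoscaler can make this decision for you.

```python
import math


def is_power_of_two(n: int) -> bool:
    return n > 0 and n & (n - 1) == 0


def next_power_of_two(n: int) -> int:
    """Smallest power of two >= n (n must be positive)."""
    return 1 << (n - 1).bit_length()


def suggested_pg_num(num_osds: int, pool_size: int, target_per_osd: int = 100) -> int:
    """Rule-of-thumb PG count: (OSDs * target PGs per OSD) / replica count,
    rounded up to a power of two. target_per_osd=100 is an assumption."""
    raw = num_osds * target_per_osd / pool_size
    return next_power_of_two(max(1, math.ceil(raw)))


print(is_power_of_two(16), is_power_of_two(24))  # True False
print(suggested_pg_num(12, 3))                   # 12 OSDs, size 3 -> 512
```

Rounding up rather than to the nearest power of two is a deliberate simplification here. Other calculators round differently.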

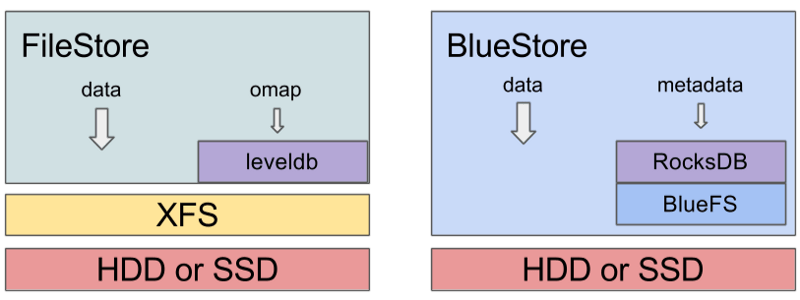

FileStore and BlueStore

BlueStore is the default storage back end in SES 6. It avoids double writes and supports on-the-fly data compression. It writes to the block device directly instead of sitting on top of another filesystem (XFS) as FileStore does. Its metadata lives in RocksDB, which gives better reads with less overhead, and BlueFS is a minimal filesystem that provides just enough for RocksDB to store its key/value files. Since BlueStore does not go through the OS filesystem, it manages its own memory and cache inside the OSD daemon. By default it caches reads.

bluestore_cache_autotune is enabled by default, which takes much of the difficulty and confusion out of tuning cache behaviour by hand.

$ ceph config get osd.* bluestore_cache_autotune
true
$ ceph config get osd.* bluestore_cache_size
0
$ ceph config get osd.* bluestore_cache_size_hdd
1073741824   # 1 GB
$ ceph config get osd.* bluestore_cache_size_ssd
3221225472   # 3 GB
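The three size settings interact in a simple way under manual cache sizing. A non-zero `bluestore_cache_size` wins. Otherwise the HDD or SSD default applies, depending on the OSD's primary device. The sketch below models only that rule, using the values from the `ceph config get` output. When autotune is enabled (the default), the OSD instead resizes its caches toward `osd_memory_target`, which this sketch does not model.

```python
GiB = 1024 ** 3

# Values as reported by `ceph config get` in the output above.
config = {
    "bluestore_cache_autotune": True,
    "bluestore_cache_size": 0,
    "bluestore_cache_size_hdd": 1073741824,
    "bluestore_cache_size_ssd": 3221225472,
}


def effective_cache_size(cfg: dict, rotational: bool) -> int:
    """Manual-sizing rule: use bluestore_cache_size if non-zero, else the
    per-media default. Autotune behaviour is intentionally not modelled."""
    if cfg["bluestore_cache_size"]:
        return cfg["bluestore_cache_size"]
    key = "bluestore_cache_size_hdd" if rotational else "bluestore_cache_size_ssd"
    return cfg[key]


print(effective_cache_size(config, rotational=True) / GiB)   # 1.0
print(effective_cache_size(config, rotational=False) / GiB)  # 3.0
```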

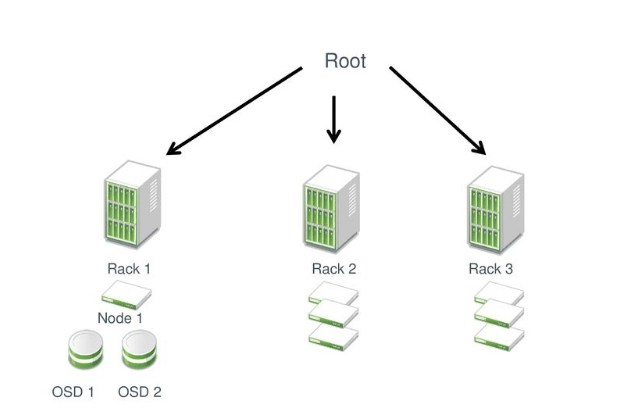

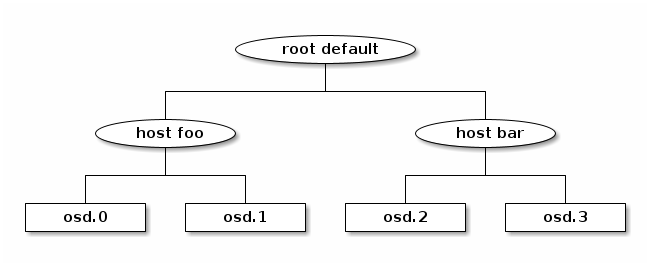

CRUSH map

Controlled Replication Under Scalable Hashing (CRUSH) is the algorithm Ceph uses to place data. The CRUSH map holds the rule definitions that describe how the OSDs organize data. The map lets you manage data distribution per pool, and a rule can be reused by several pools. It defines the physical layout of the cluster and the relationships between OSDs, nodes, racks, data centers, etc. It also includes device classes to support different storage media types, e.g. HDD, SSD, NVMe. In fact, you can define a fast HDD class and a slow HDD class as well.
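To get a feel for what a rule like "one replica per host" does, here is a toy model of CRUSH's straw2 bucket selection over a hypothetical cluster. Each candidate gets a pseudo-random draw `ln(u) / weight`, and the largest draw wins. On a collision, the choice is retried with a different replica number `r`. This is a sketch, not Ceph's implementation. Real CRUSH uses the rjenkins hash, fixed-point logarithm tables and more elaborate retry logic. Here `sha256` is an assumed stand-in, and `CLUSTER`, `place` and the PG id are hypothetical.

```python
import hashlib
import math

# Hypothetical layout: root -> hosts -> OSDs, with OSD weights (e.g. TiB).
CLUSTER = {
    "node1": {"osd.0": 1.0, "osd.1": 1.0},
    "node2": {"osd.2": 1.0, "osd.3": 1.0},
    "node3": {"osd.4": 2.0},
    "node4": {"osd.5": 1.0, "osd.6": 1.0},
}


def _draw(key: str, item: str, r: int, weight: float) -> float:
    """straw2-style draw: ln(u) / weight with u in (0, 1]; the largest draw wins."""
    digest = hashlib.sha256(f"{key}/{item}/{r}".encode()).digest()
    u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
    return math.log(u) / weight


def straw2_pick(key: str, items: dict, r: int) -> str:
    return max(items, key=lambda it: _draw(key, it, r, items[it]))


def place(pg_id: str, replicas: int) -> list:
    """Roughly 'step chooseleaf firstn 0 type host': choose distinct hosts,
    then one OSD inside each chosen host."""
    if replicas > len(CLUSTER):
        raise ValueError("not enough hosts for the requested replica count")
    host_weights = {h: sum(osds.values()) for h, osds in CLUSTER.items()}
    chosen_hosts, r = [], 0
    while len(chosen_hosts) < replicas:
        host = straw2_pick(pg_id, host_weights, r)
        r += 1  # retry with a new replica number on collision
        if host not in chosen_hosts:
            chosen_hosts.append(host)
    return [straw2_pick(pg_id, CLUSTER[h], 0) for h in chosen_hosts]


print(place("1.2a", 3))  # three OSDs, each on a different host
```

The placement is computed, not looked up. Any client with the same map gets the same answer, which is why Ceph needs no central placement table.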

I find it much easier to edit the CRUSH map text file by hand than to use the CLI, but both are available. The dashboard does not yet have a GUI for creating a CRUSH map.

$ ceph osd getcrushmap -o <compiled-crushmap-file>
$ crushtool -d <compiled-crushmap-file> -o crushmap.txt

In the crushmap.txt file you will find the following sections:

- tunables: the default behaviour settings of the CRUSH algorithm

- devices: a list of OSDs, i.e. all of your HDDs, SSDs, NVMe drives, etc.

  - the “device class” is where you define each device's media type, for example:

$ ceph osd crush set-device-class <hdd-class> <osd-name>

$ ceph osd crush rm-device-class <osd-name>

- types: a list of location types (physical or logical) used to describe your architecture

  - osd, host, chassis, rack, row, pdu, pod, room, datacenter, zone, region, root

- buckets: a map showing how the parts of your hardware architecture relate to each other

  - It uses the types to lay out the architecture and the relationships between buckets

  - It forms a tree, or several trees (one shadow tree per device class); use the CLI below to see them

$ ceph osd crush tree
$ ceph osd crush tree --show-shadow

- rules: the policies that define how data should be distributed

$ ceph osd crush rule ls
$ ceph osd crush rule dump
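If you edit crushmap.txt by hand, a small script can sanity-check what you wrote before recompiling it. The sketch below pulls out the tunables, device classes, types and rule steps from the decompiled text format, and skips bucket blocks. The `SAMPLE` text is a trimmed, hypothetical map, and `parse_crushmap` is an illustrative helper, not part of any Ceph tool.

```python
import re

SAMPLE = """\
# begin crush map
tunable choose_total_tries 50
tunable chooseleaf_vary_r 1

# devices
device 0 osd.0 class hdd
device 1 osd.1 class ssd

# types
type 0 osd
type 1 host
type 11 root

# buckets
host node1 {
\tid -3\t\t# do not change unnecessarily
\talg straw2
\thash 0\t# rjenkins1
\titem osd.0 weight 1.000
}

# rules
rule replicated_rule {
\tid 0
\ttype replicated
\tstep take default
\tstep chooseleaf firstn 0 type host
\tstep emit
}
# end crush map
"""


def parse_crushmap(text: str) -> dict:
    """Collect tunables, device classes, types and rule steps; skip buckets."""
    result = {"tunables": {}, "devices": {}, "types": {}, "rules": {}}
    rule = None  # name of the rule block we are currently inside, if any
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        if m := re.fullmatch(r"rule (\S+) \{", line):
            rule = m[1]
            result["rules"][rule] = []
        elif rule is not None:
            if line == "}":
                rule = None
            elif line.startswith("step "):
                result["rules"][rule].append(line[len("step "):])
        elif m := re.fullmatch(r"tunable (\S+) (\d+)", line):
            result["tunables"][m[1]] = int(m[2])
        elif m := re.fullmatch(r"device (\d+) (\S+)(?: class (\S+))?", line):
            result["devices"][m[2]] = m[3]  # None if the OSD has no class
        elif m := re.fullmatch(r"type (\d+) (\S+)", line):
            result["types"][m[2]] = int(m[1])
    return result


cmap = parse_crushmap(SAMPLE)
print(cmap["devices"])                    # {'osd.0': 'hdd', 'osd.1': 'ssd'}
print(cmap["rules"]["replicated_rule"])
```

After editing, the text map is compiled back with `crushtool -c` and loaded with `ceph osd setcrushmap -i`.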

Should I write a separate article about EC pools vs. replicated pools, or cover it here? A full CRUSH map rule setup may also need an article of its own. Thanks for reading; please like, share and comment.