Understanding the Key Parts of Web3 Infrastructure

Introduction

With the rapid emergence of cross-chain bridges, next-generation testing frameworks, and other crypto protocols, how to map out blockchain infrastructure effectively remains an open question for users, developers, and investors. "Blockchain infrastructure" can cover a wide range of products and services, from the underlying network stack to consensus models and virtual machines. We will analyze the "core" components that make up L1/L2 chains in more depth in future articles (stay tuned!). This article has two specific goals:

- Provide a broad overview of the other key components of blockchain infrastructure.

- Break these components down into clearer, easier-to-understand subsections.

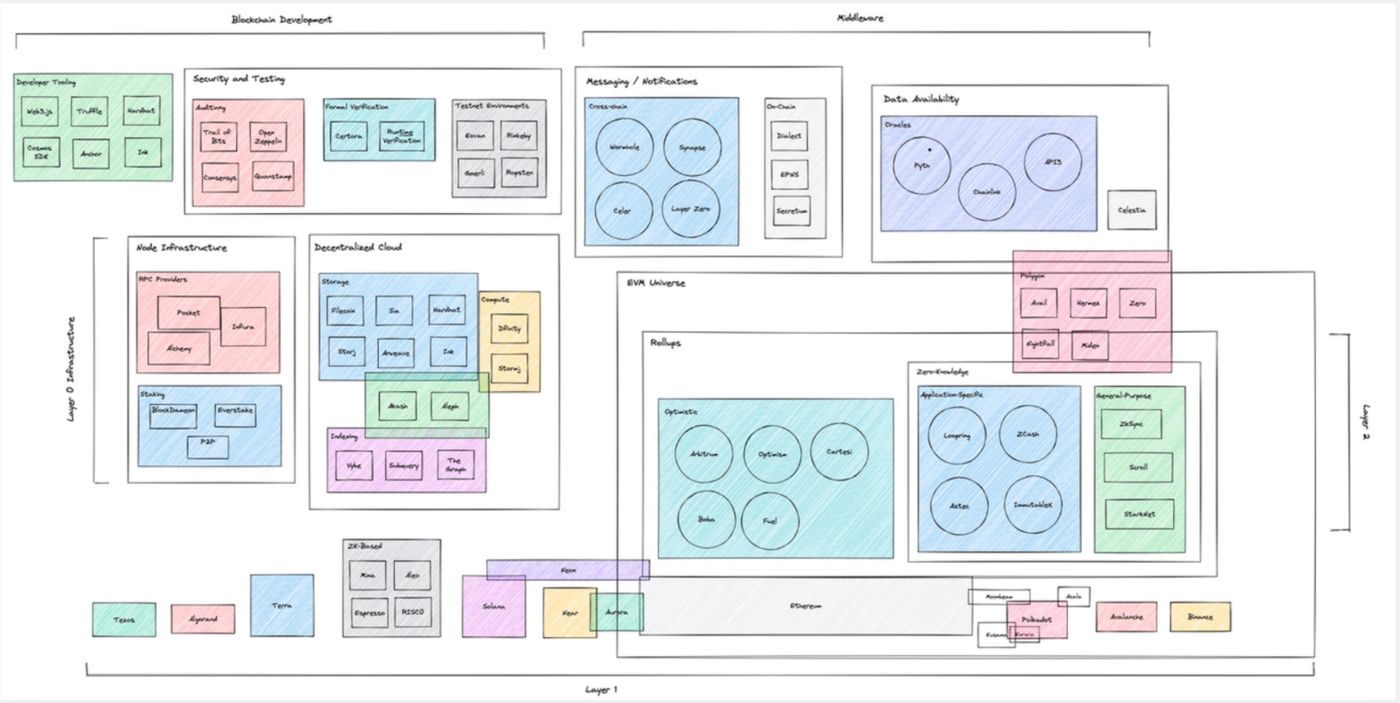

Infrastructure diagram

We define the "ecosystem" of blockchain infrastructure as the set of protocols designed to support the development of L1 and L2 chains in the following key areas:

- Layer-0 infrastructure

  - (1) Decentralized cloud services (storage, computing, indexing)

  - (2) Node infrastructure (RPC, staking/validators)

- Middle layer

  - (1) Data availability

  - (2) Communication and messaging protocols

- Blockchain development

  - (1) Security and testing

  - (2) Developer tools (out-of-the-box tools, front-end/back-end libraries, languages/IDEs)

Layer-0 infrastructure

Decentralized cloud services

Cloud services were critical to the development of Web2: as applications' computing and data storage requirements grew, cloud providers had to deliver data and compute quickly and cost-effectively. Web3 applications have similar data and compute requirements, but want to meet them within the blockchain paradigm. Decentralized versions of cloud services emerged as a result.

Decentralized cloud services have 3 core parts:

Storage - Data and files are stored on servers run by multiple entities. These networks are highly fault tolerant because data is replicated or spread across multiple servers.

Computation - Like storage, computation is centralized in the Web2 paradigm. Decentralized computing distributes computation across multiple nodes for greater fault tolerance (if one node or a group of nodes fails, the network can still serve requests with minimal disruption to performance).

Indexing - In the Web2 world, data is stored on a server or set of servers owned and operated by a single entity, so querying it is relatively easy. Because blockchain nodes are distributed, data may be scattered across isolated silos, often under incompatible standards. Indexing protocols aggregate this data and expose it through an easy-to-use, standardized API.
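To make the indexing idea concrete, here is a minimal in-memory sketch, not how any particular indexing protocol is implemented. The `TransferEvent` schema, the `TransferIndex` class, and the chain and address names are all hypothetical; a real indexer would ingest events from nodes and persist them.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferEvent:
    """Hypothetical normalized record; real indexers define their own schemas."""
    chain: str
    block: int
    sender: str
    recipient: str
    amount: int


class TransferIndex:
    """Aggregates events from several chains behind one query method."""

    def __init__(self) -> None:
        self._by_address = defaultdict(list)

    def ingest(self, event: TransferEvent) -> None:
        # Index the event under both parties so either side can be queried.
        # Using a set avoids storing a self-transfer twice.
        for address in {event.sender, event.recipient}:
            self._by_address[address].append(event)

    def transfers_for(self, address: str) -> list:
        # One standardized view, ordered by chain name and then block height.
        events = self._by_address.get(address, [])
        return sorted(events, key=lambda e: (e.chain, e.block))


index = TransferIndex()
index.ingest(TransferEvent("chain-a", 12, "alice", "bob", 5))
index.ingest(TransferEvent("chain-b", 7, "bob", "carol", 2))
index.ingest(TransferEvent("chain-a", 3, "carol", "bob", 1))
bob_history = index.transfers_for("bob")
```

The caller asks one question ("what happened to this address?") and never needs to know which chain or node originally held each record.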

- Projects that provide storage, computation, and indexing: Aleph and Akash Network

- Specialized projects: The Graph for indexing, and Arweave and Filecoin for storage.

Node infrastructure

- Remote Procedure Calls (RPCs) are a core function of various types of software systems.

They allow one program to call or access a program on another computer, which is especially useful for blockchains, which have to handle a large number of incoming requests from machines running in different regions and environments. Providers like Alchemy, Syndica, and Infura offer this infrastructure as a service, allowing developers to focus on high-level application development rather than on the underlying mechanics of relaying or routing calls to nodes. Like many RPC providers, Alchemy owns and operates all of its nodes. For many in the crypto community, the danger of centralized RPC is obvious: it introduces a single point of failure that can jeopardize the liveness of the chain (i.e., if Alchemy fails, applications will not be able to retrieve or access on-chain data). More recently, decentralized RPC protocols like Pocket have emerged to address this problem, but the effectiveness of this approach has yet to be tested at scale.

- Staking/Validator - The security of a blockchain relies on a distributed set of nodes validating transactions on the chain, but someone must actually run the nodes that participate in consensus.
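The single-point-of-failure concern can be illustrated from the application side. The sketch below builds a JSON-RPC 2.0 request and tries a list of endpoints in order until one answers. `eth_blockNumber` is a standard Ethereum JSON-RPC method; everything else (the endpoint URLs, the `call_with_failover` helper, and the fake transport that stands in for an HTTP POST) is an assumption made for illustration.

```python
import json
from typing import Callable, Sequence

# A transport takes (endpoint_url, request_body) and returns the raw response text.
Transport = Callable[[str, str], str]


def build_request(method: str, params: list, request_id: int = 1) -> str:
    """Serialize a JSON-RPC 2.0 request body."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def call_with_failover(endpoints: Sequence[str], transport: Transport,
                       method: str, params: list):
    """Try each endpoint in order and return the first successful result."""
    body = build_request(method, params)
    errors = []
    for url in endpoints:
        try:
            response = json.loads(transport(url, body))
        except (OSError, ValueError) as exc:  # network failure or malformed JSON
            errors.append(f"{url}: {exc}")
            continue
        if "result" in response:
            return response["result"]
        errors.append(f"{url}: {response.get('error')}")
    raise RuntimeError("all RPC endpoints failed: " + "; ".join(errors))


def fake_transport(url: str, body: str) -> str:
    # Stand-in for an HTTP POST; the first provider is simulated as being down.
    if "primary" in url:
        raise ConnectionError("provider unavailable")
    return json.dumps({"jsonrpc": "2.0", "id": 1, "result": "0x10"})


# Hypothetical endpoints; block numbers come back as hex strings.
latest_block = int(
    call_with_failover(
        ["https://primary.example", "https://backup.example"],
        fake_transport,
        "eth_blockNumber",
        [],
    ),
    16,
)
```

With a single endpoint in the list, an outage at that provider surfaces as a hard error, which is the failure mode described above.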

In most cases, the time, money, and energy required to run a node are so high that many people opt out and rely on others to take responsibility for on-chain security. This behavior creates a serious problem: if everyone hands security off to someone else, no one is left to validate. Services like P2P and Blockdaemon run the infrastructure and let inexperienced or underfunded users participate in consensus by pooling their funds. Some argue that these staking providers introduce an unnecessary level of centralization, but the alternative could be worse: without them, the barriers to running a node would be extremely high for the average network participant, which may lead to an even higher degree of centralization.

Middle layer

Data availability

Applications are heavy consumers of data. In the Web2 paradigm, this data typically comes directly from users or from third-party vendors in a centralized manner (data vendors profit directly by collecting data and selling it to specific companies and applications, such as Amazon, Google, or other machine learning data providers).

DApps are also heavy consumers of data, but require nodes to provide this data to users or applications running on-chain. It is also extremely important to provide this data in a distributed manner in order to minimize trust issues. There are two main ways that applications can access high-fidelity data quickly and efficiently:

- Oracles (such as Pyth and Chainlink) provide access to off-chain data streams, enabling crypto networks to interface with legacy systems and other external information in a highly reliable and distributed manner, including high-quality financial data (e.g., asset prices). This service is critical for expanding DeFi into a wide range of areas such as trading, lending, sports betting, insurance, and more.

- A data availability layer is a chain that specializes in ordering transactions and providing data to the chains it supports. Typically, it produces data availability proofs that give users a high-probability guarantee that all block data has been published on-chain. Data availability proofs are key to ensuring the reliability of rollup sequencers and to reducing the cost of rollup transaction processing. Celestia's data availability layer is a good example.
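The "high-probability" guarantee mentioned for data availability layers can be made concrete with a simplified sampling model. This is a sketch under stated assumptions, not a description of any specific protocol: a client requests `samples` chunks chosen uniformly at random (with replacement), and a fraction `withheld_fraction` of the block's chunks is unavailable. The function names are hypothetical.

```python
import math


def miss_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that every sample hits an available chunk, so withholding goes unnoticed."""
    if not 0.0 <= withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be between 0 and 1")
    if samples < 0:
        raise ValueError("samples must be non-negative")
    return (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target_miss: float) -> int:
    """Smallest sample count whose miss probability is at most target_miss."""
    if not 0.0 < withheld_fraction < 1.0 or not 0.0 < target_miss < 1.0:
        raise ValueError("both arguments must be strictly between 0 and 1")
    return math.ceil(math.log(target_miss) / math.log(1.0 - withheld_fraction))


# If half of the chunks are withheld, how many samples push the chance of
# missing it below one in a million?
k = samples_needed(0.5, 1e-6)
residual = miss_probability(0.5, k)
```

Because the miss probability shrinks exponentially in the number of samples, a client can gain strong confidence while downloading only a small part of each block.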

Communication and Messaging Protocol

As the number of Layer-1s and the size of their ecosystems grow, so does the need to manage composability and interoperability across chains. Cross-chain bridges allow otherwise siloed ecosystems to interact in meaningful ways, much as trade routes connected different regions and ushered in a new era of knowledge sharing. Wormhole, LayerZero, and other cross-chain bridges support generic messaging, allowing all types of data, information, and tokens to flow across multiple ecosystems. Applications can even make arbitrary function calls across chains, which lets them tap into other communities and protocols without having to deploy elsewhere. Other protocols like Synapse and Celer are limited to cross-chain transfers of assets or tokens.

On-chain messaging remains a key component of blockchain infrastructure. As DApp development and retail demand grow, the ability of protocols to interact with their users in a decentralized way will be a key driver of growth. Below are a few potential areas where on-chain messaging might apply.
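As a rough illustration of generic messaging, the sketch below models a message envelope and a receiver that accepts each attested message exactly once. It is deliberately simplified and does not reflect how Wormhole, LayerZero, or any other bridge is implemented: the field names are hypothetical, and the `attested` set stands in for whatever verification mechanism a real bridge uses.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CrossChainMessage:
    """Hypothetical envelope for an arbitrary cross-chain payload."""
    source_chain: str
    target_chain: str
    sender: str
    nonce: int
    payload: dict  # arbitrary application data, e.g. a function name and arguments

    def digest(self) -> str:
        # Canonical JSON (sorted keys, fixed separators) so every party
        # hashes exactly the same bytes for the same message.
        encoded = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(encoded.encode()).hexdigest()


class Receiver:
    """Target-chain endpoint that accepts each attested message at most once."""

    def __init__(self, chain: str, attested: set) -> None:
        self.chain = chain
        self.attested = attested  # digests the bridge's verifiers have vouched for
        self.seen = set()         # digests already delivered (replay protection)

    def deliver(self, message: CrossChainMessage) -> bool:
        d = message.digest()
        if message.target_chain != self.chain:
            return False  # addressed to a different chain
        if d not in self.attested or d in self.seen:
            return False  # unverified, tampered with, or replayed
        self.seen.add(d)
        return True


message = CrossChainMessage("chain-a", "chain-b", "0xabc", 1,
                            {"call": "mint", "args": [10]})
receiver = Receiver("chain-b", attested={message.digest()})
first = receiver.deliver(message)   # accepted on first delivery
replay = receiver.deliver(message)  # rejected as a replay
```

Because the payload is an opaque dictionary, the same envelope can carry a token transfer, a governance vote, or a function call, which is what separates generic messaging from asset-only bridges.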

- Notifications to claim tokens

- In-wallet messaging

- Announcements and notices about important protocol updates

- Notifications that track critical issues (e.g., risk metrics for DeFi applications, security breaches)

Several well-known projects developing on-chain communication protocols: Dialect, Ethereum Push Notification Service (EPNS), XMTP

Blockchain development

Security and Testing

Blockchain security and testing is still relatively underdeveloped, but it is undeniably critical to the success of the entire ecosystem. Blockchain applications are particularly sensitive to security risks: because they often secure assets directly, a small error in design or implementation can lead to huge financial losses.

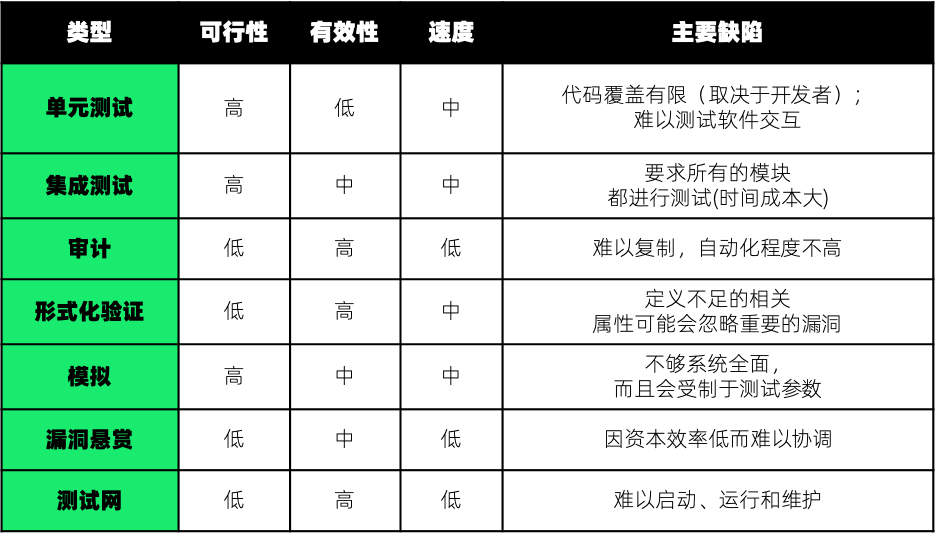

7 main security and testing methods:

- Unit testing is a core part of the test suite of most software systems, and developers have written a number of useful unit-testing frameworks to check and verify the smallest testable units of software. Popular ones on Ethereum include Waffle and Truffle, and Solana has the Anchor testing framework.

- Integration testing focuses on testing various software modules as a group. Although this testing paradigm is widely used outside of blockchains, it is also valuable in blockchain development, because libraries and high-level drivers often interact with other low-level modules in various ways (e.g., a typed library interacts with a set of underlying smart contracts), so testing the flow of data and information between these modules is critical.

- Auditing has become a core part of the security process for blockchain development. Protocols rely heavily on third-party code audits to check and verify every line of code, to ensure the highest degree of security before smart contracts are released to users. Trail of Bits, OpenZeppelin, and Quantstamp are a few trusted teams in contract code auditing (audit services are in high demand, and wait times are often months).

- Formal verification is about checking whether a program or software component satisfies a certain set of properties. Typically, someone writes a specification detailing how the program should behave, and the formal verification framework converts the specification into a set of constraints, which it then checks. Certora is a leading project that uses formal verification to enhance the security of smart contracts, and Runtime Verification is also active in this space.

- Simulation - Agent-based simulation systems have long been used by quantitative trading firms to backtest algorithmic trading strategies, and given the high cost of experimenting on a live blockchain, simulations provide a way to test various assumptions and inputs. Chaos Labs and Gauntlet are typical examples of platforms that use scenario-based simulations to secure blockchain protocols.

- Bug Bounties - Bug bounties help address large-scale security issues by drawing on the decentralized ethos of blockchains. Token rewards incentivize community members and hackers to report and fix critical issues. As such, bounty programs play a unique role in turning "gray hats" into "white hats" (i.e., turning people who would hack for profit into people who fix bugs and secure protocols). In fact, Wormhole now has a bug bounty on Immunefi worth up to $10 million (one of the largest software bug bounties ever), and we encourage everyone to contribute to the cause of cybersecurity!

- Testnets - A testnet provides an environment that works much like mainnet, allowing developers to test and debug parameters in a development setting. Many testnets use proof of authority (PoA) or other consensus mechanisms that optimize transaction speed with a small number of validators. Because testnet tokens have no real value, there is no way to earn them by mining; they are obtained from a faucet instead. Many testnets are built to simulate the behavior of a mainnet L1 (e.g., Ethereum's Rinkeby, Kovan, and Ropsten testnets).
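To show what the first method in the list above looks like in practice, here is a small unit-test sketch. It uses Python's standard `unittest` module and a toy `Token` ledger that stands in for a smart contract; it is not Waffle, Truffle, or Anchor code, and the class and test names are hypothetical. The last test is a lightweight randomized invariant check, which is a testing technique in the spirit of property checking and not formal verification.

```python
import random
import unittest


class Token:
    """Toy fungible-token ledger standing in for a smart contract."""

    def __init__(self, supply: int, owner: str) -> None:
        self.balances = {owner: supply}
        self.total_supply = supply

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


class TokenTest(unittest.TestCase):
    def test_transfer_moves_funds(self):
        token = Token(100, "alice")
        token.transfer("alice", "bob", 30)
        self.assertEqual(token.balances, {"alice": 70, "bob": 30})

    def test_overdraft_is_rejected(self):
        token = Token(100, "alice")
        with self.assertRaises(ValueError):
            token.transfer("bob", "alice", 1)

    def test_supply_is_conserved(self):
        # Invariant: no sequence of transfers may create or destroy tokens.
        rng = random.Random(0)  # fixed seed keeps the test reproducible
        token = Token(1000, "a")
        for _ in range(500):
            sender, recipient = rng.choice("abc"), rng.choice("abc")
            try:
                token.transfer(sender, recipient, rng.randint(1, 50))
            except ValueError:
                pass  # rejected transfers must leave the ledger unchanged
            self.assertEqual(sum(token.balances.values()), token.total_supply)


def run_tests() -> bool:
    """Run the suite programmatically and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TokenTest)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

Calling `run_tests()` returns `True` when every test passes. The first two tests pin down specific behaviors, while the third states a property that must hold for any input, which is closer to the kind of statement a formal specification would make.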

Each of the above methods has its own advantages and disadvantages, and they are not mutually exclusive. Different methods are typically used at different stages of project development:

Phase 1: Unit testing is done while building the smart contract.

Phase 2: Integration testing becomes especially important for testing the interaction between different modules when a higher level of abstraction is established.

Phase 3: Code audits are generally conducted near the testnet/mainnet release or large-scale feature release.

Phase 4: Formal verification is usually paired with code audits and serves as an additional security guarantee. Once it is set up, subsequent runs can be automated, making it easy to pair with continuous integration or continuous deployment tools.

Phase 5: While the application is running on the testnet, check throughput, traffic, and other scaling parameters.

Phase 6: Launch a bug bounty strategy after mainnet deployment to leverage community resources to find and fix issues.

Summary of test plans

Developer tools

The development of any technology or ecosystem depends on the success of its developers, and this is especially true in blockchain.

We divide developer tools into 3 main categories:

Out-of-the-box tools

SDKs for building new L1 chains help abstract away the process of creating and deploying the underlying consensus core. Pre-built modules increase development speed and standardization while preserving flexibility and customization. The Cosmos SDK, for example, enables rapid development of new PoS chains in the Cosmos ecosystem. Binance Chain and Terra are notable examples of chains built with the Cosmos SDK.

Smart Contract Development - Many tools are available to help developers create smart contracts quickly. For example, Truffle Boxes provides easy-to-use example Solidity contracts (voting, MetaCoin, etc.), and the community can extend its library.

Frontend/Backend Tools

Application development - Many tools (ethers.js, web3.js, etc.) already make it easier to build applications and deploy them on-chain.

Upgrades and contract interactions (e.g., OpenZeppelin SDK) - Various tools are specific to particular ecosystems (e.g., Anchor IDL for Solana contracts, ink! for Parity contracts) and handle RPC requests, publish IDLs, and generate clients.

Language and IDE

The programming model of a blockchain is usually very different from that of a traditional software system, and the languages used for blockchain development are designed to support that model. Solidity and Vyper are widely used on EVM-compatible chains, while other languages such as Rust are widely used on chains such as Solana and Terra.

Summary

"Blockchain infrastructure" can be an overloaded, hard-to-understand term. It tends to refer to a collection of products and services that cover everything from smart contract audits to cross-chain bridges.

Discussions about crypto infrastructure are often either too broad and confusing or too specialized for the average reader, so we hope this article strikes the right balance between those new to crypto and those looking for a more specialized treatment.

Of course, the crypto field is evolving rapidly, and the protocols mentioned in this article may no longer be a representative sample two or three months from now. Even so, we believe the main goal of this article (making the concept of "infrastructure" easier to understand and digest) will remain relevant, and we will continue to provide clear and accurate updates as the blockchain infrastructure landscape develops.

-------------------------------------------------- --------------------------------------------------

Original https://jumpcrypto.com/peeking-under-the-hood/ by Rahul Maganti (Vice President of Jump Crypto)

Content translation: Xiaochengzi Proofreading: medici Typesetting: Teacher Qiu
