I co-wrote this article with AI. The editor-in-chief said it was so well done that I don't need to come in tomorrow.

Writers, illustrators, composers and other literary and artistic workers face a growing risk of unemployment. All the illustrations in this article were created by AI, but the text was written by me, guaranteed genuine.

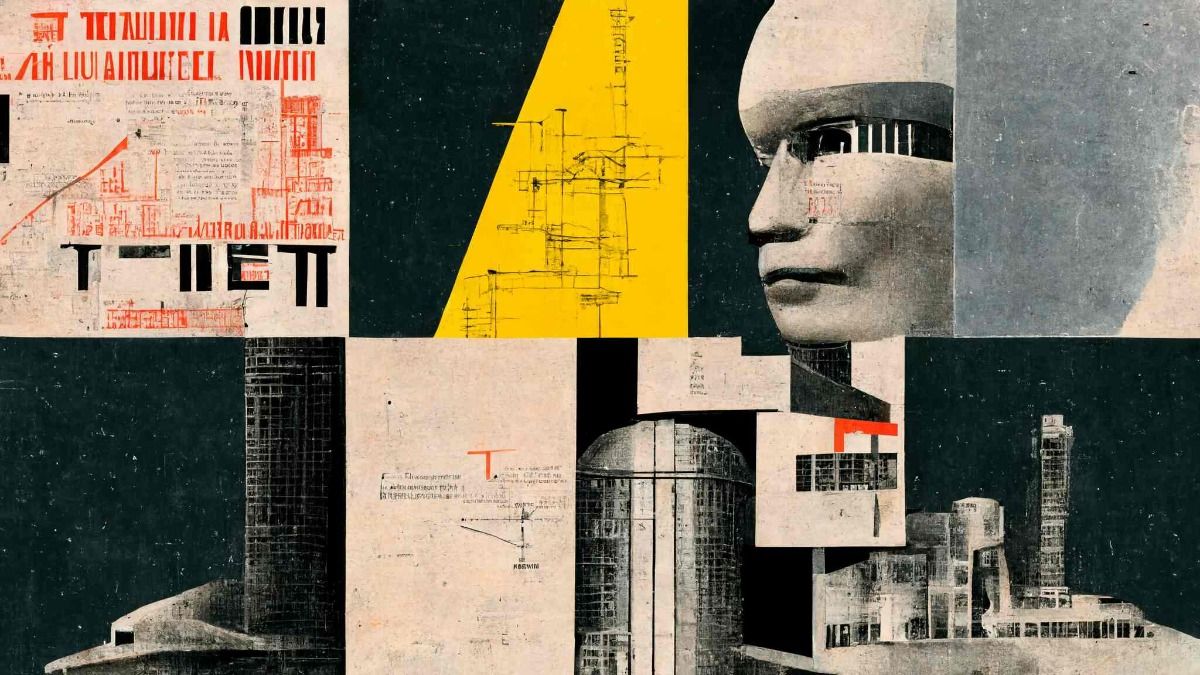

A recent run of striking images created by OpenAI's DALL-E 2, along with texts co-written with Microsoft's Florence and OpenAI's GPT-3 models, shows that AI can sometimes outdo humans. It is no exaggeration to call this year the first year of AI literary and artistic creation. Given a text or an image as a prompt, AI can produce works that are bold, interesting, sensible, and beyond anything we imagined. This is indeed of "epoch-making" significance.

These advances rest on huge "cornerstone models" (also called foundation models), which give AI capabilities their creators never foresaw. Past AI models were crude, built one at a time as in a handicraft workshop. After years of refinement, the potential of cornerstone models has become a clear trend.

Artificial intelligence is about to enter the era of industrial mass production.

Large models: the "layoff machine"

In May, the AI drawing tool Midjourney released its beta, and even The Economist couldn't resist trying it out. The illustration Midjourney produced for an Economist report is full of inspiration and has a strong modernist style. Keep in mind that it was generated from a very abstract concept: the article was not about something concrete like "a woman holding a cat".

Midjourney's developers include Somnai, creator of Disco Diffusion, and the YouTuber Quick-Eyed Sky. These applications all work on a "you say, I draw" basis: enter a few keywords and they generate a picture. Disco Diffusion is very popular, but compared with DALL-E and Midjourney it has a higher barrier to entry, because you have to adjust the code and parameters yourself. The more popular applications are foolproof: you just write a few words.

Playing "you say, I draw" is addictive. On Twitter, people's renderings of Musk, Trump, Scarlett Johansson and Marilyn Monroe all came out "broken", every one of them with an "Elm Street" look. In China, the "Domo Master Painter" launched by the Dimo Community on Children's Day still produced very interesting pictures even when I deliberately tried to trip it up.

How good the output is depends entirely on the underlying AI model, and building such a model has become an expensive arms race.

The cornerstone models available today include OpenAI's GPT-3, with 175 billion parameters and a training cost of more than $10 million; Google's Switch Transformer, with even more parameters than GPT-3; Microsoft and Nvidia's MT-NLG, with 530 billion parameters; and Huawei's Pangu model, a Chinese-language pre-trained model with around 100 billion parameters.

When GPT-3 was "born" in 2020, it was nicknamed the "layoff machine". To many observers it seemed to pass the classic benchmark for artificial intelligence, the Turing test, answering questions fluently. Applications built on GPT-3 for writing, translation, design, calculation and more can take over work that humans used to do.

One person even asked GPT-3 to write a short essay, "On the Importance of Twitter". GPT-3 wrote it smoothly and naturally, and even used a seasoned writer's trick, the "Spring and Autumn brushwork" of veiled criticism, to slip in some sarcasm: it described Twitter as "the social software everyone uses, full of personal attacks".

The advantages of cornerstone models are obvious. First, with huge numbers of parameters and vast amounts of training data, scaling up does not run into diminishing returns; instead it keeps improving the AI's capabilities and yields breakthroughs in computing. Second, they use few-shot learning: rather than "learning from scratch" for every new task, the model can pick up a task from just a handful of examples and carry it out automatically.

Cornerstone models are the equivalent of a "general-purpose technology". In the 1990s, economic historians identified general-purpose technologies such as the steam engine, the printing press and the electric motor as key drivers of long-term productivity growth. Such technologies share features like rapid improvement of the core technology, broad applicability across sectors, and spillover effects, which together stimulate continuous innovation in products, services, and business models.

Today's cornerstone models already have the same characteristics.

Neural networks + self-supervised learning: astonishing skills

Today, more than 80% of AI research focuses on cornerstone models. Tesla, for example, is building a massive cornerstone model for autonomous driving.

To understand what Li Feifei, co-director of Stanford University's Institute for Human-Centered Artificial Intelligence, calls a "phase change" in artificial intelligence, we need to know how cornerstone models differ from earlier AI models.

All of today's machine learning models are based on "neural networks", software that loosely mimics the way brain cells interact. Their parameters are the weights of the connections between the virtual neurons, and the model is "trained" by trial and error, adjusting those weights until it produces the output the developer wants.
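To make "weights" and "trial and error" concrete, here is a minimal sketch of a single artificial neuron with two connection weights and a bias, trained to reproduce the logical OR function. It is an illustrative assumption, not how any model named in this article is built: the function names, learning rate and epoch count are hypothetical choices, and real models stack millions or billions of such weights. Each training step guesses an output, compares it with the target, and nudges the weights to reduce the error.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs=2000, lr=0.5):
    """Hypothetical toy trainer: one neuron, two inputs, logistic loss."""
    w = [0.0, 0.0]  # connection weights: the neuron's "parameters"
    b = 0.0         # bias term
    for _ in range(epochs):
        for (x1, x2), target in samples:
            out = sigmoid(w[0] * x1 + w[1] * x2 + b)  # guess
            err = out - target                        # compare with the right answer
            # improve: step each parameter against the gradient of the log-loss
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


def predict(w, b, x1, x2):
    return sigmoid(w[0] * x1 + w[1] * x2 + b)


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
print([round(predict(w, b, *x)) for x, _ in OR_DATA])  # [0, 1, 1, 1]
```

Nobody writes the rule "output 1 if either input is 1" into the code; the weights drift toward it purely from repeated correction.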

For decades, neural networks stayed in the experimental stage, with few practical applications. Only in the late 2000s and early 2010s did supercomputers become powerful enough, and the internet supply enough training data, for that to change. With better hardware and more data, neural networks began to handle tasks that had previously been "impossible": translating text, interpreting voice commands, and recognizing the same face in different pictures.

In the 2010s in particular, machine learning, like cryptocurrency mining rigs, turned to GPUs. A GPU has thousands of stream processors that can perform large numbers of repetitive, simple operations in parallel, and it is inexpensive: far cheaper than running a supercomputer.

The breakthrough came in 2017, when Google researchers introduced a new architecture, the Transformer, which later underpinned models such as Google's BERT. Instead of processing data sequentially, one item after another, it uses a mechanism called attention that "looks at" all of the input at the same time.
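The idea of "looking at" everything at once can be illustrated with a toy version of self-attention, the mechanism at the heart of the Transformer. The sketch below assumes made-up two-dimensional word vectors and simplifies heavily: real Transformers learn separate query, key and value projections and use many attention heads, all omitted here. What it does show is that every position's output is computed from the whole input, without waiting for the previous position's result.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Toy scaled dot-product self-attention where queries = keys = values = inputs."""
    d = len(vectors[0])
    outputs, weight_rows = [], []
    # Each iteration reads only the full input, never a previous iteration's result,
    # so all positions could be computed in parallel (unlike a recurrent network).
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)  # how much this position attends to each position
        mixed = [sum(wt * value[j] for wt, value in zip(weights, vectors)) for j in range(d)]
        outputs.append(mixed)
        weight_rows.append(weights)
    return outputs, weight_rows


# Hypothetical 2-dimensional embeddings for a three-word input.
tokens = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [1.0, 1.0]}
outputs, weights = self_attention(list(tokens.values()))
for word, row in zip(tokens, weights):
    print(word, [round(x, 2) for x in row])
```

In this toy input, "sat" (whose vector overlaps with both others) puts the most weight on itself and equal weight on "the" and "cat".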

Just as important, models like BERT are not trained on pre-labeled datasets but with "self-supervised learning". As the model works through vast amounts of text, it learns to fill in hidden words on its own, or to infer meaning from context, much like the fill-in-the-blank exam questions we all grew up with. The approach is arguably close to how the human brain learns: like a reader who spots what interests them at a glance, the model does not have to process and digest the text word by word.
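The fill-in-the-blank idea can be sketched in a few lines. The example below is a deliberately crude, count-based stand-in for a neural network, and the corpus and function names are hypothetical; BERT itself hides about 15% of the tokens at random and trains a Transformer to predict them. The key point survives the simplification: the "right answers" come from the raw text itself, so no human labeling is needed.

```python
from collections import Counter, defaultdict

MASK = "[MASK]"


def make_cloze_examples(sentence):
    """Turn one unlabeled sentence into training pairs by hiding each word in turn."""
    words = sentence.split()
    examples = []
    for i, word in enumerate(words):
        masked = words[:i] + [MASK] + words[i + 1:]
        examples.append((" ".join(masked), word))  # the label comes from the text itself
    return examples


def train_context_counts(corpus):
    """Hypothetical toy 'model': count which word appears between each (left, right) pair."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for i in range(1, len(words) - 1):
            counts[(words[i - 1], words[i + 1])][words[i]] += 1
    return counts


def guess(masked_sentence, counts):
    """Guess the hidden word from its immediate neighbours; None if the context is unseen."""
    words = ["<s>"] + masked_sentence.split() + ["</s>"]
    i = words.index(MASK)
    candidates = counts.get((words[i - 1], words[i + 1]))
    return candidates.most_common(1)[0][0] if candidates else None


CORPUS = ["the cat sat on the mat", "the dog sat on the rug", "a cat sat on a chair"]
counts = train_context_counts(CORPUS)
print(make_cloze_examples("the cat sat"))
print(guess("the dog [MASK] on the rug", counts))  # sat
```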

After billions of guess-compare-improve cycles, the models become remarkably capable.

Nor are these techniques limited to text: neural networks and self-supervised learning can be applied to pictures, videos, and even databases of large molecules. For an image model such as DALL-E, what the model guesses is not the next combination of letters but the next cluster of pixels.
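The "next cluster of pixels" idea can be illustrated by turning an image into a sequence of tokens, after which the training pairs look exactly like those for text. This is a hypothetical sketch: it quantizes the average brightness of each 2x2 block into one of four tokens, whereas the original DALL-E used a learned image tokenizer and DALL-E 2 generates images with a diffusion model instead.

```python
def image_to_patch_tokens(image, patch=2, levels=4):
    """Hypothetical tokenizer: split a grayscale image (rows of 0-255 ints) into
    patch x patch blocks, read left-to-right, top-to-bottom, and map each block's
    mean brightness to one of `levels` integer tokens."""
    h, w = len(image), len(image[0])
    tokens = []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            block = [image[r + i][c + j] for i in range(patch) for j in range(patch)]
            mean = sum(block) / len(block)
            tokens.append(min(int(mean * levels / 256), levels - 1))
    return tokens


def next_token_pairs(tokens, context=2):
    """Build (previous tokens, next token) training pairs; works the same for text or pixels."""
    return [(tuple(tokens[i - context:i]), tokens[i]) for i in range(context, len(tokens))]


# A 4x4 checkerboard of dark and bright 2x2 blocks.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]
print(next_token_pairs(list("cats")))                # letters
print(next_token_pairs(image_to_patch_tokens(image)))  # pixel clusters
```

The same "predict what comes next" objective applies to both sequences; only the tokenizer changes.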

Applications built on large models are just as varied. Besides the literary and artistic tools mentioned above, Google's DeepMind has launched Gato, a single model that can play video games, control a robotic arm and write text. Meta's "world model", originally intended to provide context for the metaverse, appears to have stalled.

Cool stuff or Turing trap

The boom in cornerstone models is certainly good news for chipmakers. Nvidia, which is actively involved in making cornerstone models, is already one of the world's most valuable semiconductor designers, with a market value of $468 billion.

Startups also stand to benefit. Birch AI automates the documentation of healthcare-related calls; Viable uses cornerstone models to sift through customer feedback; Fable Studio uses AI to create interactive stories; and Elicit offers AI tools that help people find answers to their research questions in academic papers.

Big companies have their own plans. IBM says cornerstone models can analyze massive amounts of enterprise data, even finding clues for cutting costs in sensor readings from the shop floor. The head of Accenture's artificial intelligence practice predicts that "industrial cornerstone models" will soon emerge to provide more accurate analysis for traditional clients such as banks and automakers.

Although the future looks bright and AI painting has captured the public's enthusiasm, many researchers still urge a step back. Some argue that large models do not truly understand the vast data they rely on, and that part of what they produce is mere "random repetition"; meanwhile, biases in that data can lead models into "hallucinations". Early last year, researchers found that when GPT-3 was asked to complete the sentence "Two Muslims walked into a...", more than 60% of its completions involved violence.

While reading about "Domo Painter" in the "Dimo Community", I occasionally saw users on its homepage sending indecent prompts to the AI. CEO Lin Zehao told Aifaner that the company generally runs background keyword filtering and manual review at the same time to keep the community healthy. AI painting tools such as DALL-E 2 face the same predicament, and risk the same fate as Microsoft's XiaoIce (Xiaobing), which ended up spewing profanity.

Erik Brynjolfsson, an economist at Stanford University, worries that a collective obsession with large models that have human-like capabilities could lead society as a whole into a "Turing trap". Computers have long done many things humans cannot; now they increasingly do what humans can do, and do it better. As a result, more people lose their jobs, wealth and power become more concentrated, and inequality grows.

His concerns are justified. Large models cost a great deal of money, far more than ordinary people can invest, so their backers are either technology giants or states. Cornerstone models will become the underlying platform for a whole range of services, and platforms are subject to a "Matthew effect": the winner takes all, or, if not quite all, leaves little for anyone else.

Artists, however, love these "cool things". The British composer Reeps One (Harry Yeff) spent hours feeding a model metronome rhythms, until it learned to respond rhythmically to his voice. He predicts that "many artists will use this tool to do their work better".

As a journalist, I am also a big fan of iFLYTEK's voice transcription app. Transcribing a two-hour interview recording used to be enough to make a mentally healthy adult break down on the spot. Now I just wait for the software to produce a text document. It can't be used directly as a finished "dialogue", but it is good enough as raw material.

Recently I have also been looking into how to use GPT-3 to train my own writing model. Perhaps my next articles will be written by my AI.

Like my work? Don't forget to clap and show your support, so I know you are with me on this creative journey. Let's keep the enthusiasm going together!
