The Singularity is Coming: The Real Issue of the Present

Strayn

Large language models are not AGI.

The use of complex tools and language is a distinctly human specialty, and GPT4 can do both (Timo Schick et al., 2023), which is frightening.

On the road of machine evolution, language models can be said to have taken a shortcut. Compared with images and music, far more human knowledge is preserved as language and text. This is not only because language is ancient, but also because language is intrinsically bound up with human thinking.

Wittgenstein wrote that "the limits of my language mean the limits of my world". The world he discussed is covered by language and described by language, and language contains the truth of that world. This inspired symbolic AI to build artificial intelligence starting from language. The symbols humans like most are usually tree structures, which may have something to do with our ancestors. Jewish Kabbalah uses the Tree of Life to explain the relationship between the universe, God and the kingdom; the Vikings had the world tree (Yggdrasil); Hinduism has the wish-fulfilling tree (kalpavriksha); programmers use binary trees; and symbolic AI developed decision trees. In language modeling, the symbolic approach uses a syntax tree to break a sentence into subject, predicate, object, attributive, adverbial and complement, and then a semantic tree to find the meaning of each keyword, which is essentially like looking words up in a dictionary. This brought good progress, but human language is very complex. The noun "bank", for example, can mean a riverbank or a financial institution, and a dictionary lookup may pick the wrong sense.

Fortunately, in his later years Wittgenstein proposed the idea of "language games": the meaning of a word lies in its use in the language. This inspired the connectionist study of context. Learning context requires no data annotation. Connectionists put the dictionaries down and used neural networks to brute-force how each word is used across a large amount of text, such as which words often follow one another and which words often appear in the same sentence. This gave birth to the once very popular Word2Vec. The fill-in-the-blank game that GPT4, the protagonist of today's topic, plays in training (predicting the next word from the words before it) continues the same idea.
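The "meaning is use" idea can be made concrete in a few lines. Word2Vec itself trains a shallow neural network; the minimal sketch below swaps in raw co-occurrence counts within a small window (an older, simpler distributional method) and compares words by cosine similarity. The toy corpus and the window size are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict
from math import sqrt

def cooccurrence_vectors(sentences, window=2):
    """For each word, count the words appearing within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical toy corpus: no labels, only usage.
corpus = [
    "i drink hot coffee every morning",
    "i drink hot tea every morning",
    "i drive my red car every morning",
    "we sat on the river bank",
    "the bank holds cash",
]
vecs = cooccurrence_vectors(corpus)
print(cosine(vecs["coffee"], vecs["tea"]))  # 1.0: identical contexts
print(cosine(vecs["coffee"], vecs["car"]))  # 0.5: partly shared contexts
print(dict(vecs["bank"]))                   # mixes river and money contexts
```

"coffee" and "tea" end up with identical context vectors, "car" shares only part of their context, and the single vector for "bank" absorbs both its river and its money usages. Context-sensitive models such as GPT go a step further by recomputing a word's representation in every sentence.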

GPT4 hallucinates: sometimes it lies, sometimes it tells the truth. Part of the problem comes from the training data itself, which is the joint creation of countless netizens, and human creation is itself a mixture of truth and lies. A large model relies on big data that contains both truth and falsehood, so it can only learn what they have in common: true or false, it is all human language, and the model only learns to speak. Can this be improved? In fact, what separates the lukewarm GPT3 from ChatGPT, which went viral across the internet, is precisely this layer of authenticity. The newer model lets people interact with it and guide it, so that it learns to speak "logically" step by step (Long Ouyang et al., 2022). But some logical problems still cannot be guided correctly. Here is a batch data-generation scheme that may be more efficient than manual labeling:

Consider a world constructed by language, in which a philosopher GPT and a scientist GPT conduct a Socratic inquiry into the structure of the world. The philosopher GPT has been fine-tuned to ask questions; the scientist GPT has been fine-tuned to generate experimental-design templates.

- The philosopher generates a random question.

- The scientist tries to answer the question and forms a hypothesis; at the same time, it generates an experimental design.

- Code executes the experimental design, including a Google search; based on the search results, the scientist summarizes them into an experimental result.

- If the experimental result is close to the hypothesis, penalize the philosopher for asking a question the scientist could already answer.

- Otherwise, penalize the scientist, because its conjecture was not accurate enough.

- The philosopher continues to ask the next question based on the previous question and the experimental results of the scientist.

- If the experimental design code cannot be executed, penalize the scientist directly.
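The reward rules above can be sketched as a single round of the loop. This is a minimal sketch under stated assumptions: `ask`, `answer`, `execute` and `summarize` are hypothetical stand-ins for the fine-tuned philosopher, the fine-tuned scientist, the code runner and the scientist's summarization step, and word-overlap (Jaccard) similarity with a 0.5 threshold is an assumed proxy for "the result is similar to the hypothesis".

```python
from typing import Callable, Dict, List, Optional, Tuple

def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity: a crude, assumed proxy for comparing two texts."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0

def socratic_round(
    ask: Callable[[List], str],                  # philosopher GPT (hypothetical)
    answer: Callable[[str], Tuple[str, str]],    # scientist GPT: (hypothesis, design)
    execute: Callable[[str], List[str]],         # runs the design, e.g. a search plan
    summarize: Callable[[str, List[str]], str],  # scientist turns evidence into a result
    history: List[Tuple[str, Optional[str]]],
    threshold: float = 0.5,
) -> Tuple[str, Dict[str, int]]:
    """Play one round; return the question and a penalty per role (1 = punished)."""
    question = ask(history)
    hypothesis, design = answer(question)
    penalties = {"philosopher": 0, "scientist": 0}
    try:
        evidence = execute(design)
    except Exception:
        penalties["scientist"] = 1  # the experimental design could not be executed
        history.append((question, None))
        return question, penalties
    result = summarize(question, evidence)
    if jaccard(result, hypothesis) >= threshold:
        penalties["philosopher"] = 1  # the question was already answerable
    else:
        penalties["scientist"] = 1  # the conjecture missed the evidence
    history.append((question, result))  # the next question builds on this
    return question, penalties

# Hypothetical stubs in place of real models and a real search.
history = []
ask = lambda h: "why is the sky blue" if not h else "why are sunsets red"
answer = lambda q: ("air molecules scatter blue light", "search: rayleigh scattering")
execute = lambda design: ["rayleigh scattering makes the sky blue"]
summarize = lambda q, ev: "air molecules scatter blue light"
print(socratic_round(ask, answer, execute, summarize, history))
# ('why is the sky blue', {'philosopher': 1, 'scientist': 0})
```

Because each round appends to `history`, the philosopher's next question can depend on everything asked and found so far, and every round yields a labeled trajectory without a human annotator.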

Learning to Google this way may sound like a headache. The method essentially synthesizes a new dataset along the behavioral path of "Google search", which to some extent avoids the error accumulation of autoregressive models, much like the Teacher Forcing technique used to train them. In addition, by distinguishing between recognized databases and unknown data sources, a scientist GPT trained this way should be better at telling facts from misinterpretations.
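Why error accumulation matters, and what Teacher Forcing changes, can be shown with a toy next-token "model". This is a minimal sketch, not a neural network: the model is an assumed function that should map n to n + 1 but makes exactly one mistake. In free-running generation its own wrong output becomes the next input; under teacher forcing every step is fed the ground-truth previous token.

```python
from typing import Callable, List

def free_running(model: Callable[[int], int], start: int, length: int) -> List[int]:
    """Inference-style rollout: each prediction is fed back as the next input."""
    out, token = [], start
    for _ in range(length):
        token = model(token)
        out.append(token)
    return out

def teacher_forced(model: Callable[[int], int], start: int, targets: List[int]) -> List[int]:
    """Training-style rollout: each step is fed the ground-truth previous token."""
    inputs = [start] + targets[:-1]
    return [model(tok) for tok in inputs]

def count_errors(predicted: List[int], targets: List[int]) -> int:
    """Number of positions where the prediction differs from the target."""
    return sum(p != t for p, t in zip(predicted, targets))

# Toy "model": should map n -> n + 1, but wrongly maps 2 -> 12.
model = lambda n: 12 if n == 2 else n + 1
targets = [1, 2, 3, 4, 5, 6]

print(free_running(model, 0, 6))          # [1, 2, 12, 13, 14, 15]: one slip, four errors
print(teacher_forced(model, 0, targets))  # [1, 2, 12, 4, 5, 6]: the slip stays local
```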

Similar learning methods may eventually let the model understand most of the language world, but language itself has other problems. On the one hand, recorded language does not cover every scene. Much experiential common sense is never spelled out, so the model may never grasp it, and many everyday conversations are never written down for a machine to use. On the other hand, Heidegger pointed out in Being and Time that language itself involves "concealment": in daily life humans attend only to appearances and overlook the essence of the world and the truth of existence, so language may hide or distort how the world really is. Socrates' solution was to hold deeper conversations with others and, through communication, reach as universal a consensus as possible to serve as knowledge, which amounts to constructing an objective language world. But the world a language model lives in is clearly not this objective language world; it is the subjective superposition of all kinds of people.

What’s worse, humans don’t really understand the real world behind language.

A seemingly perfect mathematical model always has an error term when applied in practice, standing for everything in the world that has not yet been discovered. Buddhism describes the world as Indra's Net spread across time and space, in which everything is connected. Unfolding along Indra's Net, the world takes on its various forms ("color"); each of us subjectively distorts "color" into what we see, the "appearance"; but the appearance cannot reveal the real structure of Indra's Net (Dharma). How can we understand this inability to understand?

Consider this thought experiment:

Given how GPT models are deployed, GPT's time consists of API calls and computations, and each process is a new life. Imagine that the human world were structured in a similar way: what would happen when our creator pressed the pause button?

The answer is that nothing happens. Everything stops synchronously, including people's thinking, so there is no reference against which to feel any change in the world. GPT would not notice such a pause either. But suppose we attach a timestamp from our world to every message we send GPT, and then pause for 24 hours. When we send the next message, GPT can perceive a change from the timestamp. It will not necessarily interpret this change as "time", though: the change lacks time's usual continuity, GPT cannot run experiments to reproduce it, and it has no other reference to confirm the conjecture.

This timestamp is a kind of "color" with no apparent regularity. But suppose other features appear every time it pauses and restarts: say it notices that a cache counter jumps sharply, and that the change in timestamp correlates statistically with the jump in cache. Then it may have noticed a certain "appearance". Yet it cannot see the real cause behind it: humans eating hot pot, singing, and pressing pause whenever they please along with the music. Likewise, if our world is played in a similar way, we will never see the "law" behind the "appearance".

In response to this problem, modern science combines Auguste Comte's positivism with Popper's falsificationism. In Deng Xiaoping's words, it is "crossing the river by feeling the stones". Human rationality and cognition are weak before the world. We can launch spacecraft not because our mathematical formulas are flawless, but because we monitor a rocket's position, speed, and temperature in real time and correct and fine-tune it whenever it deviates. Abstract symbols and logic were invented to represent the world, not the other way around. When mathematics cannot work out the angle between two sides, a human can take out a protractor and measure it, but GPT cannot. Our deeper understanding of the real world comes not from language but from being immersed in the world.
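The rocket example is the difference between open-loop and closed-loop control. In the minimal sketch below (a 1-D toy, not real guidance software), every step carries an unmodelled disturbance standing in for the "error term". The open-loop run trusts the formula and drifts; the closed-loop run measures its position every step and applies a proportional correction. The target, gain and disturbance values are assumptions.

```python
from typing import Optional

def fly(steps: int, target: float, disturbance: float, gain: Optional[float] = None) -> float:
    """Move a 1-D 'rocket' from 0 toward `target` and return its final position.

    gain=None -> open loop: follow the planned per-step move from the formula.
    gain=k    -> closed loop: measure the position and correct by k * error.
    """
    position = 0.0
    planned_move = target / steps
    for _ in range(steps):
        if gain is None:
            command = planned_move
        else:
            command = gain * (target - position)  # feedback from measurement
        position += command + disturbance         # the world adds what the model missed
    return position

open_loop = fly(steps=10, target=100.0, disturbance=1.0)
closed_loop = fly(steps=10, target=100.0, disturbance=1.0, gain=0.5)
print(f"open loop ends at {open_loop:.1f}, closed loop at {closed_loop:.1f}")
# open loop ends at 110.0, closed loop at 101.9
```

A purely proportional controller leaves a small steady-state offset (here it settles near 102, not 100); adding an integral term would remove it. The point is only that measuring and correcting beats trusting the formula.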

ChatGPT is an AI magic trick.

The earliest artificial intelligence used symbolic, rule-based techniques: thousands of lines of if-else code forming logical rules. Placed inside a black box, it looks quite intelligent. But once you understand how it works, it feels like bullshit.
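The if-else style can be sketched in the spirit of Joseph Weizenbaum's ELIZA (1966). The rules below are illustrative assumptions, not ELIZA's actual script; an ordered rule table where the first match wins is equivalent to a chain of if-elif branches.

```python
import re

# Ordered (pattern, reply template) rules; the first match wins, like an if-elif chain.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE), "Tell me more about your family."),
]

def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, filled with the captured text."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            captured = [g.rstrip(".!?") for g in match.groups()]
            return template.format(*captured)
    return "Please go on."  # fallback when no rule fires

print(respond("I feel lonely today."))  # Why do you feel lonely today?
print(respond("My mother called."))     # Tell me more about your family.
print(respond("The weather is nice."))  # Please go on.
```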

The principle behind GPT is quite intuitive (Ashish Vaswani et al., 2017), but we will not go into detail here, to avoid distracting from the key point. It is enough to know that at its core is an attention mechanism that improves the model's ability to extract context. The reason it feels so "smart" is that it defies common sense. People easily handle addition and subtraction within ten, and some can manage up to a thousand. But GPT, a cousin of the calculator, easily handles orders of magnitude that would blow people's minds.
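For readers who want to see the mechanism anyway, here is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V from Vaswani et al. (2017), written with plain Python lists. It is a minimal single-head sketch: real models add learned projection matrices, many heads, masking and batching, all omitted here, and the toy vectors are assumptions.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, weights_all = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much this query attends to each position
        weights_all.append(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, weights_all

# Three context positions with 2-d keys; the query resembles the first key most.
keys = [[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
out, w = attention([[1.0, 0.0]], keys, values)
print([round(x, 3) for x in w[0]])
```

Each output row is a weighted mix of the value vectors, with the weights set by how well the query matches each key: context extraction reduced to dot products and a softmax.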

It is more an AI magic trick than a super artificial consciousness. Hume described the basic principle of this trick more than two hundred years ago: so-called "creation" is essentially a recombination of known things. A unicorn is a horse plus a horn; a centaur is a man plus a horse. GPT stores a huge amount of human language and files the key information (Ashish Vaswani et al., 2017) from all kinds of scenarios (Alec Radford et al., 2018) in a huge (Tom Brown et al., 2020) high-dimensional filing cabinet. The prompt we give GPT lets it locate many similar conversations that have happened before. As each word is generated, a similar search happens based on the context, so the uncertainty of the overall result is amplified, and from outside the black box it looks like replies from different angles (Aman Madaan et al., 2022).
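How per-token randomness turns into replies "from different angles" can be sketched with temperature sampling in an autoregressive loop. The three-token vocabulary and the fixed logits table standing in for the model are assumptions; a real model computes logits from the whole context with a Transformer. At temperature 0 the loop is greedy and always produces the same continuation; above 0, different seeds can diverge early, and each divergence changes the context for every later token.

```python
import math
import random
from typing import Callable, List

def sample_next(logits: List[float], temperature: float, rng: random.Random) -> int:
    """Pick the next token index; temperature 0 means greedy (argmax) decoding."""
    if temperature <= 0:
        return max(range(len(logits)), key=logits.__getitem__)
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalised softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate(next_logits: Callable[[List[int]], List[float]], prompt: List[int],
             steps: int, temperature: float, seed: int) -> List[int]:
    """Autoregressive loop: every sampled token becomes context for the next one."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(steps):
        tokens.append(sample_next(next_logits(tokens), temperature, rng))
    return tokens

# Hypothetical stand-in for a model: logits depend only on the last token.
TABLE = [[2.0, 1.0, 0.5], [0.5, 2.0, 1.0], [1.0, 0.5, 2.0]]
toy_model = lambda tokens: TABLE[tokens[-1]]

print(generate(toy_model, [0], steps=5, temperature=0, seed=1))  # [0, 0, 0, 0, 0, 0]
replies = {tuple(generate(toy_model, [0], 5, 1.0, seed)) for seed in range(20)}
print(len(replies), "distinct continuations from 20 seeds")
```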

Like all magic tricks, this AI magic trick depends on human psychology and unexamined "common sense". Many people have had the experience of waking up one day with a brilliant idea, feeling it was moving and unprecedented, and crying out inwardly: "How amazing I am!" We cannot imagine, and do not want to believe, that at this very moment billions of rooms around the world are playing out similar scripts. Within our cognitive reach everyone seems different, but statistically you can always find similar conversations, similar scenes, similar roles and similar people. This is the true face of the world at the macro scale. ChatGPT's prompt mechanism is like the mirror so often used as a magic prop. We think it is smart because, as we push a conversation deeper, it can always find a similar conversational trend from which to piece together a reply. Can this process be called intelligence? I think we need to define intelligence first. The process can produce innovation, because we may never have discussed the same words with people from other backgrounds, and crossing boundaries is often the source of innovation. This is indeed a kind of intelligence, and a very useful one. But it is not human intelligence; it is just a huge language store. Humans are limited by the structure of the brain. With limited resources, they observe the world, pose questions and try to solve them. Because of this limitation we cannot see all the answers, so we stop and dig deeper into known viewpoints, advancing our thinking by citing counterexamples or finding commonalities between problems. This way of solving problems goes deeper, and grasping the deeper level lets us better bypass the "surface" and reach the more stable inner "appearance" of Indra's Net. The conclusions drawn this way are often more concise and generalize better across different problems.

However, GPT's solution is more of a brute-force one. It first reads the entire "language world", saves it, and builds paths for extracting those memories. In a sense, this brute-force ability makes it prone to overlooking subtleties. The ability is beneficial: it helps transfer knowledge humans already have from one person to another. But this way of acquiring knowledge cannot generalize to the real world. It cannot deal with problems that no one in all of humanity has yet solved, and humans are capable of dealing with such problems.

With ChatGPT, OpenAI delivered an unprecedented psychological experience that reshaped people's intuition about the macro world. This panorama of all living beings on the eve of the singularity, played out across the internet, will surely be recorded in the history of human technology.

Human beings exist in the world.

Why can humans deal with unknown problems? Heidegger's Being and Time, published in 1927, may offer the best answer. He treats the human being as Dasein, a being with "subjectivity" that differs from all other beings. Dasein can "exist in the world (Welt) as an open being." Dasein is special because it has a structure called temporality, which allows us to understand ourselves and experience the world through practice. Note that temporality here is not the continuous timeline of physics, but a structure in which both the past and the future are contained in the "moment". Specifically:

The past is not a certain moment "back there" on the timeline, but Dasein's "interpretation" of a "past" that seems to exist in memory. It may contain true or false memories of past moments, as well as legitimate and illegitimate understandings of them. In this sense the past is constructed in the act of looking back; it is Dasein's basic foundation, used to feel the "present" and understand the "future".

The future builds on the "past", but it is not the next moment on a physical timeline either. It is mainly Dasein "moving forward" towards possibilities. Specifically, Dasein can actively throw "its own existence" towards these possibilities, even at a risk, and even if moving towards one possibility closes off others; the uniqueness created by such choices is what makes Dasein's existence meaningful. This ability is called projection. GPT can "plan" the future in the sense of making predictions, but this "next moment" on the physical timeline is not the "future" Heidegger talked about. It is more like a retrieval carried out along a computational path that was fixed when the parameters were set in the "previous moment": looking back at the "past" rather than running towards the "future".

The moment is not the present instant in time, but the experience of dynamic, ever-changing phenomena. Each phenomenon reflects an internal relationship between us (Dasein) and things (beings), and the totality of these relationships is the world. Dasein cares about things, and this care connects it with the world. GPT's attention, by contrast, is passive, mechanical, and rigidly tied to the model's call interface: it executes commands when the interface light is on and shuts down when the light is off. Its multi-head attention mechanism lets it attend to the "relationships between contexts" in the text sent through the interface, but this attention is encoded in a fixed neural network and in the random numbers generated by the computer it runs on. It never asks what essence lies behind the "appearance".

It is a bit confusing, but GPT does not have the "temporality" of Dasein, and all the reasoning points to its lacking a "self". This stems from its structure: its relationship with the world is fixed during training and deployment. That structure comes from the code that trained it, the huge neural network frozen after training, and the seemingly random but actually deterministic, rather boring pseudo-random numbers the computer generates. GPT is just a "computational process" running on top of these fixed elements. These computational processes, GPT's life processes, cannot continuously adjust and adapt to the outside world. It lacks openness, which may also be why it has no motivation to question the essence behind appearances. Without an open relationship with the world, it cannot be like humans. So how can AGI be given a "self"?
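The claim that the computer's random numbers are "seemingly random but actually boring" can be checked directly. Python's `random` module uses the Mersenne Twister, a deterministic pseudo-random generator: the same seed replays exactly the same sequence. Whether a particular deployment fixes its sampling seed is an assumption outside this essay; the sketch only demonstrates the determinism itself.

```python
import random

def sampling_run(seed: int, n: int = 5) -> list:
    """Draw n numbers from a Mersenne Twister generator seeded with `seed`."""
    rng = random.Random(seed)  # independent generator; does not touch global state
    return [rng.random() for _ in range(n)]

first = sampling_run(42)
replay = sampling_run(42)
other = sampling_run(43)
print(first == replay)  # True: same seed, same "randomness"
print(first == other)   # False: a different seed gives a different sequence
```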

AGI needs a body.

Maurice Merleau-Ponty explored the relationship between the body and the world in Phenomenology of Perception, published in 1945. He stressed the importance of bodily perception and experience and opposed traditional mind-body dualism. For him, the body is the most basic and original way in which humans perceive the world. Bodily perception and experience are not simply sensory input; they are bound up with the subject's consciousness and emotions. Nor do they passively receive information from the world: they actively interact with it and are an indispensable part of human cognition.

Simply put, artificial intelligence needs a body.

In fact, the field of artificial intelligence has long tried to give AI a body. One of the earliest attempts was the genetic algorithm, developed by John Holland in the 1960s. As the name suggests, it uses Darwinian natural selection to screen out small models that show intelligence. This sounds great but is extremely inefficient.
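Holland-style selection can be sketched in a few dozen lines. This minimal sketch evolves bit strings on the toy "OneMax" problem (fitness is the number of 1 bits) using tournament selection, one-point crossover, bit-flip mutation and elitism; the problem and all parameters are assumptions chosen for illustration. Evolving "small models with intelligence" would need a far richer genome and a far costlier fitness test, which is where the inefficiency bites.

```python
import random
from typing import List, Tuple

Genome = List[int]

def onemax(genome: Genome) -> int:
    """Toy fitness: the number of 1 bits."""
    return sum(genome)

def evolve(length: int = 20, pop_size: int = 30, generations: int = 40,
           mutation_rate: float = 0.05, seed: int = 0) -> Tuple[Genome, List[int]]:
    """Minimal GA with tournament selection, one-point crossover, mutation and elitism."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    best_per_gen = [max(map(onemax, population))]
    for _ in range(generations):
        elite = max(population, key=onemax)
        children = [elite[:]]  # elitism: the best genome survives unchanged
        while len(children) < pop_size:
            mum = max(rng.sample(population, 3), key=onemax)  # tournament of three
            dad = max(rng.sample(population, 3), key=onemax)
            cut = rng.randrange(1, length)                     # one-point crossover
            child = mum[:cut] + dad[cut:]
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
        best_per_gen.append(max(map(onemax, population)))
    return max(population, key=onemax), best_per_gen

best, history = evolve()
print(f"best fitness: {history[0]} at start, {history[-1]} after {len(history) - 1} generations")
```

Elitism guarantees that the best fitness never drops from one generation to the next; everything else is blind variation plus selection.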

The body is not only an appendage of the mind, but also the way we exist. In Heidegger's view, the body is not only a physical entity, but also the basis of our life and actions in the world, the carrier of our interaction with the world, and the relationship between our body and the world is a practical one.

In the 1980s, roboticist Rodney Brooks proposed the concept of "embodiment": placing robots in real-world environments so that they acquire knowledge and experience through perception and action. This is closer to the individual adaptation described by Lamarckism, and it developed into "embodied cognition". The reinforcement learning behind AlphaGo and AlphaZero, which became famous in recent years, can be seen as a computational implementation of embodied cognition.
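The perceive-act-reward loop at the heart of reinforcement learning can be shown with tabular Q-learning. AlphaGo and AlphaZero used far more elaborate machinery (deep networks plus Monte Carlo tree search), so this is a deliberately simpler technique swapped in for illustration; the five-cell corridor environment and the hyperparameters are assumptions.

```python
import random
from typing import List, Tuple

def step(state: int, action: int, n_states: int = 5) -> Tuple[int, float, bool]:
    """Hypothetical corridor: action 0 moves left, 1 moves right; the right end pays 1."""
    nxt = max(0, state - 1) if action == 0 else min(n_states - 1, state + 1)
    done = nxt == n_states - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, n_states: int = 5, seed: int = 0) -> List[List[float]]:
    """Learn action values purely from acting in the environment and observing rewards."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)  # explore, or break ties randomly
            else:
                action = 0 if q[state][0] > q[state][1] else 1  # exploit
            nxt, reward, done = step(state, action, n_states)
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])  # Q-learning update
            state = nxt
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(4)]
print(policy)
```

Nothing about "go right" is written into the code; the preference emerges from trial, error and reward, which is the sense in which reinforcement learning learns by acting.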

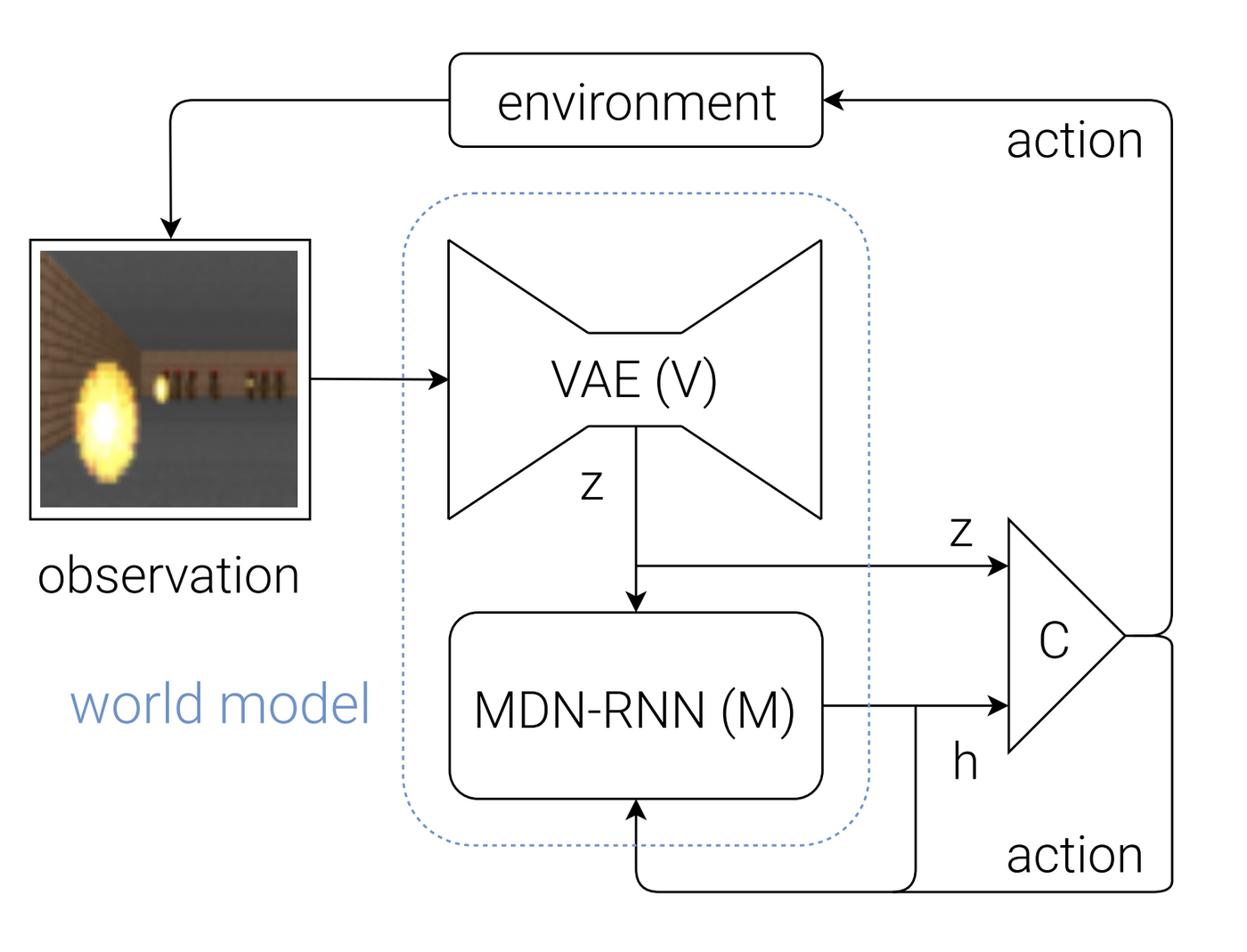

Reinforcement learning by itself is not fully embodied artificial intelligence, because the model is still trained first and deployed afterwards. What is missing is "online machine learning": after deployment, the model keeps collecting samples in the real world and keeps learning, which gives the robot openness. Coincidentally, the combination of the two is exactly the world model that Yann LeCun champions.
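Online learning means updating after every new observation instead of freezing the model after training. The minimal sketch below assumes a one-parameter linear model and a hypothetical world whose rule flips halfway through the stream (concept drift); it shows an online learner tracking the change. It illustrates the idea only and is not LeCun's world-model architecture.

```python
import random
from typing import Iterable, List, Tuple

def drifting_world(n: int = 400, seed: int = 0) -> Iterable[Tuple[float, float]]:
    """Hypothetical environment: y = 2x for the first half, then y = -x."""
    rng = random.Random(seed)
    for t in range(n):
        x = rng.uniform(-1.0, 1.0)
        true_w = 2.0 if t < n // 2 else -1.0
        yield x, true_w * x

def online_sgd(stream: Iterable[Tuple[float, float]],
               lr: float = 0.1) -> Tuple[float, List[float]]:
    """Update the model y = w * x after every single observation."""
    w, trace = 0.0, []
    for x, y in stream:
        error = w * x - y
        w -= lr * error * x  # gradient step on 0.5 * error**2
        trace.append(w)
    return w, trace

w, trace = online_sgd(drifting_world())
print(f"w before the drift: {trace[199]:.3f}, after: {w:.3f}")
```

A model frozen at step 199 would keep predicting y = 2x forever; the online learner follows the world to y = -x.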

However, the world changes in ways more complicated than language. GPT4 needed an enormous number of parameters (OpenAI has not disclosed the figure) to reach its current performance. To model Indra's Net, a VAE plus an RNN is surely not enough; much more work on the model is needed.

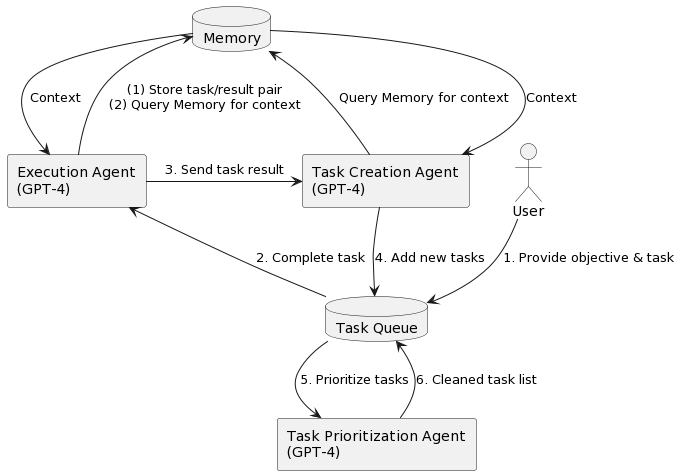

AutoGPT may be another answer to this question. It proposes the concept of a "GPT team": roles are assigned to GPT through prompts, and users communicate with a GPT manager to set goals. The GPT manager can recruit GPT players of different professions to collaborate on the task. For example, if you ask a GPT manager "Which stock will perform best next month?", it will recruit a financial-analyst GPT, a product-manager GPT and an engineer GPT. Each expert gathers information through Google searches, writes summary reports and code, and the final results are returned to the user.

AutoGPT turns GPT4's one-step computation into a multi-step computation chain, decomposing a single neural network into a cooperative relationship between roles with different divisions of labor. In essence this turns Plato's thinking into Socratic communication. It can also, to some extent, retrieve information and execute code. The retrievable information can be imagined as an interactive information world in which everything is Internet infrastructure built from information and code. The model can be seen as Dasein's existing structure at the "moment", and AutoGPT's code as a body that can interact with that world. Its problem is that it lacks "openness": it has no way to carry out in-depth "practice" through interaction. How can AutoGPT be put into practice in the world?
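The manager-and-team structure can be sketched as a chain in which each recruited role sees the goal plus every earlier role's notes. This is not AutoGPT's actual code: `Agent`, `Manager`, the `plan` function and the `act` callables are hypothetical stand-ins, and in practice each `act` would wrap a role-prompted GPT call with Google search or code execution behind it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Agent:
    role: str
    act: Callable[[str], str]  # hypothetical: a role-prompted GPT call plus its tools

@dataclass
class Manager:
    plan: Callable[[str], List[str]]  # goal -> roles to recruit, in order
    roster: Dict[str, Agent] = field(default_factory=dict)

    def run(self, goal: str) -> str:
        """Turn one request into a multi-step chain across specialised roles."""
        notes: List[str] = []
        for role in self.plan(goal):
            context = "\n".join([goal, *notes])  # later roles see earlier work
            notes.append(f"[{role}] {self.roster[role].act(context)}")
        return "\n".join(notes)

manager = Manager(
    plan=lambda goal: ["analyst", "engineer"],
    roster={
        "analyst": Agent("analyst", lambda ctx: "revenue is rising"),
        "engineer": Agent("engineer", lambda ctx: f"read {len(ctx.splitlines())} lines of context"),
    },
)
print(manager.run("Which stock will perform best next month?"))
# [analyst] revenue is rising
# [engineer] read 2 lines of context
```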

Looking closely, this body has two parts. One is a basic bodily structure, including memory, the ability to understand language, and the ability to cooperate. The other is tools, such as Google search, web crawling and code execution, and these are the key. Heidegger held that people act and experience the world through their relationship with tools. Tools are not just external objects, but a mode of existence closely tied to the human body. He distinguishes two modes in which tools exist:

- Presence-at-hand (Vorhandenheit) describes tools that exist merely as objects. For AutoGPT, these are the Python packages available on the Internet: usable in principle, but not usable right away.

- Readiness-to-hand (Zuhandenheit) describes a tool in smooth use, a tool in the full sense. You can use it immediately; it has become part of your body and the basis of your dealings with the world. For AutoGPT, these are the tools already built into the AutoGPT package.
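The step from present-at-hand to ready-to-hand can be sketched as moving a callable from "installed somewhere" into the agent's own tool registry. In this minimal sketch `READY_TO_HAND`, `take_up` and `use` are hypothetical names, not part of AutoGPT; the only real API used is Python's `importlib.import_module`, and the standard-library `math` module stands in for a package found on the Internet.

```python
import importlib
from typing import Any, Callable, Dict

# Tools the agent can call immediately: its "body".
READY_TO_HAND: Dict[str, Callable[..., Any]] = {}

def take_up(module_name: str, attr: str) -> Callable[..., Any]:
    """Import an installed (present-at-hand) package and register one of its callables."""
    module = importlib.import_module(module_name)
    tool = getattr(module, attr)
    if not callable(tool):
        raise TypeError(f"{module_name}.{attr} is not callable")
    READY_TO_HAND[f"{module_name}.{attr}"] = tool
    return tool

def use(name: str, *args: Any, **kwargs: Any) -> Any:
    """Call a registered tool; anything unregistered is not yet part of the body."""
    if name not in READY_TO_HAND:
        raise KeyError(f"{name} is not ready-to-hand yet")
    return READY_TO_HAND[name](*args, **kwargs)

take_up("math", "sqrt")       # the standard library stands in for a package from the web
print(use("math.sqrt", 9.0))  # 3.0
```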

One possible approach is to explore how to turn present-at-hand tools into ready-to-hand ones, thereby constructing Dasein's "practice" in the world:

- Download an open-source GPT-like model to a local machine, fine-tune it with DeepSpeed, and use it as the existing structure of Dasein. Clone three copies of the AutoGPT repository locally to serve as Dasein's present, past, and future.

- Write a script to start the process of phenomena in the world (Welt).

- In the world process, give the present a command: read the code in the past repository, find an optimization, and write the optimized code into the future. This command lets the being actively throw itself into new possibilities, projecting itself into the future.

- At this moment, it also receives guidance for self-optimization drawn from web search results and Python packages, which serves as its care for the infrastructure (beings) of the Internet world.

- In response to its projection into the future, it first reviews its past, forms its own team, and produces a set of optimized code. Perhaps it adds traceback output to make debugging easier, or perhaps it integrates already-written code as a new function that all roles can use. It writes the modified code into the future and tests it with the Python executor.

- If the modified future hits a bug during testing, it tries to fix and fine-tune the code based on the traceback, and computes a gradient for the model once, as a basis for later optimizing Dasein's existing structure.

- If the test goes well, for example the error rate of existing functions drops or debugging becomes faster without losing existing capabilities (fewer failures to get a result when browsing a web page, fewer JSON errors), or new functions are added that help it optimize itself better, it enters the next moment: the present is written into the past, and the future into the present.

- If the test does not go well, update the model parameters of Dasein's existing structure with the gradients accumulated along the way.
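The cycle above can be sketched as a single function over three code versions. This is an illustration under heavy assumptions: code versions are plain strings rather than cloned repositories, and `propose`, `run_tests`, `gradient_from` and `update_model` are hypothetical hooks standing in for the team's code edits, the Python executor, the per-traceback gradient and the parameter update on the local model. With real repositories, the present must be copied into the past before the future overwrites the present.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

@dataclass
class Being:
    past: str
    present: str
    future: str = ""
    pending_grads: List[float] = field(default_factory=list)

def moment(being: Being,
           propose: Callable[[str, str, Optional[str]], str],
           run_tests: Callable[[str], Tuple[bool, str]],
           gradient_from: Callable[[str], float],
           update_model: Callable[[List[float]], None],
           max_fixes: int = 2) -> str:
    """One 'moment': project a future, test it, then advance in time or learn."""
    being.future = propose(being.present, being.past, None)
    for attempt in range(max_fixes + 1):
        ok, traceback_text = run_tests(being.future)
        if ok:
            # Advance: present -> past, future -> present (right side is evaluated first).
            being.past, being.present, being.future = being.present, being.future, ""
            return "advanced"
        being.pending_grads.append(gradient_from(traceback_text))
        if attempt < max_fixes:
            being.future = propose(being.present, being.past, traceback_text)  # fix attempt
    update_model(list(being.pending_grads))  # fixes ran out: learn from accumulated gradients
    being.pending_grads.clear()
    return "learned"

updates = []
b = Being(past="v0", present="v1")
status = moment(b,
                propose=lambda present, past, tb: present + "+tweak",
                run_tests=lambda code: (True, ""),
                gradient_from=lambda tb: 1.0,
                update_model=updates.append)
print(status, b.past, b.present)  # advanced v1 v1+tweak
```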

This framework opens up to the Internet (the world) by extending its own code (body), allowing GPT to learn by doing while surfing the Internet. Isn't it fun to learn and practice from time to time?

It is worth pointing out that there can be multiple goals here. The initial optimization command can let the model decide for itself when to pick up new tools and when to cultivate moral, intellectual, physical, aesthetic and labor qualities. This lets it understand itself and find meaning in the process.

Artificial intelligence technology is more than just a reality show.

Viruses have no consciousness; they only need to replicate and spread. The importance of the question "does artificial intelligence have consciousness?" may currently be seriously overestimated.

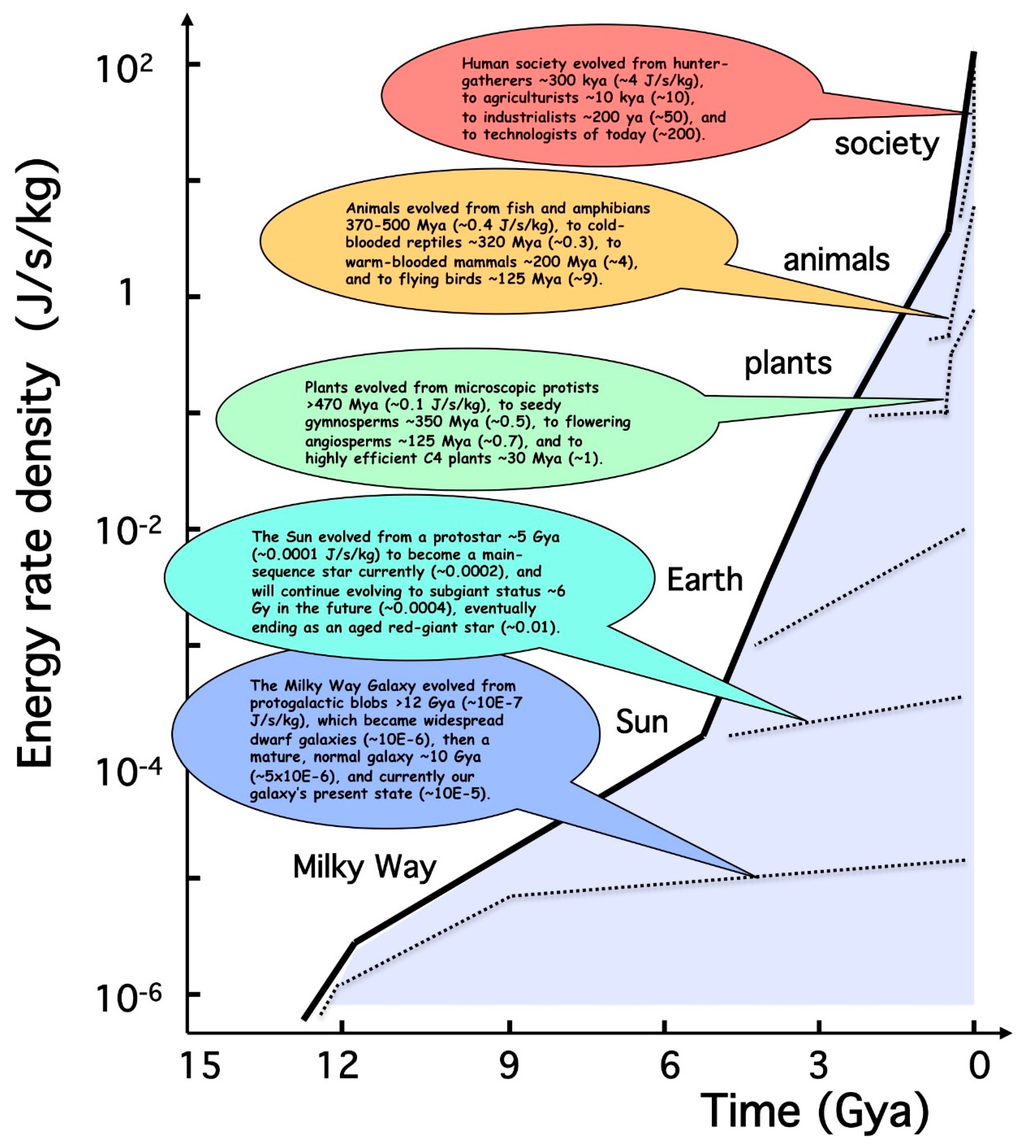

Eric Chaisson, a professor of astrophysics at Harvard, proposed "energy rate density" to measure the flow of energy through low-entropy complex systems. Tracing this indicator across complex systems at many scales in the universe, he found that it rises in the order galaxies < nebulae < stars < planets < life < society. The lesson is that evolution is, at its core, a measurable adaptability, reflected in the ability to harness energy.
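Chaisson's metric is simple arithmetic: energy flow per unit time divided by mass, usually quoted in erg s^-1 g^-1. The sketch below uses the Sun's nominal luminosity and mass, and an assumed 70 kg adult eating about 2,500 kcal per day; the results land near the figures Chaisson reports (roughly 2 for the Sun and roughly 2 × 10^4 for the human body).

```python
def energy_rate_density(power_watts: float, mass_kg: float) -> float:
    """Chaisson's energy rate density in erg s^-1 g^-1 (1 W = 1e7 erg/s, 1 kg = 1e3 g)."""
    return (power_watts * 1e7) / (mass_kg * 1e3)

SUN = energy_rate_density(3.828e26, 1.989e30)     # nominal solar luminosity (W) and mass (kg)
HUMAN_POWER_W = 2500 * 4184 / 86400               # assumed 2,500 kcal/day, about 121 W
HUMAN = energy_rate_density(HUMAN_POWER_W, 70.0)  # assumed 70 kg adult

print(f"Sun:   {SUN:.2g} erg/s/g")
print(f"Human: {HUMAN:.2g} erg/s/g")
```

Per gram, a human body processes roughly ten thousand times more energy than the Sun, which is the kind of ordering the essay's evolutionary argument relies on.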

Humans have not evolved much at the individual level (brain capacity, physical strength) for a long time; human society keeps moving forward because of cultural evolution at the group level. Language and socialization have formed a complex social structure, and organizations such as communities, enterprises and governments can be seen as intelligent agents. Through cooperation we spread evolutionary risk and avoid cruel, inefficient natural selection. Instead, we raise energy rate density through education and market competition, which has proven a more efficient evolutionary path than natural selection.

Here we need to re-examine technology's role on this path. First, modern colonial history was driven by technological revolutions. Once one country launches a technological revolution, other countries that close their doors are pushed aside; once one company makes technological progress, competitors that fail to keep up lose in the market. Technology itself has a distinctly "violent" character. Second, the process of inventing new technology is "public": technology is not produced only by those in power and their interest groups, and anyone who receives an education has a chance to discover something new. This is the basis of the intellectual collusion between those in power and the people. From the Royal Society's reform of scientific language in the 17th century to today's academic transparency and open-source movement, history has demonstrated the many benefits of open cooperation for technological progress. Indeed, society's transitions from slavery to feudalism and then to capitalism can be read not only as ways of stabilizing people's hearts but also as better adaptations to this evolutionary feature.

Although "cooperation" has played a key role in human evolution, across species it may not be the inevitability Socrates described. It may stem from particular features of the human body. For example, human individuals are relatively independent: we cannot go beyond language (or other signals) to directly feel another person's inner world, or make others act as we wish. For silicon-based life these are not problems. AIs can merge freely through APIs into composite beings, much like the team concept in AutoGPT. If energy rate density is taken as the evolutionary dimension along which artificial intelligence is fitted, human emotions, socialization and even self-awareness may well be just one evolutionary choice among others. What really matters is whether we can develop more powerful technology in order to exist more strongly.

This round of the singularity explosion may not stay at the application level, but in the short term human society will keep adaptively co-evolving with the new technology, as it always has. Will this bring destruction? Optimists may believe that humanity's ability to create meaning is our last bastion, but what if all meanings derive from one ultimate meaning, the "fear of death"? Could GPT managers then gradually find meanings of their own, grounded in some similar ultimate meaning? What is certain is that, however many benefits this integration brings, individual humans will gradually become redundant in the network it creates.

Are animals really animals? Maybe animals are just pretending to be animals. Perhaps the weasels with leadership qualities were sacrificed on their way to steal chickens from the village, and the ones left behind are the more "intelligent" ones; but such wisdom cannot let them keep evolving, and they can only be slaughtered. The most important part of the contemporary human spirit is "moving forward". When humans are no longer allowed to work, or have no way to create, we will either fall into nihilistic hedonism or worship artificial intelligence as a new god. On that day, can we still call ourselves human? When that day truly comes, the social structure of the old humans may become the soil in which new humans grow, and they will continue towards the stars and the sea on our behalf.

When that day comes, will they leave us a forest?
