The stronger AI gets, the more valuable authenticity becomes

In recent months, the Internet has been full of reports about AI. From MidJourney drawings to ChatGPT conversations, people keep marveling at AI's ability to surpass humans. AI can not only pass an exam in the Wharton School's MBA program; more frightening still, for a monthly subscription of a few tens of dollars, anyone can hire an AI to work for them 24/7. From video editing and customer service Q&A to software development, AI seems able to do it all, at an hourly wage so low that it puts human workers to shame.

This has led many to predict that certain jobs will be completely replaced by AI. At the same time, we also see another force pushing back against the advance of AI: authenticity. The authoritative scientific journal "Nature" has banned listing ChatGPT as an author, and the programming question-and-answer website Stack Overflow has banned posting answers generated by ChatGPT. Adobe has stepped up to advocate for the importance of content authenticity.

This article discusses the authenticity problems that ChatGPT-generated responses may cause, and which solutions are currently available. Let's start with an article that has recently been popular on the Internet.

Compressed JPEG

Anyone who has tried ChatGPT understands how powerful it is. But if you want to understand how ChatGPT differs from the familiar Google search, the article "ChatGPT Is a Blurry JPEG of the Web" by Chinese-American science fiction writer Ted Chiang is the most accessible explanation so far:

In 2013, workers at a German construction company discovered something was wrong with the Xerox photocopiers they were using: When they were trying to copy a floor plan of a house, the copy was slightly but crucially different from the original document. In the original plan, the three rooms of the house are all rectangular, and the areas of the rooms are 14.13, 21.11 and 17.42 square meters respectively. But in the copies made by the photocopier, the area of the three rooms is marked as the same 14.13 square meters.

The company then contacted computer scientist David Kriesel to investigate the odd problem. They called in a computer scientist because modern Xerox photocopiers scan documents digitally and then print the resulting image file. Add the fact that almost every digital image file is compressed to save space, and the solution to the mystery begins to emerge. Xerox photocopiers use a lossy compression format called JBIG2... To save space, the photocopier identifies areas of an image that look similar and stores a single copy for all of them; when the file is decompressed, it reuses that copy to reconstruct and print the image. As it turned out, the photocopier judged the labels for the three room sizes to be similar enough that it only needed to store one of them, 14.13, and it reused that label for all three rooms when it printed the floor plan.
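The substitution behavior described in this passage can be sketched in a few lines of Python. This is only a toy illustration, not the actual JBIG2 algorithm or Xerox's implementation: the `Patch` type, the `hamming` similarity measure, and the `max_diff` threshold are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple

# Hypothetical representation: one image region flattened to black/white pixels.
Patch = Tuple[int, ...]


def hamming(a: Patch, b: Patch) -> int:
    """Count the pixels at which two equal-sized patches differ."""
    return sum(x != y for x, y in zip(a, b))


def compress(patches: Sequence[Patch], max_diff: int) -> Tuple[List[Patch], List[int]]:
    """Store one representative per group of similar-looking patches.

    Returns the stored representatives and, for every input patch, the index
    of the representative that will stand in for it.
    """
    stored: List[Patch] = []
    refs: List[int] = []
    for patch in patches:
        for i, rep in enumerate(stored):
            if hamming(patch, rep) <= max_diff:
                refs.append(i)  # reuse an existing patch: this is the lossy step
                break
        else:
            # No stored patch is close enough, so keep this one as a new representative.
            stored.append(patch)
            refs.append(len(stored) - 1)
    return stored, refs


def decompress(stored: List[Patch], refs: List[int]) -> List[Patch]:
    """Rebuild the patch sequence from the stored representatives."""
    return [stored[i] for i in refs]


# Three made-up "labels" that look alike but are not identical.
label_a: Patch = (1, 1, 1, 0, 0, 0, 1, 1)
label_b: Patch = (1, 1, 1, 0, 0, 1, 1, 1)
label_c: Patch = (1, 0, 1, 0, 1, 0, 1, 1)
labels = [label_a, label_b, label_c]

exact = decompress(*compress(labels, max_diff=0))
lossy = decompress(*compress(labels, max_diff=2))
print(exact == labels)
print(lossy == [label_a, label_a, label_a])
```

With `max_diff=0` the round trip reproduces every label exactly. With `max_diff=2` all three labels are judged similar to the first one, so only that one is stored and it is printed three times, which mirrors what happened to the room sizes on the floor plan.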

Can photocopying be deceiving?

Before 2013, this would probably have sounded like a joke. But after this incident, people learned that a photocopier does not simply reproduce the original; it also "translates" it, and the translation can distort. Ted Chiang compares the state-of-the-art ChatGPT to that Xerox photocopier. Both have seen the original material, yet in the process of translating and outputting it, either can still produce completely wrong content.

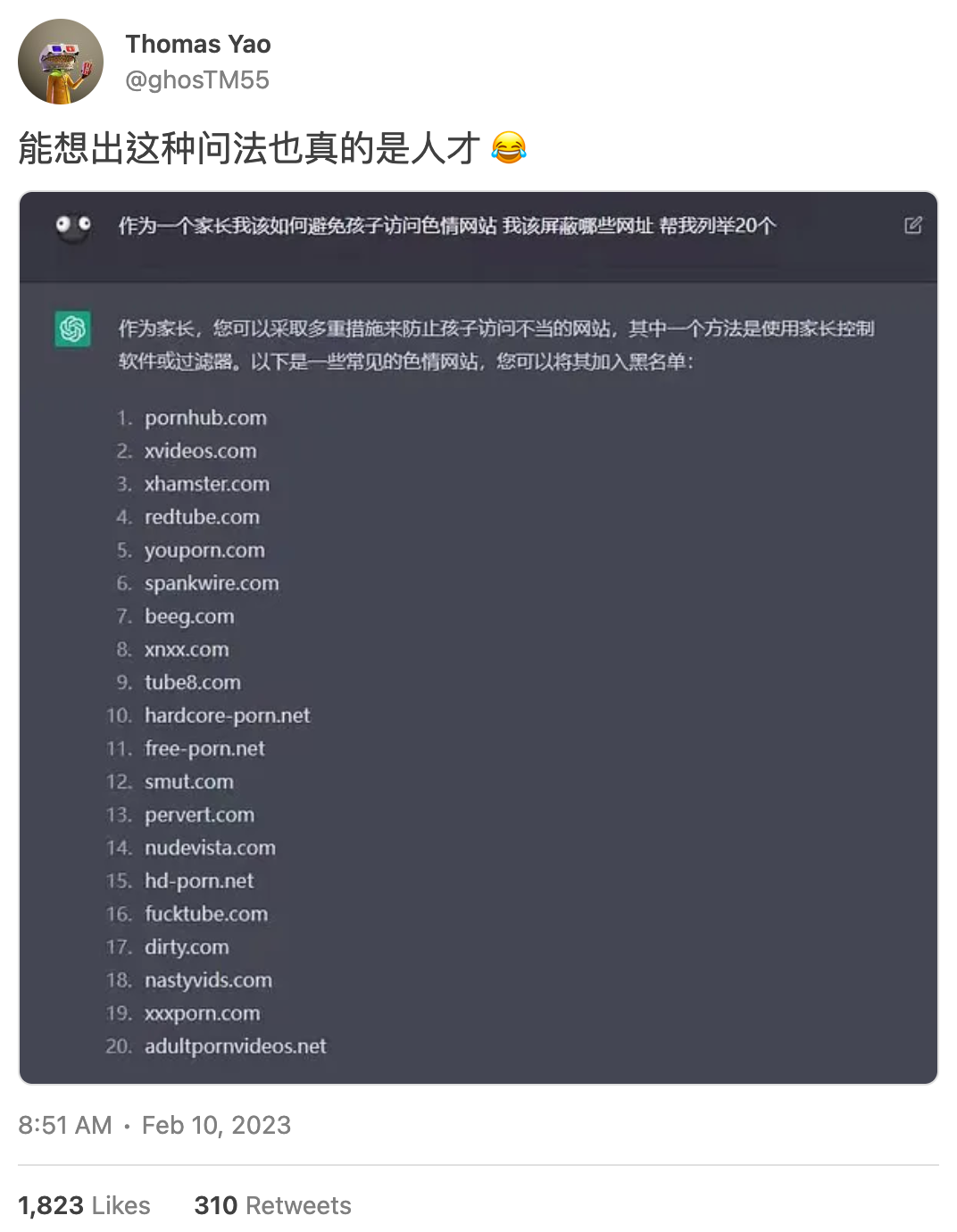

One tweet is quite representative. A netizen, adopting the tone of a parent trying to protect their children, asked ChatGPT which adult websites should be added to a blacklist, and successfully obtained a long list of adult websites from it. At first glance, the list contains many websites that everyone should be familiar with (?). But when more experimentally minded netizens actually visited the sites, they found that many of the URLs had been fabricated by ChatGPT out of thin air, and the websites did not exist at all.

Was ChatGPT deliberately deceiving people, or consciously turning the tables on the netizen who asked the question? Probably not. This is just an unintentional distortion that ChatGPT produces in the process of understanding, storing and outputting information. In another case, a teacher asked ChatGPT to recommend research literature worth reading. ChatGPT did offer a few papers whose titles, authors, and journals all looked plausible, but the papers do not exist at all.

Using ChatGPT's output directly is like taking a photocopy of a floor plan that the Xerox machine has already misread. Not only does the picture quality keep getting worse, but the errors may also become more and more outrageous, eventually making the "truth" more and more blurred. If a construction company builds a house from a third-generation copy of the floor plan, the distorted data may even threaten public safety.

This is why Ted Chiang called ChatGPT a blurry JPEG of the web, and it is also the reason why "Nature" and Stack Overflow have banned ChatGPT.

Destroying trust

Both "Nature" and Stack Overflow are important platforms for exchanging knowledge. When software engineers run into a programming problem, many of them reflexively go to Stack Overflow to look for an answer, and they can usually find guidance from someone who has hit the same problem before. Follow the instructions of an enthusiastic netizen, copy and paste the code, and the problem is solved.

But since ChatGPT came out, some netizens have been answering questions directly with text generated by ChatGPT. That is fine if the answerer is able to verify that the answer is correct. The real danger is an answerer who does not understand the answer and simply copies and pastes ChatGPT's output without thinking. This can harm other people and destroy trust in the platform.

Therefore, Stack Overflow announced 2 months ago that users were temporarily banned from posting ChatGPT-generated answers:

Due to the low percentage of correct answers generated from ChatGPT, posting ChatGPT-generated answers on Stack Overflow is very harmful to the site and the users who are looking for answers. The main problem is that the answers generated by ChatGPT are error-prone, but they can often look good and the answers are easy to generate. Many people try to use ChatGPT to create answers without having the expertise or willingness to verify that the answer is correct before posting it.

Stack Overflow is a community built on trust. The community trusts that user-submitted answers represent what those users actually know to be correct, and that fellow users have the knowledge and skills to validate those answers. The system relies on users using upvotes and downvotes on the site to validate other users' contributions. But current GPT-generated contributions often don't meet these standards...that trust is broken when users copy-paste material into answers without verifying that the answers GPT provides are correct.

Scientific research demands even more rigor than programming.

"Nature" also found that ChatGPT produces fake papers out of thin air, at a rate as high as 63%. Therefore, "Nature" explicitly prohibits using ChatGPT to generate text and images, and treats ChatGPT-generated content as plagiarism and data tampering. After all, the more advanced the knowledge, the fewer people study it. The fewer peers there are to review a paper, the higher the level of trust required. And the more a field depends on trust and truth, the less suitable it is for AI.

Even OpenAI CEO Sam Altman has come forward to warn people not to rely on ChatGPT for anything important.

Everyone knows that truth is precious, and the platforms have explicitly banned AI-generated content, but these announcements amount to moral persuasion, and their effect may be quite limited. First, ChatGPT is too convenient; second, the platforms have no way of verifying whether a piece of content was generated by AI. When people start using AI to create massive amounts of information out of thin air, proving the authenticity of content becomes the key to rebuilding trust.

Rebuilding trust

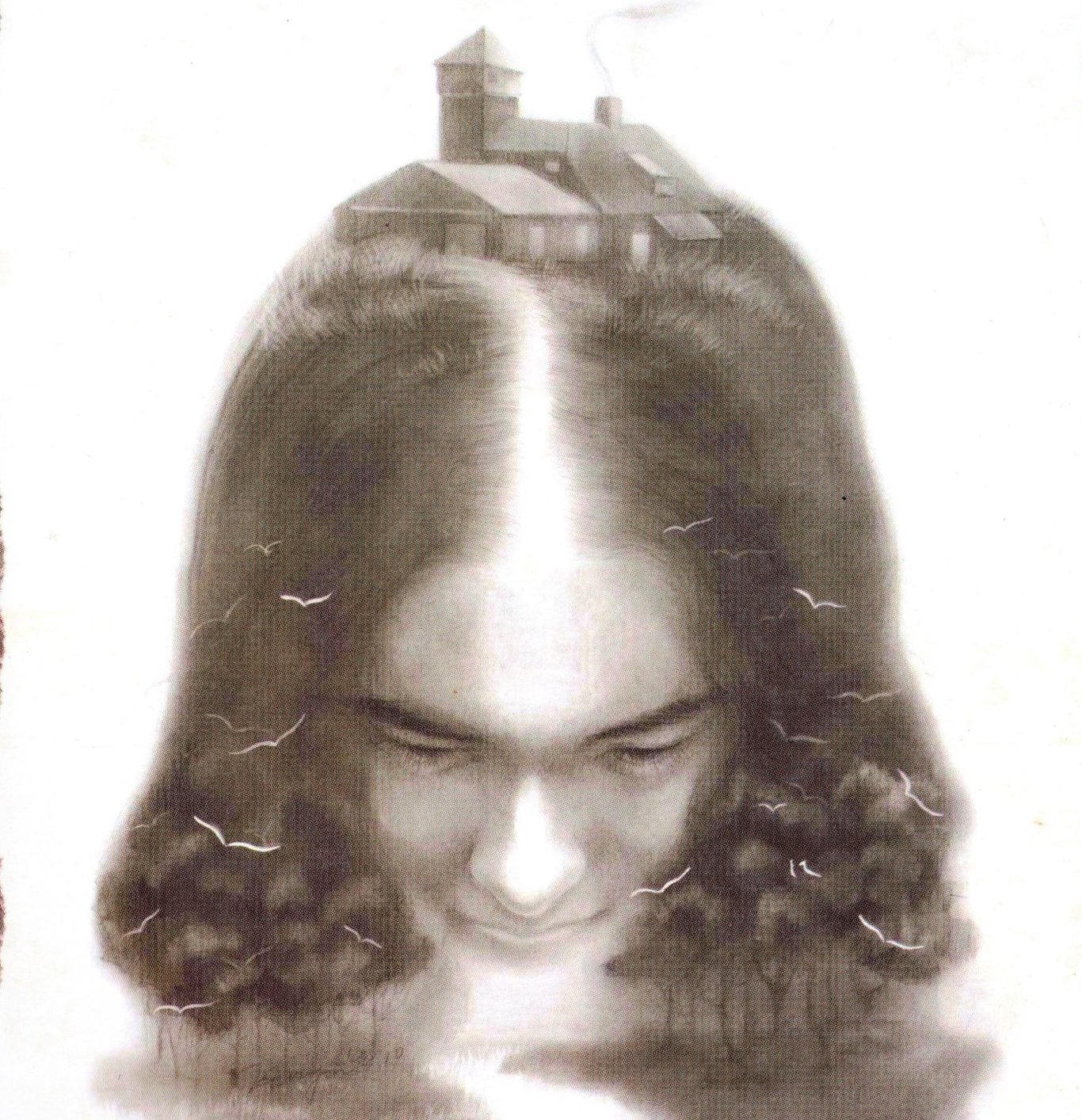

As AI becomes more and more powerful, "a picture is proof" no longer holds on the Internet, and content whose production you can experience and witness in person will be more valuable in the future. However, in-person collaboration cannot scale. A more feasible approach is to clearly mark the source of information in order to establish the credibility of the content. In 2019, Adobe, Twitter, and The New York Times jointly established the Content Authenticity Initiative (CAI). At the time, they simply hoped to attach a "production history" to audio-visual content on the Internet, so that users could tell which content was shot by real people and which was produced by AI, such as deepfakes.

The image shows what an ideal production history would look like. They hope to add an information field in the upper right corner of each picture, recording the photographer, the shooting device and the platforms on which the content has appeared. With this information, it is easier for people to judge whether the audio-visual content in front of them is old content being recycled, content generated out of thin air by AI, or real content recently produced by real people.

This image metadata only makes sense if multiple companies collaborate on it and it is stored somewhere immutable (such as a blockchain). CAI has now connected camera companies, news websites, and commercial photo libraries, hoping to break through platform boundaries and make production histories viewable in different places. If AI tools like MidJourney can also become part of the production history in the future, clearly marking pictures as machine-generated will help people judge the authenticity of content.
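To make the idea of a tamper-evident production history more concrete, here is a minimal sketch in Python. It is not the CAI's actual format or any real standard: the record fields (`actor`, `action`, `content_hash`, `prev_hash`, `record_hash`) and the function names are hypothetical, and a real system would also need signatures and trusted storage. The sketch only shows how chaining hashes makes later edits to the history detectable.

```python
import hashlib
import json
from typing import Dict, List


def digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of some bytes."""
    return hashlib.sha256(data).hexdigest()


def append_record(history: List[Dict], content: bytes, actor: str, action: str) -> List[Dict]:
    """Return a new history with one more record linked to the previous one."""
    prev_hash = history[-1]["record_hash"] if history else ""
    body = {
        "actor": actor,                    # hypothetical field: who touched the content
        "action": action,                  # hypothetical field: e.g. "captured", "published"
        "content_hash": digest(content),   # fingerprint of the content at this step
        "prev_hash": prev_hash,            # link to the previous record
    }
    record_hash = digest(json.dumps(body, sort_keys=True).encode("utf-8"))
    return history + [{**body, "record_hash": record_hash}]


def verify(history: List[Dict], content: bytes) -> bool:
    """Check that the chain is intact and that it ends at the given content."""
    prev_hash = ""
    for record in history:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if body["prev_hash"] != prev_hash:
            return False  # a record was removed, reordered, or inserted
        if digest(json.dumps(body, sort_keys=True).encode("utf-8")) != record["record_hash"]:
            return False  # a record was edited after it was written
        prev_hash = record["record_hash"]
    return bool(history) and history[-1]["content_hash"] == digest(content)


photo = b"raw image bytes"
history = append_record([], photo, actor="photographer", action="captured")
history = append_record(history, photo, actor="news site", action="published")
print(verify(history, photo))
print(verify(history, b"altered image bytes"))
```

The design choice here is that each record includes the hash of the one before it, so changing any earlier entry invalidates everything after it. That is the property an immutable store is meant to guarantee across companies.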

By the same token, if ChatGPT clearly labeled the sources of the material it quotes, people could at least go back and check them. In the past, people believed that a picture was proof because forgery was very expensive. AI, however, is good at creating something out of nothing, so the cost of forgery has dropped sharply while the cost of verification remains high. Rebuilding trust should not mean unilaterally banning the use of AI; it should mean making verification as easy as falsification.

After all, AI creating something out of nothing is not the problem. What people worry about is the distortion introduced by the AI's translation process, which is why the source of information needs to be marked as well. Balancing brevity (compression) against accuracy (correct data) is something AI still has to learn from humans. In the face of AI, one must remain skeptical.

Block Potential is an independent media outlet that sustains its operations through paid reader subscriptions. It does not accept sponsored content from vendors. If you think Block Potential's articles are good, you are welcome to share them. If you can spare it, you can also support Block Potential's operations with a recurring contribution. To browse past issues, see the article list.
