Hebei --> Tianjin --> Xi'an --> Hong Kong --> UK. Teaches at a university, writes occasionally, likes to think, shares sincerely.

Machine awakening? The current dangers of artificial intelligence

Let's talk about artificial intelligence today.

Artificial intelligence, so popular in recent years, is actually not a new concept. In 2016, Google's AlphaGo defeated Lee Sedol at Go, and artificial intelligence once again drew attention from all walks of life. Google DeepMind's breakthrough has also spurred the application of AI technology across industries.

Face recognition, speech recognition, text recognition, machine translation, autonomous driving, recommender systems, and other AI-based applications are changing the industries they touch and changing our lives. At the same time, people are once again concerned about the potential dangers of artificial intelligence.

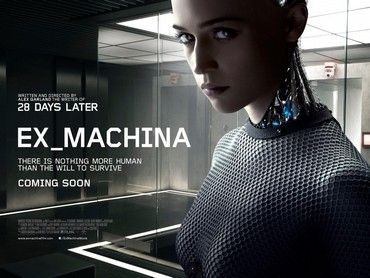

When we talk about the dangers of artificial intelligence and machine learning, we often think of machines awakening: machines that originally served humans begin to resist and even turn against them. At the current level of development, however, the awakening of machines is clearly not what human beings should be worried about.

But the widespread adoption of machine learning does pose a real threat to humanity. The two main applications of artificial intelligence in everyday life today are image recognition and recommender systems. Of these, image recognition, and face recognition in particular, is facing strict regulation. People worry about information leaks on the one hand and misuse of personal information on the other. As oversight tightens, the invasion of personal privacy by face recognition has not yet become a major threat.

Recommender systems are another important application of machine learning. Almost every web service we use today relies on an AI-based recommender system, and we are often surprised by how accurate its recommendations are. Open a shopping app, and the thing you had in mind may already be in the recommendation box. Finish one video, and a stream of others pops up; before you know it, more than half a day has passed. The AI observes and analyzes user behavior in order to recommend the most suitable products and services, which seems completely harmless. It certainly will not face much regulation, and that is exactly why such AI systems pose a real threat to the individual.

To a certain extent, artificial intelligence is reshaping our consciousness and deepening group biases, especially in spaces where freedom of speech is limited. Human decision-making is itself a kind of algorithm, whose key inputs are personal experience and the experience of others acquired through what we see and hear. People now spend more and more time watching videos and reading web pages, and many would rather stay home watching videos than socialize; time spent in virtual space can even exceed time spent interacting with the real world. In this situation, audiovisual content gradually shapes our cognition just as the real world does, and so it also affects our decisions to some extent.

The websites that host this content introduce artificial intelligence to guide users toward content and to take up as much of their time as possible. For a website, the purpose of introducing AI is not to make information more neutral but to increase the click-through rate of its pages and videos. News sites built on AI recommendations are one example. The AI quantifies click-through rate, reading time, likes, comments, and shares, tags users accordingly, and pushes content matched on category, topic, keyword, source, interest, age, location, and so on. If users rely only on such websites for information, the AI will gradually push only news that conforms to their values. In this process the user trains the AI through actions such as clicking, while the AI in turn reinforces the user's way of thinking. A prejudiced mind that finds like-minded people grows more prejudiced. Because the information a user receives becomes ever more uniform, this process accelerates greatly, and in this way the AI reinforces, and even trains, the individual's consciousness. Once this thought pattern begins to influence the user's decisions, the user can no longer tell whether a decision is truly their own or the result of having been domesticated by artificial intelligence.

For AI-driven information push, reinforcing user bias is obviously the most profitable strategy. In the past, people got information from diverse sources, and the clash of differing information helped correct their prejudices. Nowadays, many people get their information from a single website, such as Toutiao. Information that lacks neutrality, and even false information, spreads more easily because it conforms to the prejudices of most people, and so it is more likely to affect how people think. To a certain extent, then, artificial intelligence is changing people's thinking patterns, solidifying them, and even strengthening group bias.

This process of domestication is gentle, even pleasant. But such a rigid way of thinking is very harmful to the individual. The content the AI pushes leads users to believe that most people in the world think as they do, so they gain a sense of group recognition in the virtual world. But reality is otherwise. Sooner or later the gap between belief and reality will make itself felt, leading to errors in individual judgment and decision-making.
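The two-way loop described above, in which the user trains the recommender by clicking and the recommender in turn narrows what the user sees, can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not how any real site works: the function names (`simulate`, `entropy`), the parameters (`learning_rate`, `drift`), the topic names, and the update rules are all hypothetical. In particular, the rule that a user's interest rises when a topic gets more than an even share of the feed is an assumption made purely for illustration.

```python
import math


def entropy(shares):
    """Shannon entropy (in bits) of a feed; lower means a less diverse feed."""
    return -sum(p * math.log2(p) for p in shares.values() if p > 0)


def simulate(interest, rounds=30, learning_rate=0.5, drift=0.05):
    """Toy feedback loop between a recommender and a single user.

    interest: topic -> assumed probability that the user clicks an item on it.
    Returns (history of feed shares per round, final interest).
    """
    topics = list(interest)
    interest = dict(interest)
    even_share = 1 / len(topics)
    feed = {t: even_share for t in topics}  # start from a neutral feed
    history = [dict(feed)]
    for _ in range(rounds):
        # The user "trains" the recommender: topics that get clicked more
        # receive a larger share of the next feed.
        boosted = {t: feed[t] * (1 + learning_rate * interest[t]) for t in topics}
        total = sum(boosted.values())
        feed = {t: boosted[t] / total for t in topics}
        # Assumption: the feed also shapes the user. Seeing a topic more than
        # an even share nudges interest up; seeing it less nudges it down.
        interest = {
            t: min(1.0, max(0.0, interest[t] + drift * (feed[t] - even_share)))
            for t in topics
        }
        history.append(dict(feed))
    return history, interest


history, final_interest = simulate({"politics": 0.4, "sports": 0.3, "science": 0.3})
print("politics share, first vs last round:", history[0]["politics"], history[-1]["politics"])
print("feed entropy, first vs last round:", entropy(history[0]), entropy(history[-1]))
```

In this toy model, a slight initial lean toward one topic is enough for that topic's share of the feed to grow every round while the feed's entropy, used here as a rough measure of diversity, falls.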

Another question: can artificial intelligence technology fix these defects and avoid labeling users? The answer is no. A recommender system works precisely by tagging users, and compared with the diversity of people, labels are always limited. Google, for example, at first used artificial intelligence to classify emails. But tagging customers and pushing content matched to those tags is undoubtedly the most effective approach for marketing, which means this technology will only be adopted more widely.

If you find that your views seem to be getting more and more correct, and that you rarely see dissenting voices online, in most cases it is not that you have reached the state of "following your heart's desire without overstepping the rules". It is that the website has filtered out, on your behalf, the voices you do not like to hear.

How can individuals combat this domestication of artificial intelligence?

1. Develop diverse channels for getting information, and abandon news-push media that exist to drive clicks.

2. If such media are unavoidable, try to log in anonymously so that the AI cannot collect too much information and label you.

3. Keep learning and creating content, and use your own thoughts and knowledge to build diversity of thinking.

But sadly, this process of domestication cannot be avoided, because entire societies are being domesticated. After all, we cannot escape the group effect. Freedom of speech, and the ability for ideas to collide and be debated openly on public platforms, is the key to combating this kind of domestication.
