Connect the dots.

Defining the dimensions of the "space" of computing

In The Sciences of the Artificial, the psychologist and political scientist Herbert A. Simon describes designing as searching "the space of alternatives." Whatever one makes of his other views on design, Simon's metaphor of a search space may inform how we approach designing digital products and the systems that support them. Simon might lead us to ask: What is the "space" of computing, and what dimensions define it?

Traditionally, we have thought of computing not as a set of choices within a space but as improvement over time. Moore's Law. Faster. Cheaper. More processors. More memory. More megapixels. Higher resolution. More sensors. More bandwidth. More devices. More applications. More users. More data. More "engagement." More everything.

Over time, more and more computing has come in less and less space. The first computers were so large that they took up entire rooms. Over the past 50 years, computers have shrunk enough to fit on a desktop and then in a shirt pocket. Today, computers on chips are embedded in the "smart" devices around us. And now they are starting to blend into our environment, becoming invisible and ubiquitous.

Early computers were rare and expensive. In the 1960s, the University of Illinois released a film boasting of an astonishing 30 digital computers! The slogan of the early personal computer era was "one person, one computer." Today, it is commonplace for one person to own several computing devices. In fact, your car probably contains a dozen or more microprocessors.

Clearly, what we think of as "computing" has changed and will continue to change. No wonder most of our models of computing are incremental: timelines.

Historical models of computing

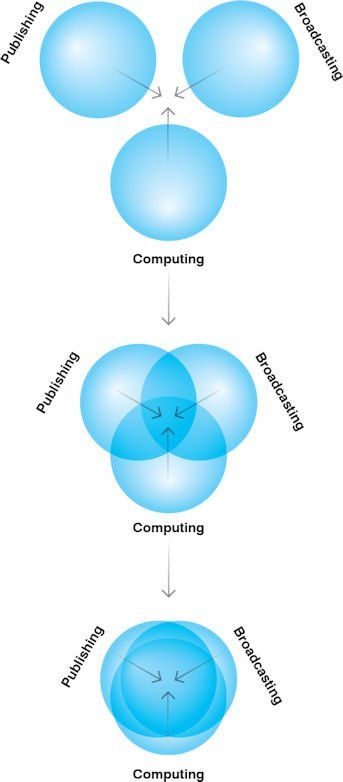

In 1980, Nicholas Negroponte, co-founder of the MIT Media Lab, described the future of computing as "convergence." He depicted publishing, broadcasting, and computing as three rings, noting that they were starting to overlap and would soon converge. The idea that computing would become a medium, blur the lines between industries, and become a platform for communication began to take root. Wired magazine followed soon after. Then came the Internet.

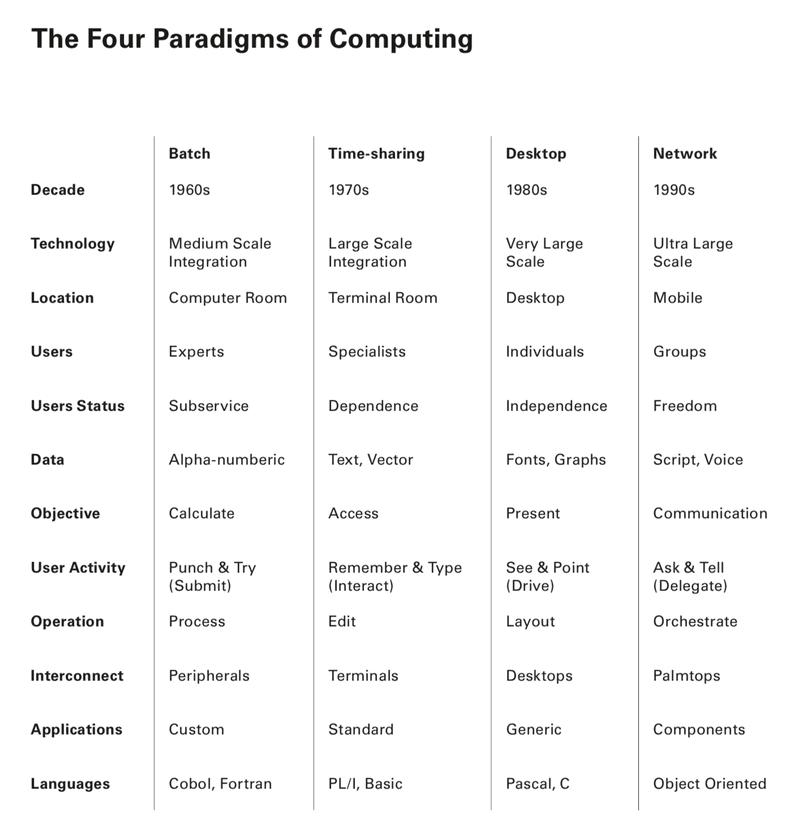

In 1991, Larry Tesler, Apple's senior vice president of products, wrote: "Computers started out as clunky machines served by a technical elite and evolved into desktop tools that obey individuals. The next generation will actively collaborate with users." He described a shift that occurs every decade, summarizing "The Four Paradigms of Computing" (batch, time-sharing, desktop, and network) in a table analyzing each era.

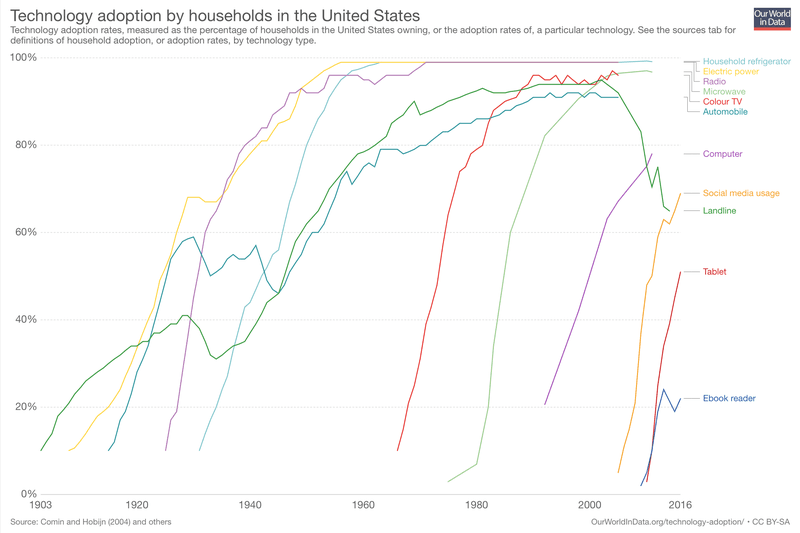

In 2018, Our World in Data updated its chart of consumer technology adoption from 1900 to 2016. The chart shows that technology adoption comes in "waves," much as the economist Joseph Schumpeter predicted. The rate of adoption is accelerating. For example, it took radios about 35 years to reach 90% adoption, while cell phones took less than 10 years.

These three ways of understanding the role of computing in our lives are all time-based. Each records the changes brought by computing as a linear sequence along a time axis. Emerging trends, whether virtual reality, blockchain, or AI, are often described as natural developments or extensions of that sequence. For example, Google CEO Sundar Pichai announced a new set of products under the slogan "AI First," which followed "Mobile First," which followed "Internet," which followed "PC."

The focus on timelines is understandable given the acceleration of change, especially as entrepreneurs, investors, and the media are under pressure to spot the next new thing. However, we have other options. As Simon suggested, we can look at the space of possibilities.

Computational "space"

Using the metaphor of space, the central question becomes: What are the dimensions? What are up and down, front and back, left and right? What follows is not an answer but a suggestion.

Analog and digital

Early computing devices were "analog"; for example, the Antikythera Mechanism, Charles Babbage's Difference Engine, and Vannevar Bush's Differential Analyzer. Likewise, the early communication systems, the telegraph and the telephone, were analog. Some readers may recall that in the early days of network computing, modems converted digital computer signals into analog telephone signals and back again.

Binary digital computing appeared in the 1940s, and by the 1960s it had become the standard, the foundation on which the concept of "modern" computing was built, effectively defeating analog methods. The victory was so complete that today "digital" is synonymous with "computing."

The progress made in "printing" binary digital switches is amazing. Still, something is missing, and there are other options. For many years, Heinz von Foerster ran the Biological Computer Laboratory (funded by the U.S. government) next to the Digital Computer Laboratory (DCL) at the University of Illinois, where he explored analog computing methods. Furthermore, developments in so-called "fuzzy logic" (and other non-Boolean logics) and today's "neural networks" suggest that purely binary digital methods may eventually give way to a grand, Hegelian synthesis of analog and digital. Isn't this what science fiction foresees with "cyborgs" and "wetware"? Isn't this the potential suggested by recent advances in neuroscience?

Centralized and distributed

Computing seems to swing like a pendulum between centralized and distributed poles.

In the early days of digital computing, mainframes were used for centralized processing, such as running payroll, billing customers, and tracking inventory. Time-sharing systems and minicomputers began to distribute access, bringing computing closer to users. A decade later, personal computers popularized computing.

Originally, the Internet was designed to be fully "distributed," with no central node whose loss would make the network vulnerable to attack. In practice, centralized routing facilities such as Internet Exchange Points (IXPs), which can increase speed and reduce costs, can also make the network vulnerable. Internet pioneers touted its democratizing effects, such as allowing anyone to publish information. More recently, Amazon, Facebook, Google, and others have become giant monopolies that centralize access to information and people.
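To make the vulnerability argument concrete, here is a toy sketch in Python (our illustration, not a model of real Internet routing). It finds single points of failure: nodes whose removal alone disconnects the rest of a network. The node names and the two topologies, a hub-and-spoke "star" and a "ring," are hypothetical.

```python
from collections import deque


def is_connected(nodes, edges, removed=None):
    """Breadth-first search: can every remaining node reach every other?"""
    alive = [n for n in nodes if n != removed]
    if not alive:
        return True
    adjacency = {n: set() for n in alive}
    for a, b in edges:
        # Skip any link that touches the removed node.
        if a in adjacency and b in adjacency:
            adjacency[a].add(b)
            adjacency[b].add(a)
    seen = {alive[0]}
    queue = deque([alive[0]])
    while queue:
        for neighbor in adjacency[queue.popleft()]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(alive)


def single_points_of_failure(nodes, edges):
    """Nodes whose loss, on its own, splits the rest of the network."""
    return [n for n in nodes if not is_connected(nodes, edges, removed=n)]


# Hypothetical five-node networks.
nodes = ["hub", "a", "b", "c", "d"]
star = [("hub", x) for x in "abcd"]  # every link passes through the hub
ring = [("hub", "a"), ("a", "b"), ("b", "c"), ("c", "d"), ("d", "hub")]

print(single_points_of_failure(nodes, star))
print(single_points_of_failure(nodes, ring))
```

In the star, losing the hub cuts every spoke off from every other; in the ring, traffic can route around any single loss, which is the property a fully distributed design aims for.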

Related to the centralized vs. distributed dimension is the shift from standalone products to connected products. We do not believe that standalone vs. connected is itself a dimension of computing. Clearly, computing devices "want" to be connected; that is, networked devices are more valuable than standalone products. Moreover, so-called standalone products may not be as independent as the name suggests. In many cases, value is co-created through use of the product. In some cases, use relies on service networks, not to mention production and sales networks. For example, even Kodak's earliest cameras required film, processing, and printing. "Smart, connected products" rely on the Internet to deliver information and services that enhance their value. The question is not whether products will be connected, but whether the network is centralized or decentralized.

Fixed and fluid

Forty years after the advent of the personal computer, much of the software we use still produces "dead" documents: the information in them is "fixed," locked into the document. In a desktop computing environment, applications read and write specific file types, and it is difficult to move information from one application to another. Take a word processor: if you want to add a data-driven chart, you need a spreadsheet application; if you want to add a complex diagram, you need a drawing application. Updating the chart means going back to the original application, and while Microsoft supports some file linking, most people find it easier to re-export and re-import.

Some "authoring tools" try to solve this problem. Apple's HyperCard, launched in 1987, showed that text, numbers, images, and scripts could be combined in a single "fluid" environment.

In 1990, Microsoft introduced OLE (Object Linking and Embedding). Two years later, Apple introduced OpenDoc, a software component framework designed to support compound documents. At least in internal discussions, Apple considered overturning the app-first model and replacing it with a document-first model. However, neither Apple nor any other company has resolved the underlying paradox: users want to work in their data, but apps support developers, and developers create diversity and bring users to the platform.

Data pipeline applications, such as IFTTT, are beginning to solve the problem of connecting siloed data in a networked environment.

In early 2018, Mike Bostock, one of the creators of D3.js, released a beta version of Observable, an interactive notebook for live data analysis, visualization, and exploration. This reactive programming environment was inspired by "explorable explanations," a term coined by Bret Victor. It is also rooted in notebook interfaces such as Project Jupyter (2014), which grew out of IPython, and Stephen Wolfram's Mathematica (1988).
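The core idea of a reactive notebook can be sketched in a few lines of Python. This is a toy under our own assumptions, not how Observable's runtime actually works: each cell is a function of other named cells, and redefining a cell recomputes everything downstream of it in dependency order. The `Notebook` class and its `define` method are hypothetical names.

```python
class Notebook:
    """Toy reactive notebook: each cell is a function of other named cells.

    Redefining a cell recomputes every cell that depends on it, directly or
    transitively, in dependency order. Assumes the cells form no cycles.
    """

    def __init__(self):
        self.formulas = {}  # cell name -> (function, list of input cell names)
        self.values = {}    # cell name -> most recently computed value

    def define(self, name, inputs, fn):
        self.formulas[name] = (fn, list(inputs))
        self._recompute(name)

    def _downstream(self, name):
        # Depth-first walk from `name` to the cells that read it. Reversing the
        # post-order puts every cell after all the cells it depends on.
        order, visited = [], set()

        def visit(cell):
            if cell in visited:
                return
            visited.add(cell)
            for other, (_, inputs) in self.formulas.items():
                if cell in inputs:
                    visit(other)
            order.append(cell)

        visit(name)
        return list(reversed(order))

    def _recompute(self, name):
        for cell in self._downstream(name):
            fn, inputs = self.formulas[cell]
            # Only evaluate once every input has a value.
            if all(i in self.values for i in inputs):
                self.values[cell] = fn(*(self.values[i] for i in inputs))


nb = Notebook()
nb.define("price", [], lambda: 10)
nb.define("qty", [], lambda: 3)
nb.define("total", ["price", "qty"], lambda p, q: p * q)
nb.define("qty", [], lambda: 5)  # "total" updates without being re-run by hand
print(nb.values["total"])
```

The design choice worth noticing is that the user never re-runs `total`: editing `qty` is enough, which is what distinguishes a reactive notebook from one where cells run only when clicked.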

Control and cooperation

Simple tools require our active control. In a sense, we "push around" our tools: not just brooms and hammers but even complex tools like the violin. However, certain classes of tools have a degree of independence. Once we choose a set point, a thermostat can operate without our direct involvement. Smart thermostats like Nest (2011) can even try to "learn" our behaviors and adapt to them, adjusting their own set points as they operate.
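As a rough sketch of the two behaviors described here (not Nest's actual algorithm, which we do not know), the Python below pairs a classic on/off controller with hysteresis around a set point with a hypothetical "learning" rule that nudges the set point toward the user's manual adjustments. The function names, the 0.5-degree band, and the learning rate are assumptions chosen for illustration.

```python
def thermostat_step(temperature, set_point, heating, band=0.5):
    """On/off control with hysteresis around a fixed set point.

    Heat when the room drops below the band, stop when it rises above it,
    and otherwise keep doing whatever we were already doing.
    """
    if temperature < set_point - band:
        return True
    if temperature > set_point + band:
        return False
    return heating


def learn_set_point(current, manual_adjustment, rate=0.3):
    """Hypothetical learning rule: an exponential moving average that moves
    the stored set point part of the way toward the user's latest choice."""
    return current + rate * (manual_adjustment - current)


# A user keeps turning the heat up to 22 degrees; the stored set point drifts
# toward that preference without the user ever programming it directly.
set_point = 20.0
for _ in range(3):
    set_point = learn_set_point(set_point, 22.0)
print(round(set_point, 2))
```

The first function is the plain thermostat that needs only a set point from us; the second is what lets a "smart" one adjust that set point on its own.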

Apart from exceptions such as smart thermostats, most of our interactions with computing systems require active control. For example, in Autodesk's AutoCAD (1982), buildings don't draw themselves (though the software may try to predict what we will do next and render it ahead of time). However, we may wish for more.

Negroponte, founder of MIT's Architecture Machine Group (the predecessor of the MIT Media Lab), did wish for more. He was not interested in building a drawing tool; he wanted architects to hold meaningful conversations with architecture machines, as if the machines were colleagues, albeit with different abilities.

Gordon Pask, a frequent visitor to and consultant for the Architecture Machine Group, was interested in second-order cybernetics, conversation theory, and interaction between humans and machines. One of Pask's works, "Musicolour," is an installation that responds to musicians' performances with light. Interestingly, the installation does not respond directly to specific sounds but to novelty in the music being played. If a musician plays a repetitive rhythm, Musicolour gets "bored" and stops producing any visual output. As a result, in the course of their "dialogue" with Musicolour, musicians reflect on and change what they are playing.
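The Python below does not reproduce how Musicolour was actually built. It is a toy sketch, under our own assumptions, of the habituation principle just described: each repetition of an input dampens the response to it, inputs that are not being played slowly regain interest, and below a threshold the responder goes dark. Reducing a performance to a stream of discrete symbols (such as note names), the `HabituatingResponder` class, and its `decay`, `recovery`, and `threshold` parameters are all hypothetical.

```python
from collections import defaultdict


class HabituatingResponder:
    """Toy novelty-driven responder, loosely inspired by Musicolour.

    Assumes the performance arrives as discrete symbols (e.g. note names).
    """

    def __init__(self, decay=0.5, recovery=0.1, threshold=0.2):
        self.decay = decay          # how strongly each repetition dampens interest
        self.recovery = recovery    # how fast interest in other symbols recovers
        self.threshold = threshold  # below this level the responder is "bored"
        self.interest = defaultdict(lambda: 1.0)  # unheard symbols start fully novel

    def hear(self, symbol):
        """Return the light intensity (0.0 = dark) for one incoming symbol."""
        level = self.interest[symbol]
        # Habituation: hearing the symbol again makes it less interesting.
        self.interest[symbol] = level * self.decay
        # Every other symbol heard so far slowly becomes interesting again.
        for other in self.interest:
            if other != symbol:
                self.interest[other] = min(1.0, self.interest[other] + self.recovery)
        return level if level >= self.threshold else 0.0


responder = HabituatingResponder()
print([responder.hear("C") for _ in range(4)])  # repetition fades to darkness
print(responder.hear("E"))                      # a new note draws a full response
```

With these parameters, playing the same note four times yields responses of 1.0, 0.5, 0.25, and then 0.0, while a different note immediately draws a response of 1.0. The performer, not the machine, has to change to keep the conversation going.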

In the age of Facebook and Alexa, it might be wise to revisit the notions of collaboration and dialogue that Negroponte, Pask, and others explored.

Ephemeral and lasting

Most digital products have a short lifespan, as new models and types of products are released all the time. As a result, formats change frequently, which makes it difficult to revisit past information over the long run. Try to find a DVD or CD player, let alone a SyQuest drive or floppy disk drive. It is much easier to read a book from 1500 than a document from 1995.

Have you ever tried to revisit a website only to find it gone? To address this, the Internet Archive, a nonprofit digital library, lets users access archived web pages through its Wayback Machine. (On the other hand, the EU has established a "right to be forgotten.")

This dimension resembles the concept of "shearing layers," coined by architect Frank Duffy and later called "pace layering" by Stewart Brand. In How Buildings Learn: What Happens After They're Built, Brand describes a building as several layers that change at different speeds. Brand later extended pace layering to culture, describing a series of layers that move at different speeds. More generally, one aspect of a system's resilience is its ability to accommodate change at different speeds. So far, our computing systems have lacked that kind of resilience.

Peeking and immersion

Today, we use mobile devices as a kind of portal. We "peek" into the digital world through their rectangular screens. However, the digital world doesn't have to be trapped in these little rectangles.

The term "virtual reality" was first used in a science fiction context in Damien Broderick's 1982 novel The Judas Mandala. Since then, a growing body of research has developed around virtual and augmented reality. Both research directions bring us a step closer to interacting directly with digital environments.

On the other hand, Dynamicland, a computing research group co-founded by Alan Kay and Bret Victor, is exploring "dynamic reality": augmenting physical reality with cameras, projectors, robots, and more. They are building a public computing system in which an entire physical space is activated, so that we can "enter" a digital environment while maintaining a "presence" in the physical world and interacting with physical objects. Unlike virtual or augmented reality, Dynamicland is not creating an illusion or hallucination. By moving from peeking into digital environments to truly ubiquitous computing environments, we can finally go beyond screens and engage not just our fingers and eyes but our entire bodies.

Consumption and Creation

Currently, only people with programming skills or substantial resources can create digital products. This relegates everyone else to being consumers rather than creators or authors. When we create digital products, we think of our audience as "users." The concept of a "user" sometimes assumes that a person's primary goal is consumption rather than play, creation, or conversation. What if digital environments were inclusive enough that anyone could create? How do we provide tools that empower everyone to be an author?

Today, most mobile devices are used to consume information; we swipe to buy items, watch videos, browse news, and more. Mobile devices offer few easy ways to create digital products. Mobile apps still have to be coded on desktops and laptops.

Even on the World Wide Web, which we visit every day, our main interaction is "surfing". Creating a website or even a web page still requires special skills.

Still, blogs, vlogs, Pinterest, and other publishing tools offer hope. And tools like HyperCard and Minecraft (2009) show what "empowerment" might look like if we allowed not only professional programmers but anyone to create digital products.

Other possible dimensions

The above is not a conclusion but a suggestion to explore and map the dimensions that make up a possible future computing "space." By shifting our focus from product-improvement roadmaps to the space of possibilities, we can have a different set of conversations about the future we want to build.

The dimensions presented above are by no means complete or definitive. We considered other dimensions, including:

- Serial processing with a single clock vs. parallel (or concurrent) processing with multiple clocks (perhaps an aspect of centralized vs. distributed)

- Cathedral vs. bazaar, top-down vs. bottom-up, proprietary vs. open source (perhaps also aspects of centralized vs. distributed, among others)

- Quality engineering vs. agile prototyping (perhaps another aspect of pace layering)

- Virtual vs. physical (perhaps an aspect of peeking vs. immersion)

- Rich media vs. text, GUI vs. command line, mouse vs. keyboard (all perhaps, broadly speaking, aspects of consumption vs. creation)

- Automation vs. augmentation (again, an aspect of consumption vs. creation)

Chances are, readers will imagine more dimensions. In a way, that is the crux of the matter: sparking a discussion about which dimensions are important and what we value.

Mapping paths through the "space" of computing

By studying the dimensions of the "space" of computing, we can locate, and even map, historical, existing, and proposed computing systems. Let's revisit the computing paradigms of the past and locate them in the "space" of computing.

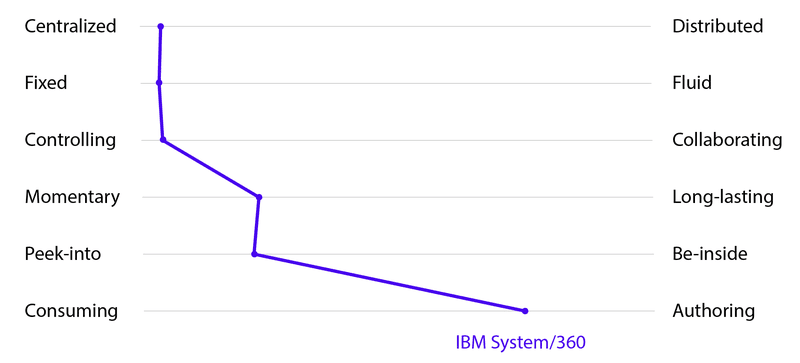

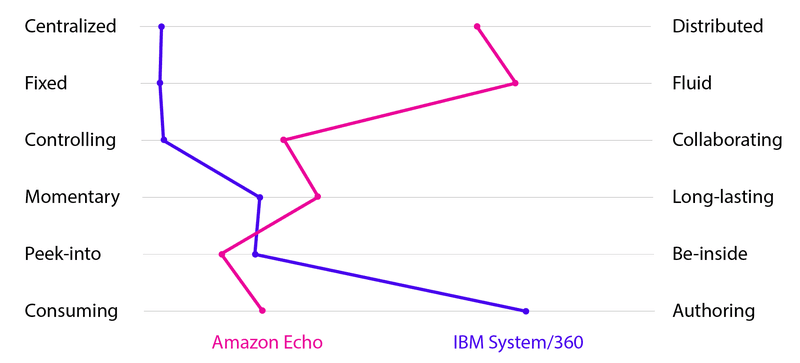

We can take a closer look by studying a specific product. In 1964, IBM introduced the IBM System/360, the first family of computers designed to cover a wide range of applications. It was revolutionary because customers could buy a low-end model and upgrade to larger ones over time. If the IBM System/360 were placed in the "space" of computing, what would its coordinates be? As a mainframe, its computing power was centralized, concentrated in its physical installation. It was designed to be used in fixed ways, with little flexibility. It "listened" for commands, acting only when an operator pressed a button or entered information. It was delivered from 1965 to 1978, a span of more than a dozen years. What it was computing became visible only when it output results. And it let operators input what needed to be computed.

Below, each of the six dimensions described above is represented by a row. (Analog vs. digital is omitted because nearly all examples from the past 70 years are digital.) The IBM System/360 is plotted as a point on each dimension, and a path connecting the points forms the overall pattern, or configuration, of the example. Admittedly, these points are rough approximations and open to question.
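The "snake" diagram can be thought of as a simple data structure: one position per dimension, each lying between two poles. The Python sketch below treats the first term of each section heading as the left pole (0.0) and the second as the right pole (1.0), renders a profile as one text row per dimension, and measures how far apart two paths are. The pole labels are our paraphrase of the headings, the `Profile`, `snake`, and `distance` names are hypothetical, and the example profiles are placeholders, not readings of the article's figures.

```python
from dataclasses import dataclass

# Pole labels paraphrase the six section headings (analog vs. digital omitted).
# Left pole = 0.0, right pole = 1.0; this orientation is an assumption.
DIMENSIONS = [
    ("centralized", "distributed"),
    ("fixed", "fluid"),
    ("control", "cooperation"),
    ("ephemeral", "lasting"),
    ("peeking", "immersion"),
    ("consumption", "creation"),
]


@dataclass
class Profile:
    name: str
    positions: list[float]  # one value in [0.0, 1.0] per dimension

    def __post_init__(self):
        if len(self.positions) != len(DIMENSIONS):
            raise ValueError("need exactly one position per dimension")
        if not all(0.0 <= p <= 1.0 for p in self.positions):
            raise ValueError("positions must lie between 0.0 and 1.0")


def snake(profile, width=21):
    """One text row per dimension, with '*' marking the profile's point.

    Reading the '*' marks from top to bottom traces the profile's path.
    """
    rows = []
    for (left, right), p in zip(DIMENSIONS, profile.positions):
        track = ["-"] * width
        track[round(p * (width - 1))] = "*"
        rows.append(f"{left:>12} |{''.join(track)}| {right}")
    return "\n".join(rows)


def distance(a, b):
    """Mean absolute gap per dimension: 0.0 = same path, 1.0 = opposite poles."""
    return sum(abs(x - y) for x, y in zip(a.positions, b.positions)) / len(DIMENSIONS)


# Hypothetical placeholder profiles, for illustration only.
a = Profile("hypothetical A", [0.1, 0.2, 0.1, 0.9, 0.2, 0.8])
b = Profile("hypothetical B", [0.6, 0.3, 0.2, 0.3, 0.1, 0.1])
print(snake(a))
print(f"distance between A and B: {distance(a, b):.2f}")
```

A single distance number hides where two paths diverge, so the per-row rendering matters more than the score: it is the shape of the snake, not its summary, that supports comparing purpose and approach.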

Today, computers have not only shrunk but can take many different forms. For example, the Amazon Echo, introduced in 2014, can be placed anywhere in your home and answers simple questions by voice. Where does the Echo fit in the "space" of computing compared with the IBM System/360? As a voice assistant, it connects and communicates with other distributed services on the network through APIs. While it helps you keep a shopping list (short term), the information stays in the Amazon ecosystem (long term). Its responses are currently very limited; it expects your question or request to be straightforward. While it is unclear how long the device itself will last, the Amazon Echo (for better or worse) retains your order history, preferences, and more. As a black cylinder, it can hardly convey to the average user what it is processing, listening to, and interpreting, beyond showing what it has recorded in the Amazon Echo app. The product is largely built for consumption within the Amazon ecosystem.

We mapped the Amazon Echo in a similar fashion.

By looking at the "snake" diagrams for the IBM System/360 and Amazon Echo, we can see that the two products trace two different paths in the "space" of computing. Comparing these two "snake" diagrams side-by-side, we can begin to understand the differences between the two products, not only at the level of hardware, operating systems, and programming languages, but also in terms of how they enhance and expand our human capabilities.

As we have seen, the Amazon Echo is more flexible as a platform and product, but it is geared toward "consumption," whereas the IBM System/360, at least for its users, was in many ways more open to authoring.

As the comparison above begins to show, if we plot more products in the "space" of computing, we may find that recent and current products sit toward the left on most dimensions. One reason may be that organizations and their managers are under enormous pressure to achieve growth.

In the past, when we thought of computing milestones, we naturally placed them on timelines. On a timeline, the Amazon Echo simply comes 50 years after IBM introduced the System/360. However, by comparing two products from different computing eras along these dimensions, we can discuss them not in terms of their "progress" over time but in terms of their similarities and differences in purpose and approach.

Our mental models, the frameworks in which we place information in context, support thinking about particular goals. The Lean Startup methodology helps us iterate quickly and learn what our customers like. The Business Model Canvas helps us visualize a business's value proposition and the resources needed to operate it. User-centered design, as often practiced, focuses on creating products for people while largely assuming that they will use and consume them, and rarely goes beyond that. While these frameworks have served us well in a consumer-centric world, we have few frameworks for thinking about other kinds of computing.

Our responsibility

The future is not predetermined. It has yet to be invented.

While tech trends dominate tech news, influencing what we think is possible and credible, we are free to choose. We don't have to accept what a monopoly has to offer. We can still create the future in our own way. We can go back to the values that drove the PC revolution and inspired the first generation of the Internet.

Glass rectangles and black cylinders are not the future. By searching the "space of alternatives" for computing systems, as Simon suggests, we can imagine other possible futures: paths not yet taken. Some dimensions of this "space" are currently less recognizable than others, but by researching them jointly and thereby illuminating what remains unexplored, we can together create an alternative future.

Compiled from: Journal of Design and Science article Defining the Dimensions of the “Space” of Computing
